2021 Eclipse Cyclone DDS ROS Middleware Evaluation Report with iceoryx and Zenoh

Index

- Introduction

- Performance

- Services

- WiFi

- Features

- Quality

- Free-form

- Appendix A: Test Environment

- Appendix B: Performance Summary Per Platform

- Appendix C: Tests that fail with OS network buffer defaults

Introduction

This report is our response to the Default DDS Provider Questionnaire provided by Open Robotics. This report includes everything required to understand and reproduce the test results. Artifacts provided include how-to instructions for reproducing everything in this report, including test scripts, graph plot scripts, raw data, tabulated data, detailed test result PDF for each test, and the summary plots you see below.

The Eclipse Foundation eclipse.org provides our global community of individuals and organizations with a mature, scalable, and business-friendly environment for open source software collaboration and innovation. The Foundation is home to the Eclipse IDE, Jakarta EE, and over 350 open source projects, including runtimes, tools, and frameworks for a wide range of technology domains such as the Internet of Things, automotive, geospatial, systems engineering, and many others. The Eclipse Foundation is a European-based international not-for-profit association supported by over 300 members who value the Foundation’s unique Working Group governance model, open innovation processes, and community-building events.

Eclipse Cyclone DDS and Eclipse iceoryx are projects of the Eclipse IoT and Eclipse OpenADx (Autonomous Driving) WGs. Eclipse Zenoh is a project of Eclipse IoT and Eclipse Edge Native WG.

Eclipse Cyclone DDS is a small, easy and performant implementation of the OMG DDS specification. Eclipse iceoryx is a zero-copy pub/sub implementation for high bandwidth sensors such as cameras, LiDARs and is built into Cyclone DDS 0.8 and later. Eclipse Zenoh ROS 2 DDS plugin adds support for distributed discovery, wireless, WAN, Internet, microcontrollers, micro-ROS and is in the next ROS 2 Rolling sync. Example Zenoh users include 5G cloud robotics EU Horizon 2020 at Universidad Carlos III de Madrid, Indy Autonomous Challenge ROS 2 racecars and US Air Force’s Ghost Robotics ROS 2 robodogs.

Users get technical support via github issues, cyclonedds gitter, iceoryx gitter and zenoh gitter. Technical support is provided by the contributors and the user community. Project documentation besides READMEs is here: cyclonedds.io, iceoryx.io, zenoh.io and blog.

Contributors to Eclipse Cyclone DDS with iceoryx and Zenoh include ADLINK, Apex.AI, Bosch, Open Robotics, Rover Robotics and dozens of ROS community members.

These are active projects as you can see here: cyclonedds, iceoryx, zenoh, rmw_cyclonedds, zenoh-plugin-dds, zenoh-pico, cyclonedds-cxx. Note that Eclipse Foundation Development Process results in contributions being made as a smaller number of larger and thoroughly tested chunks. This means that the number of commits instead of number of pull requests is a more appropriate way to measure the liveliness of Eclipse projects.

ADLINK’s DDS team co-invented DDS, co-founded the OMG DDS SIG and contributed much of the original DDS specification. ADLINK DDS team co-authored many of the current DDS Specifications and actively participates in spec revisions and future DDS related specs. ADLINK co-chairs the OMG DDS Technical Committee.

Performance

A large number of tests were run with different combinations of data type, message size, message frequency, number of subscribers, and Quality of Service (QoS) settings. Data types and sizes ranged from struct16 to pointcloud8m. Frequencies ranged from 1Hz to 500Hz, plus unlimited free-running. Experiments were run with 1 to 10 subscribers. Each scenario was repeated with two different QoS configurations (originally there were 4 QoS configurations, but we realized there was no difference in the results). Scaling tests were run for 1 to 50 topics and nodes. The tests, raw data, tabulated data, and plots are here. The test result summary spreadsheets are here.
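To give a feel for how such a test matrix multiplies out, here is a minimal Python sketch that enumerates combinations of the dimensions named above. The specific values are illustrative assumptions: the topic names, rates and subscriber counts are a subset borrowed from Appendix C, and using reliable/best-effort as the two QoS configurations is a guess, not something stated in this report. The real matrix lives in the linked test scripts.

```python
from itertools import product

# Illustrative values only (assumptions); the report's actual matrix is larger.
topics = ["Struct16", "Array2m", "PointCloud1m", "PointCloud4m", "PointCloud8m"]
rates_hz = [1, 20, 100, 500, 0]   # 0 means unlimited (free-running)
subscribers = [1, 3, 10]
reliable = [True, False]          # hypothetical stand-in for the two QoS configurations


def build_matrix():
    """Return one dict per scenario in the illustrative matrix."""
    return [
        {"topic": t, "rate": r, "subs": s, "reliable": q}
        for t, r, s, q in product(topics, rates_hz, subscribers, reliable)
    ]


matrix = build_matrix()
print(len(matrix), "scenarios")
```

Even this reduced set yields 5 x 5 x 3 x 2 scenarios per platform and transport mode, which is why the results are summarised as win/fail counts rather than listed individually.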

Throughput and latency for large messages

Without configuration, what is the throughput and latency (in addition to any other relevant metrics) when transferring large topics (like ~4K camera images) at medium frequencies (~30Hz)?

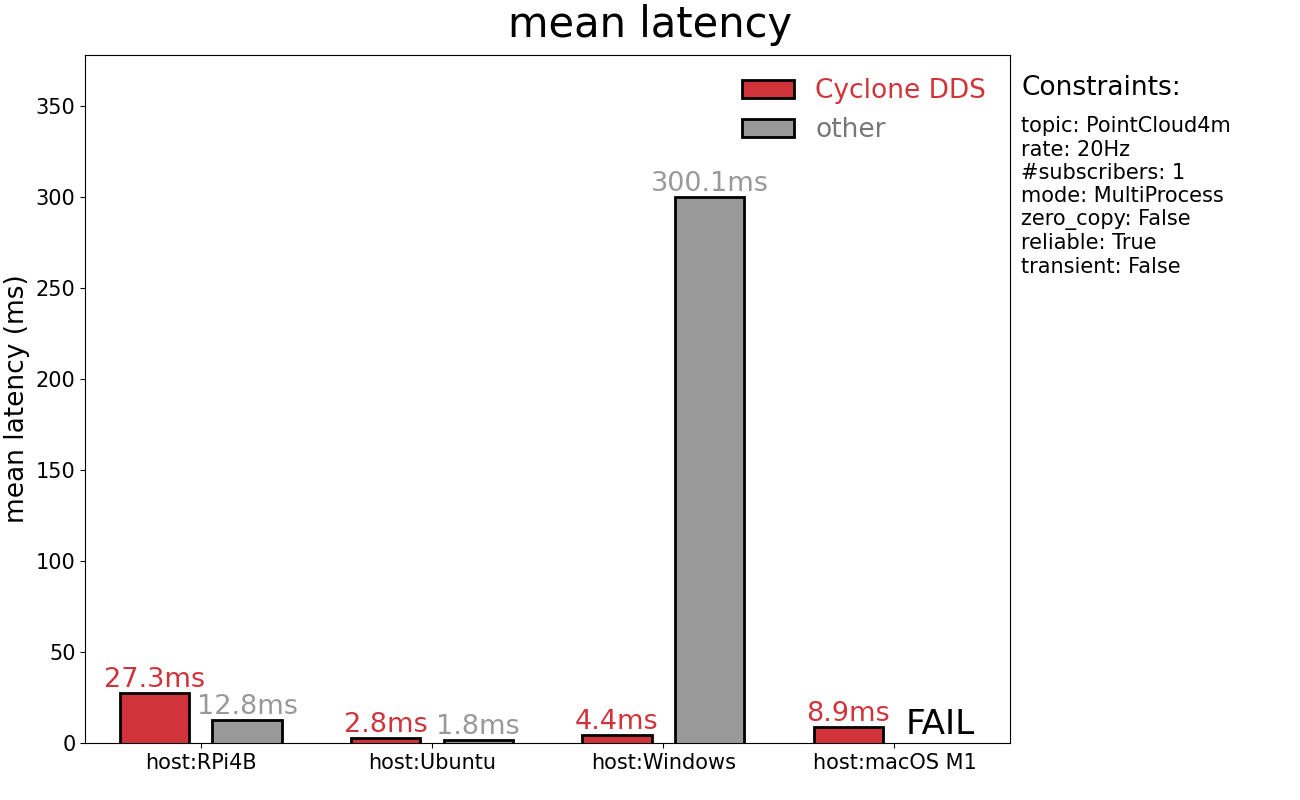

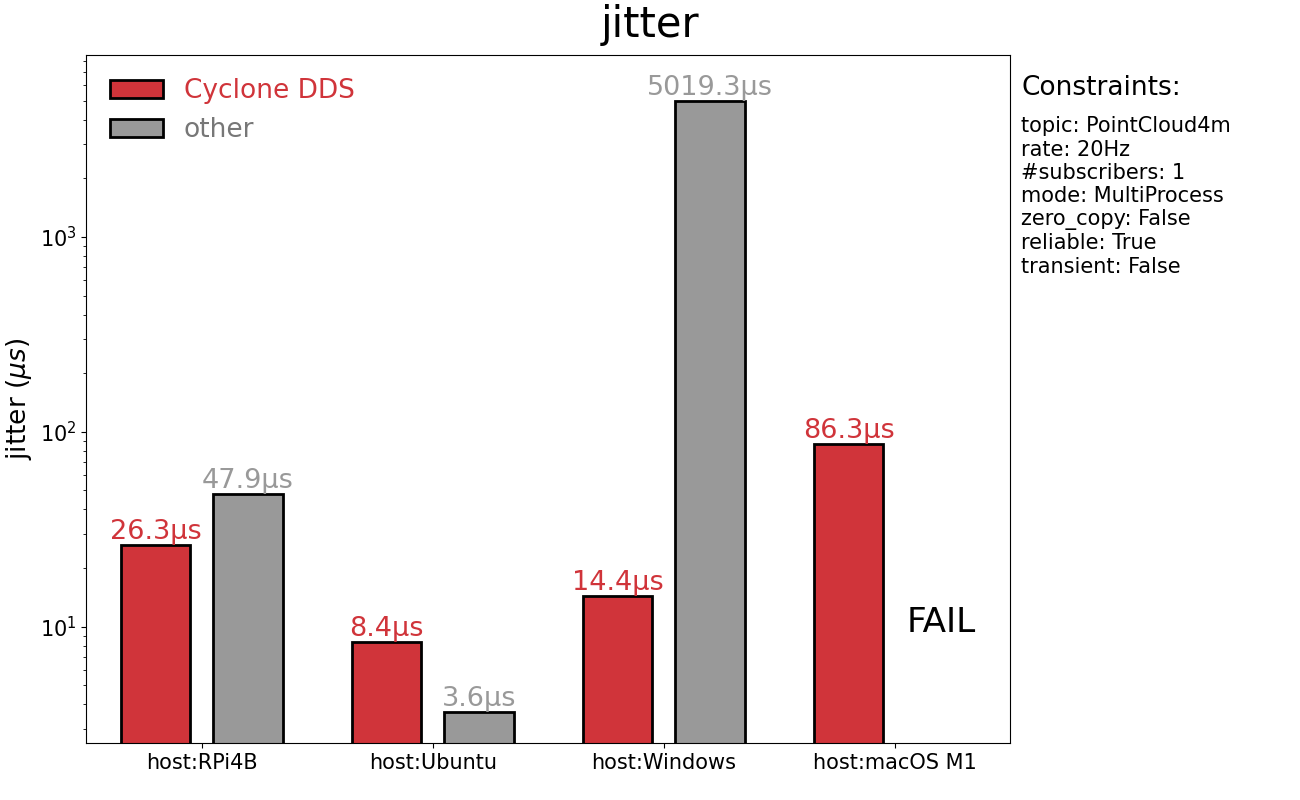

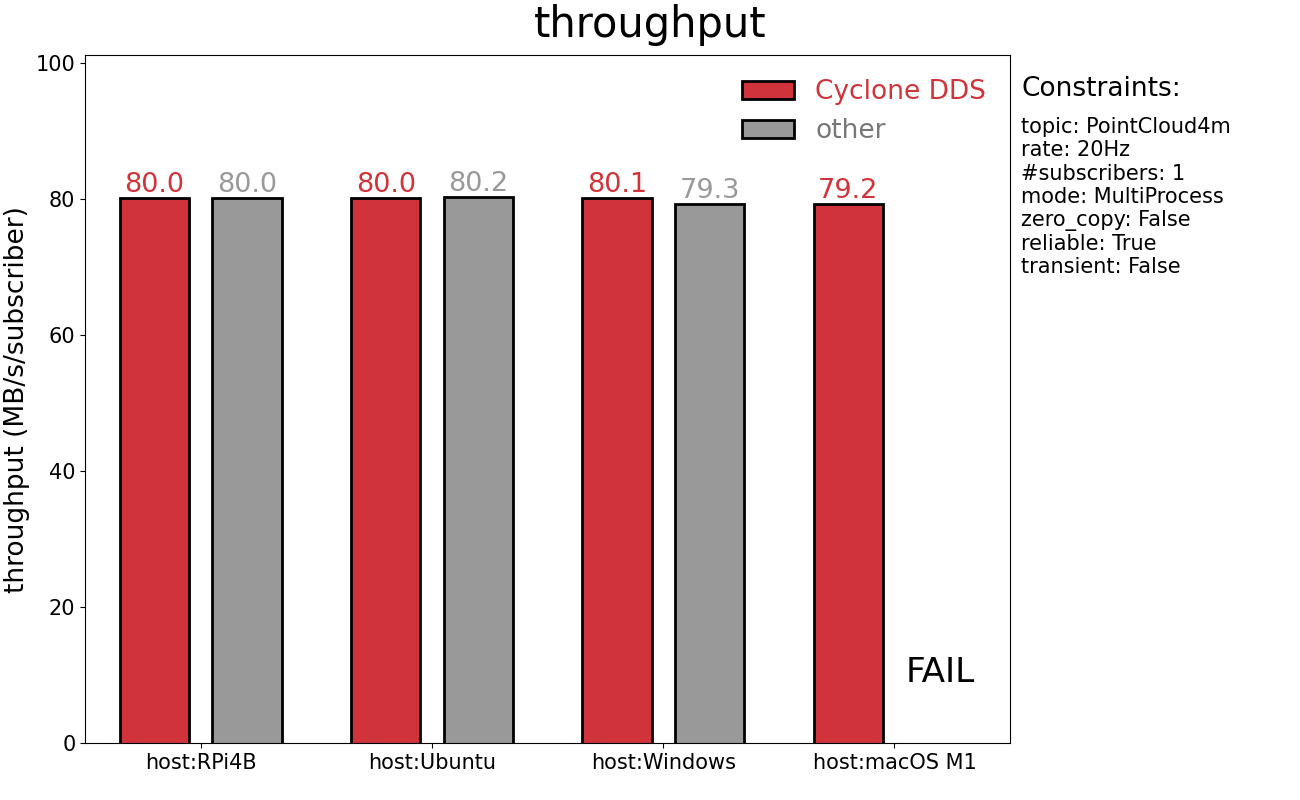

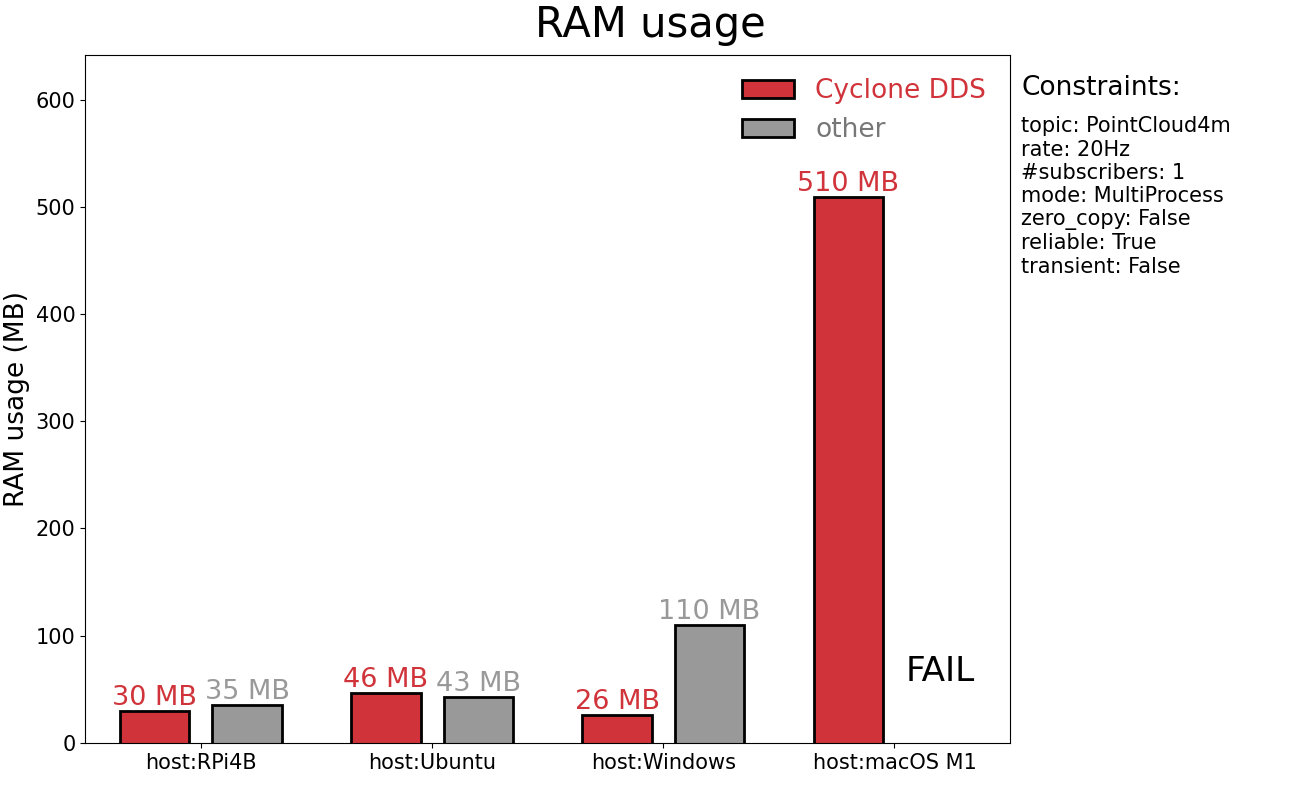

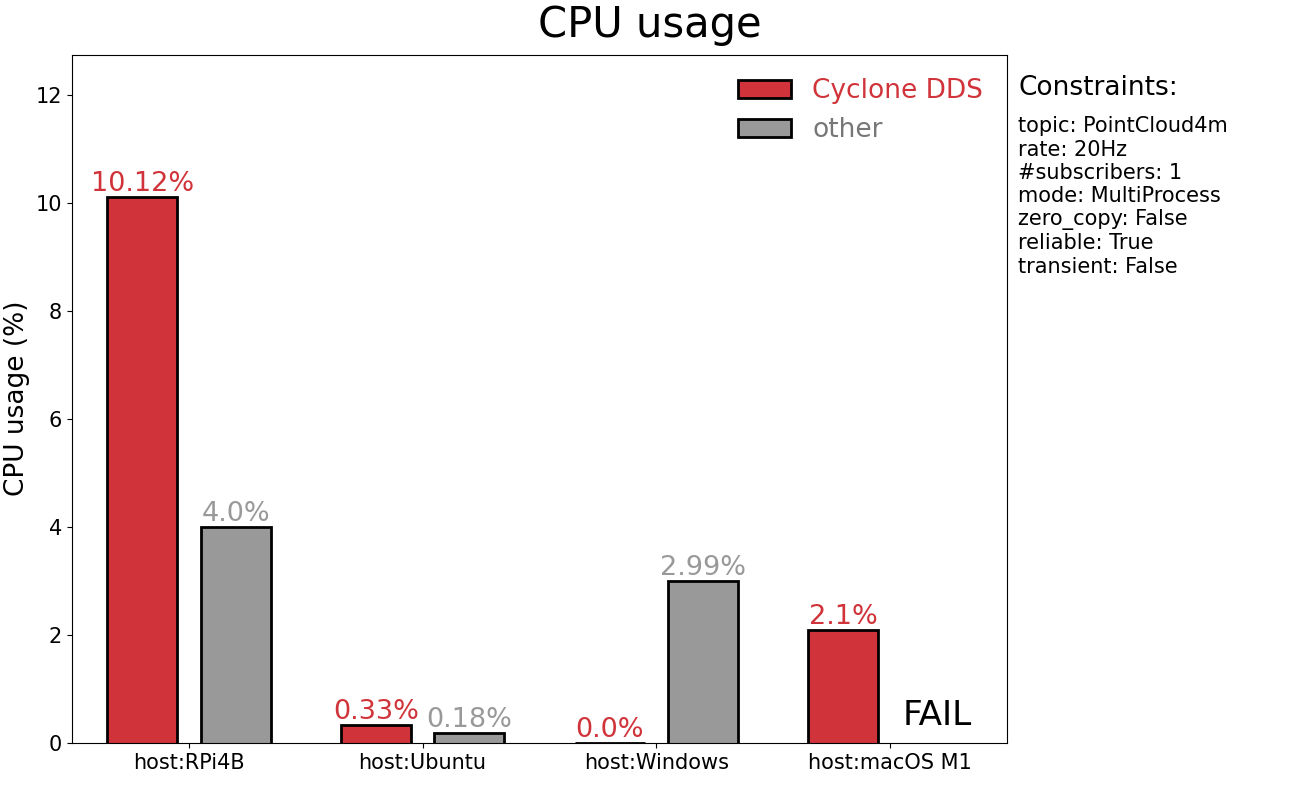

Shown above are the results for 4MB messages at 20Hz. How-to instructions, test scripts, raw data, tabulated data, tabulation scripts, plotting scripts and detailed test result PDFs for every individual test are here.

Note: Cyclone DDS CPU usage is reported as “0.0%” on Windows 10 because the usage was below the resolution of the timing measurement. The CPU usage on Windows 10 was probably similar to the Ubuntu results, but we simply couldn’t measure it in this test run.

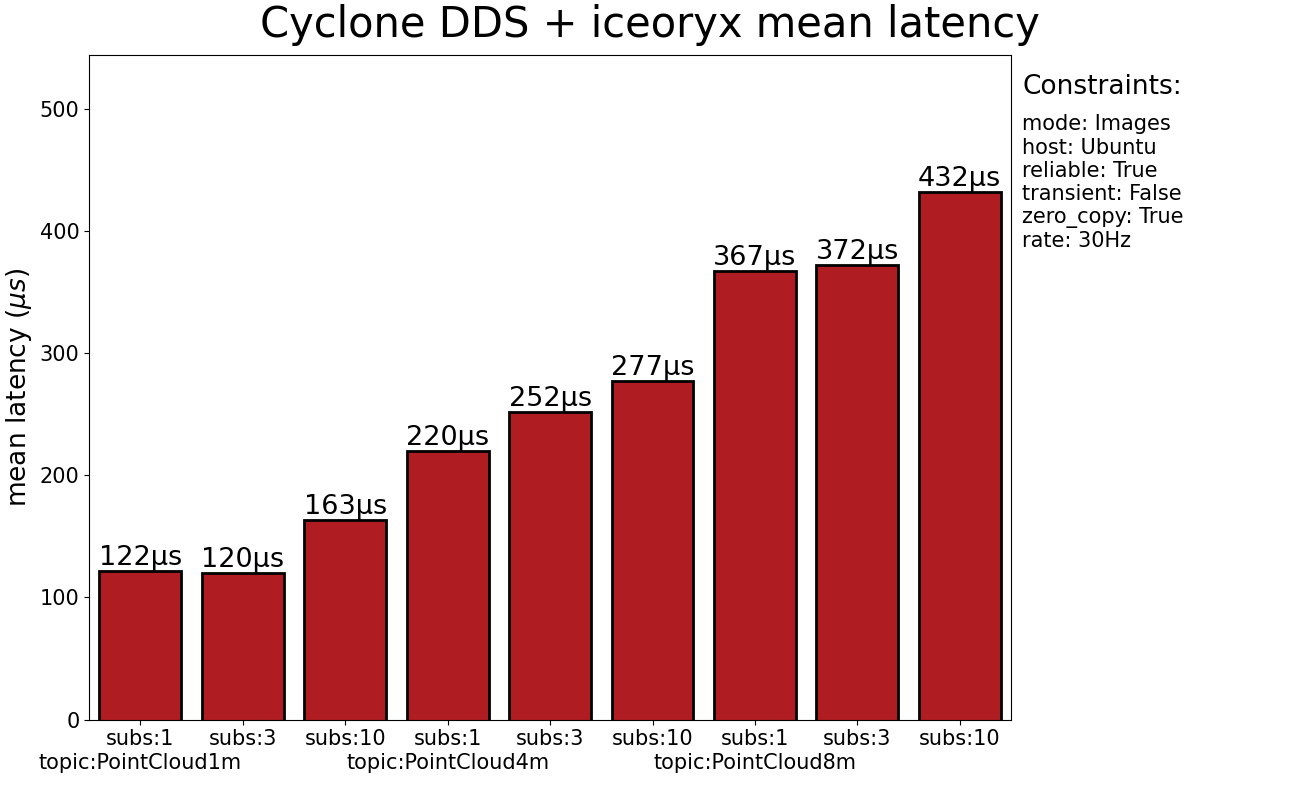

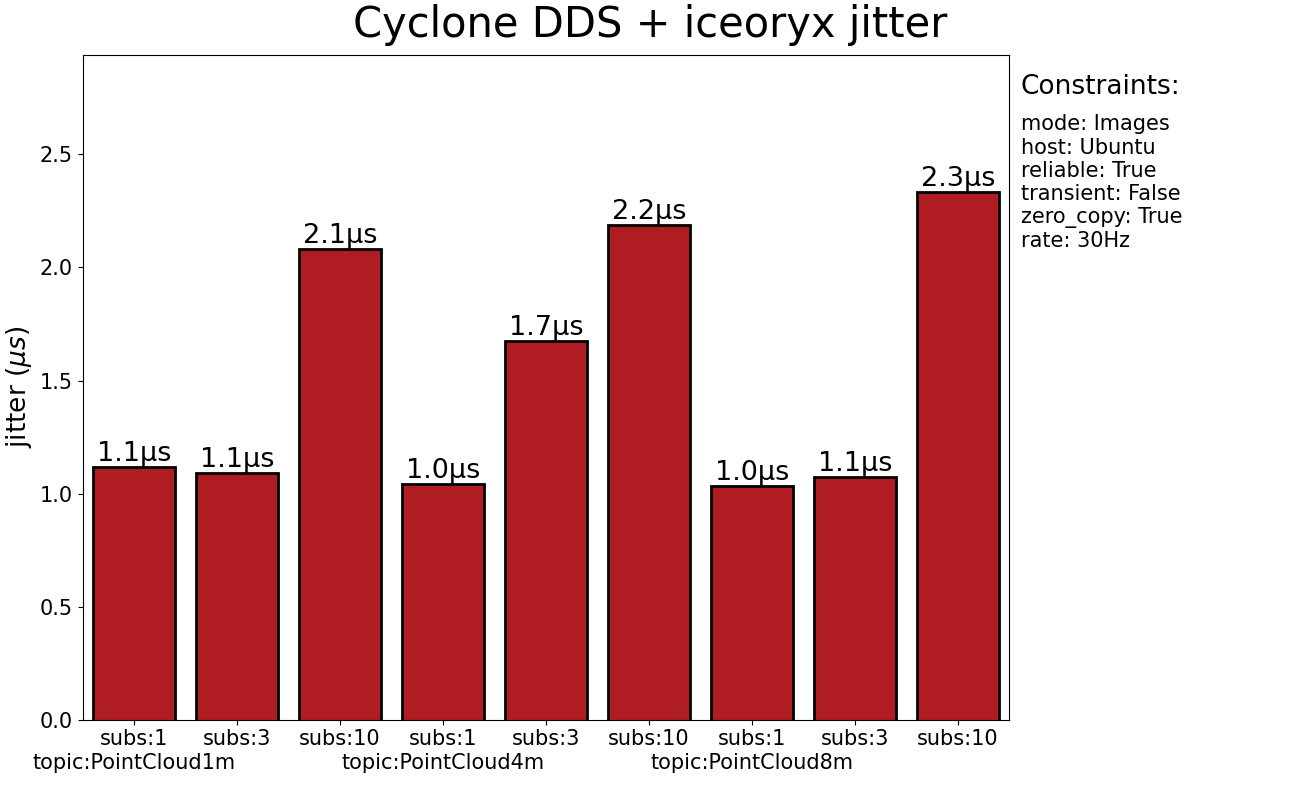

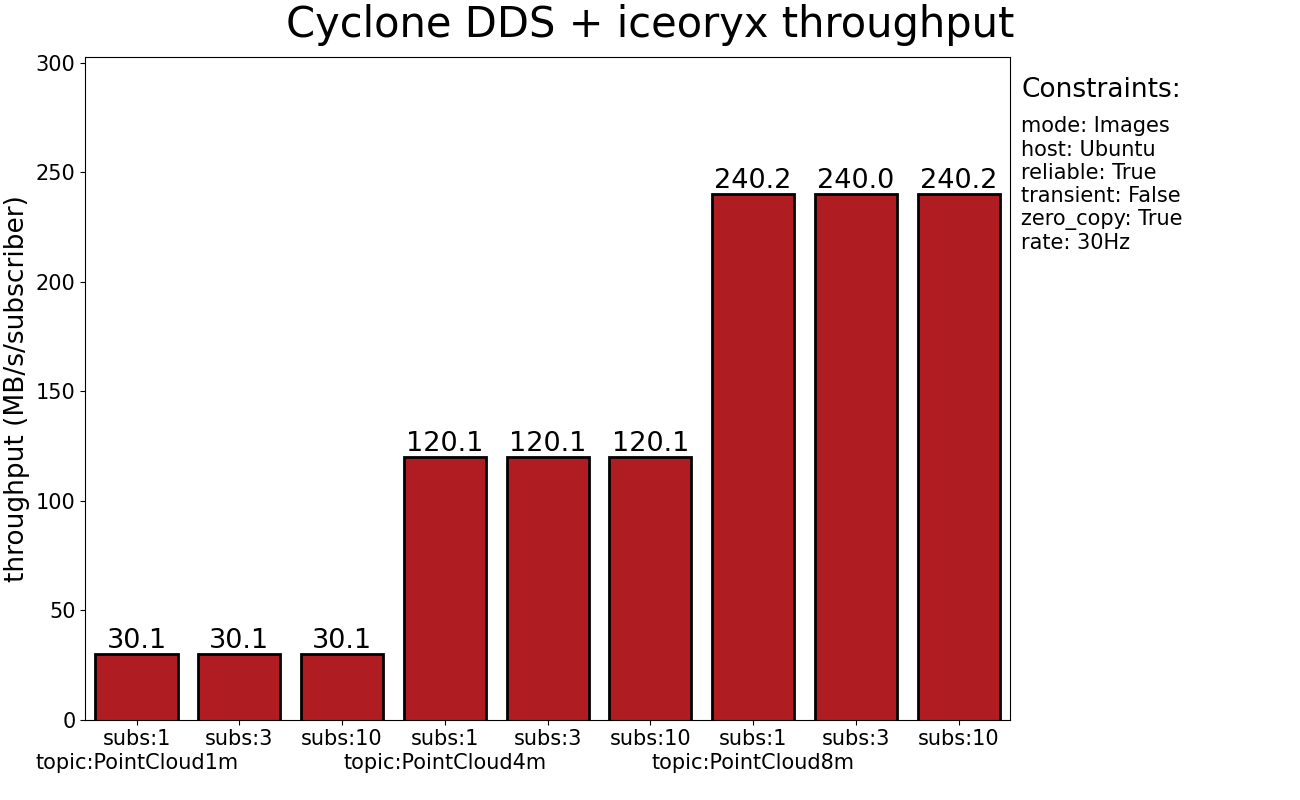

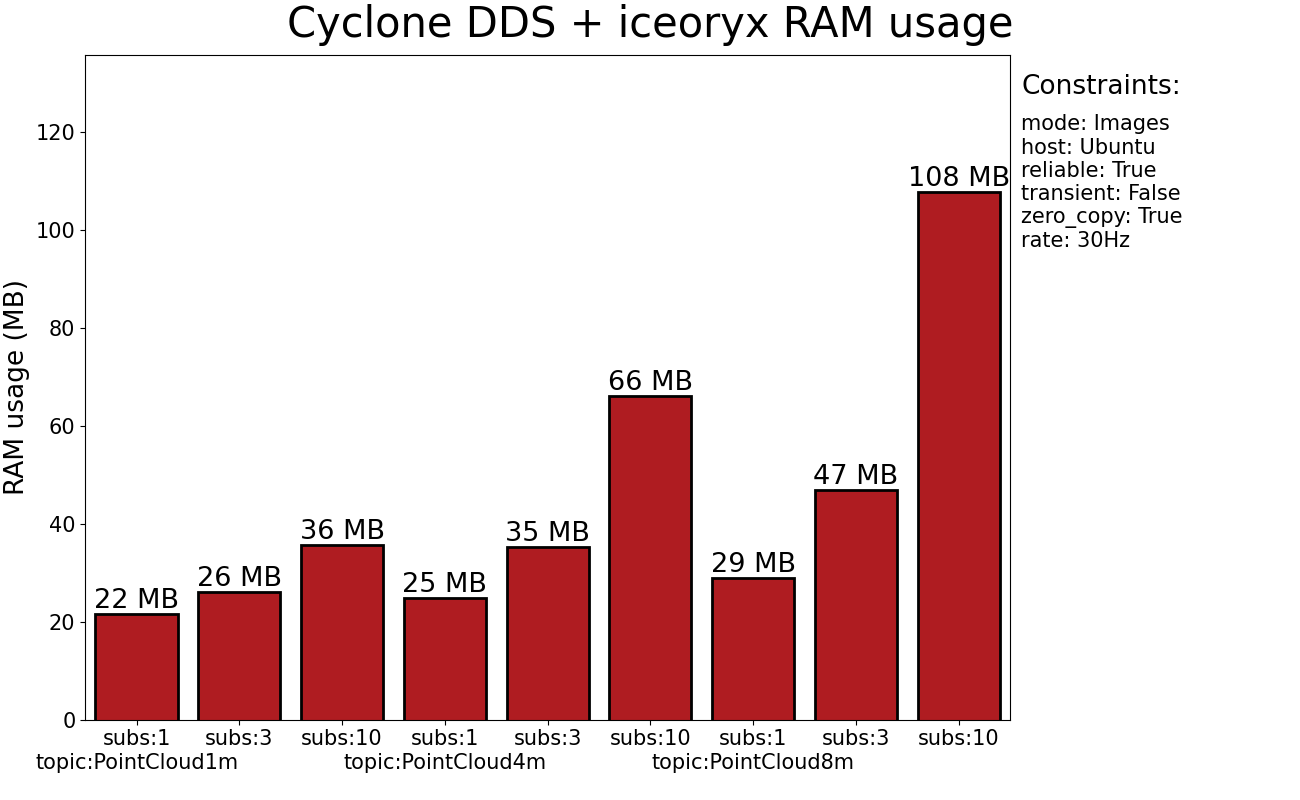

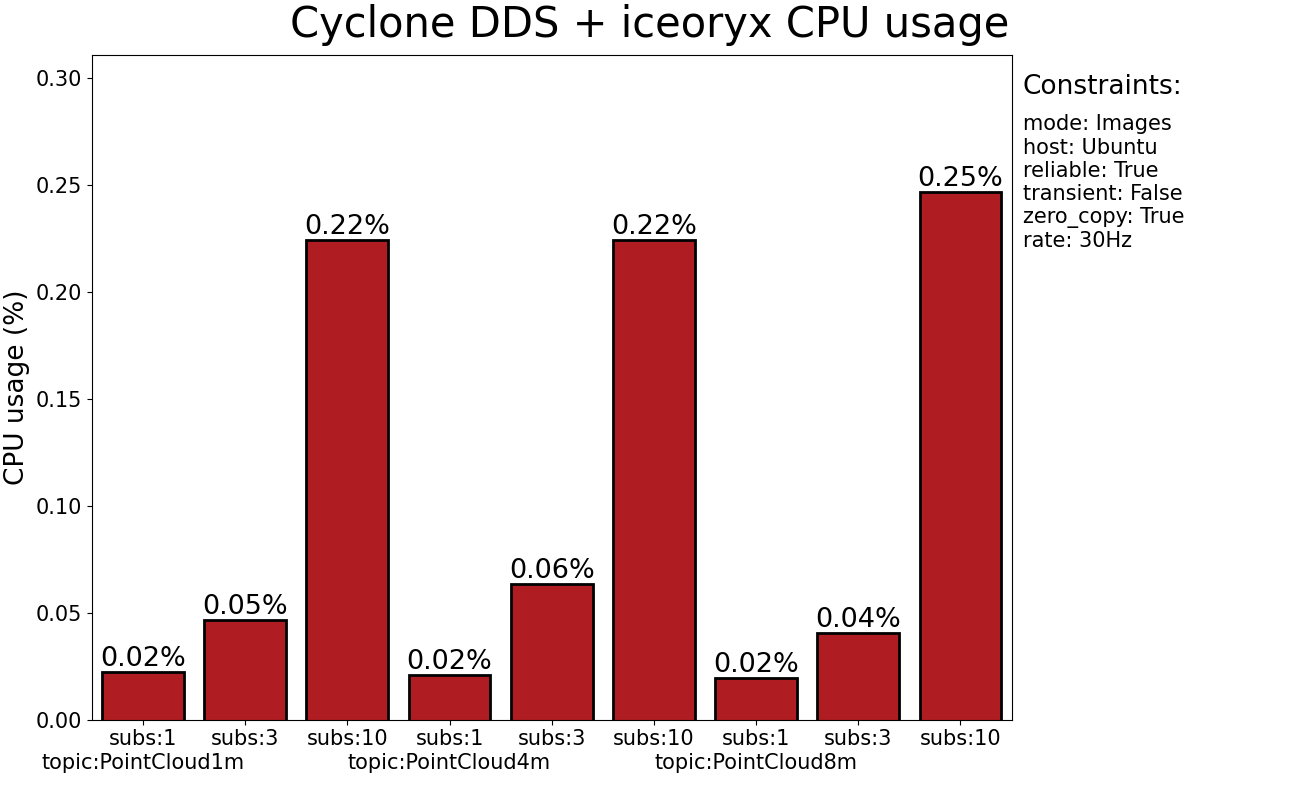

The tests were run again at 30Hz using the rclcpp RMW LoanedMessage API with shared memory. Results are shown for Cyclone DDS + iceoryx. The instructions to use zero-copy with Cyclone DDS + iceoryx are here. Software engineers at ADLINK & Apex.AI followed the other middleware’s published instructions to use the LoanedMessage API, but the other middleware failed these tests without getting any messages through.

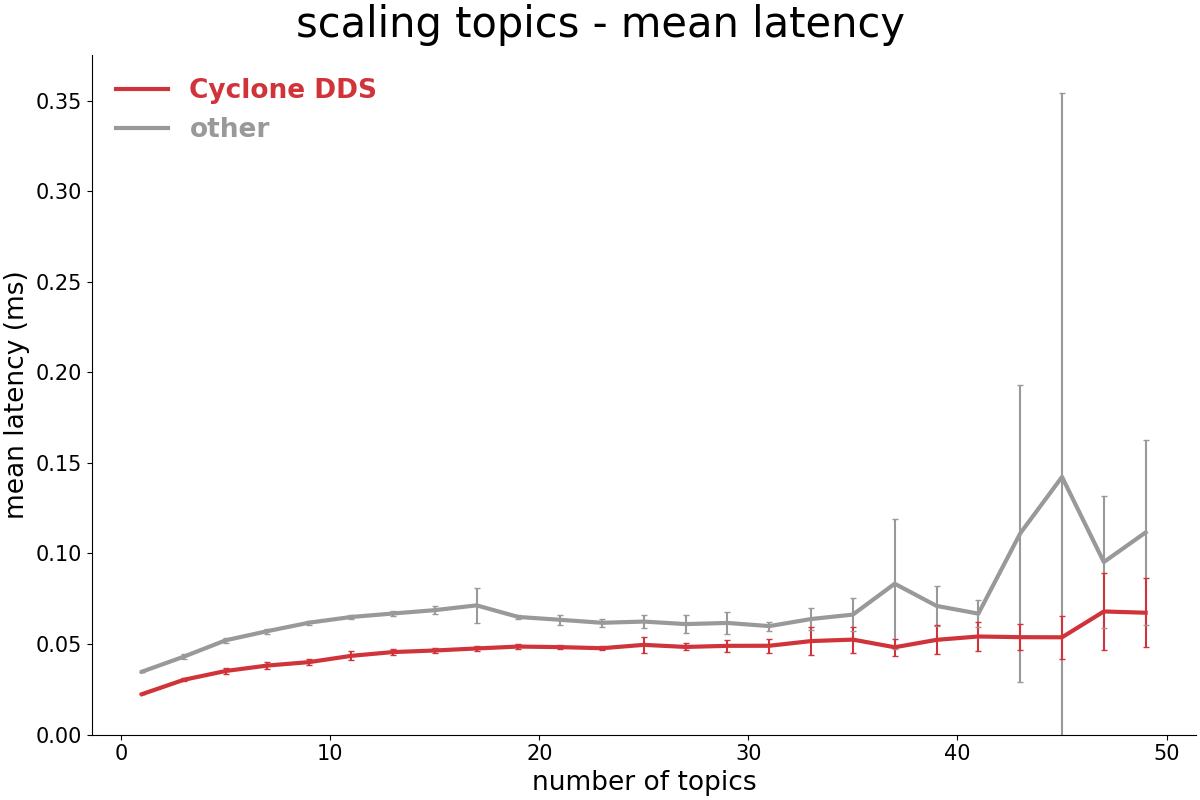

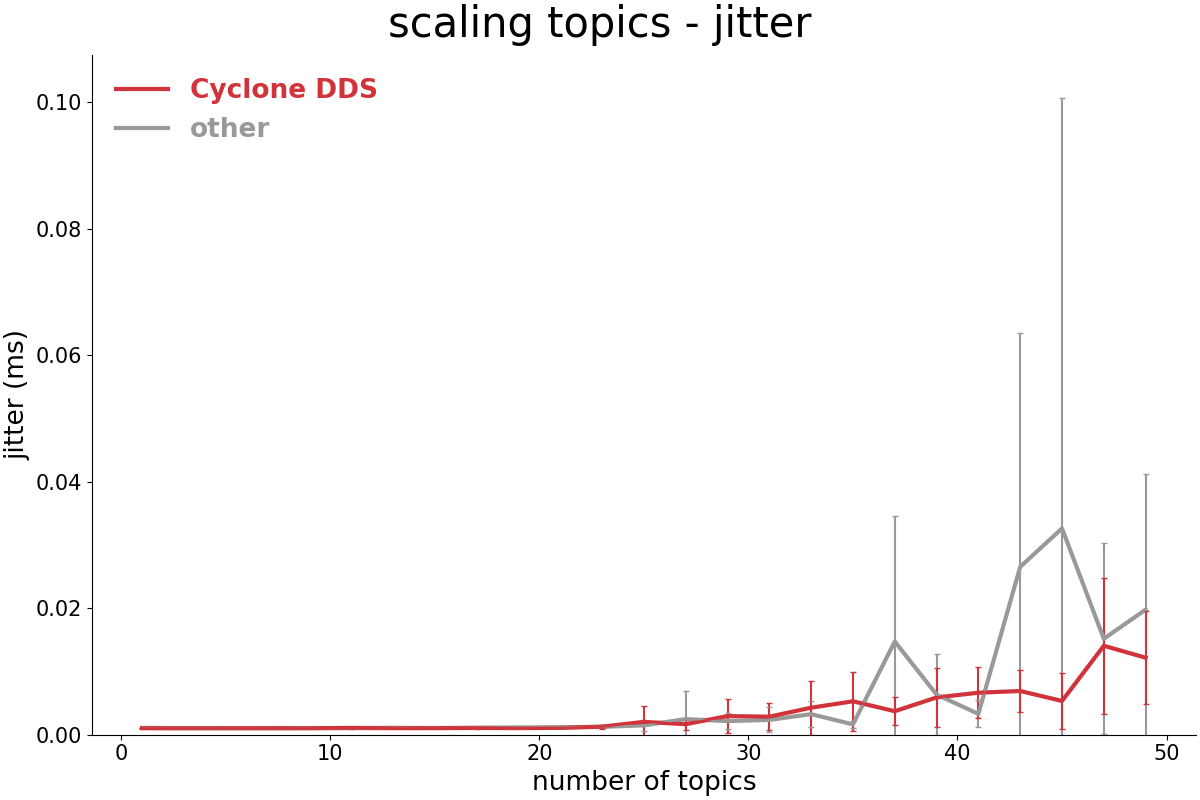

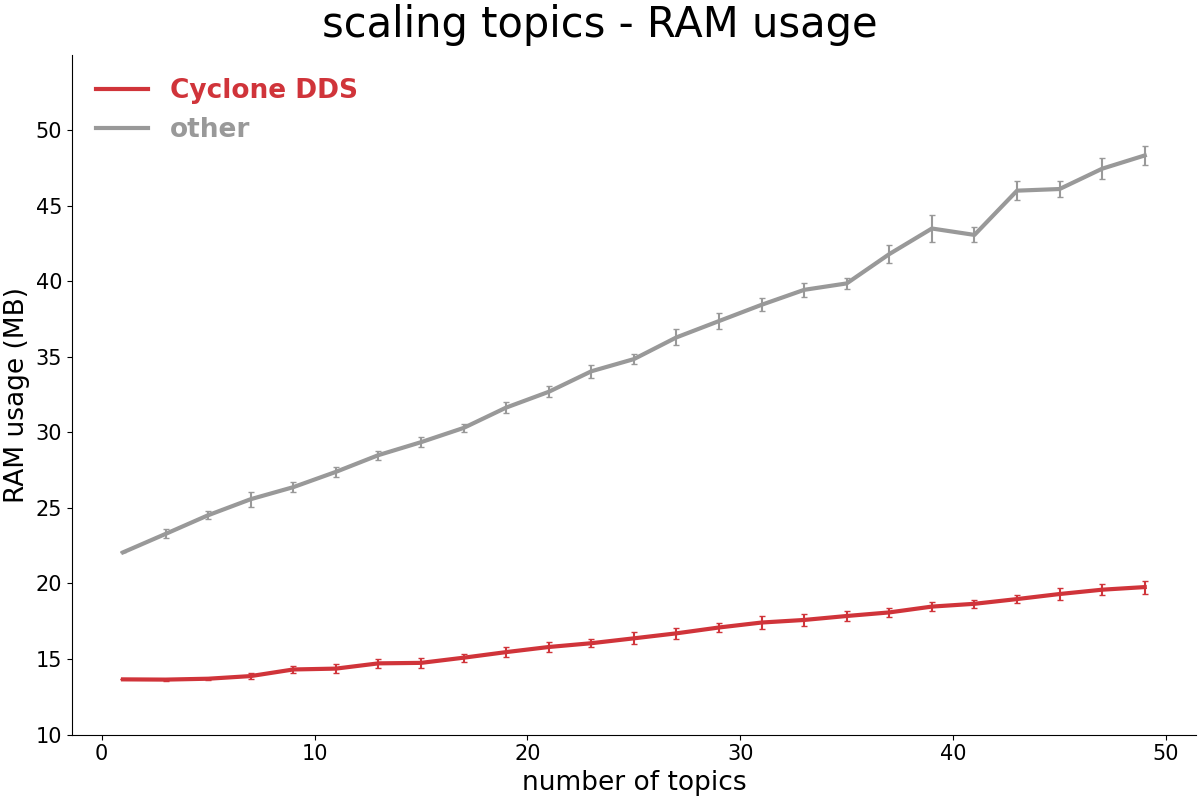

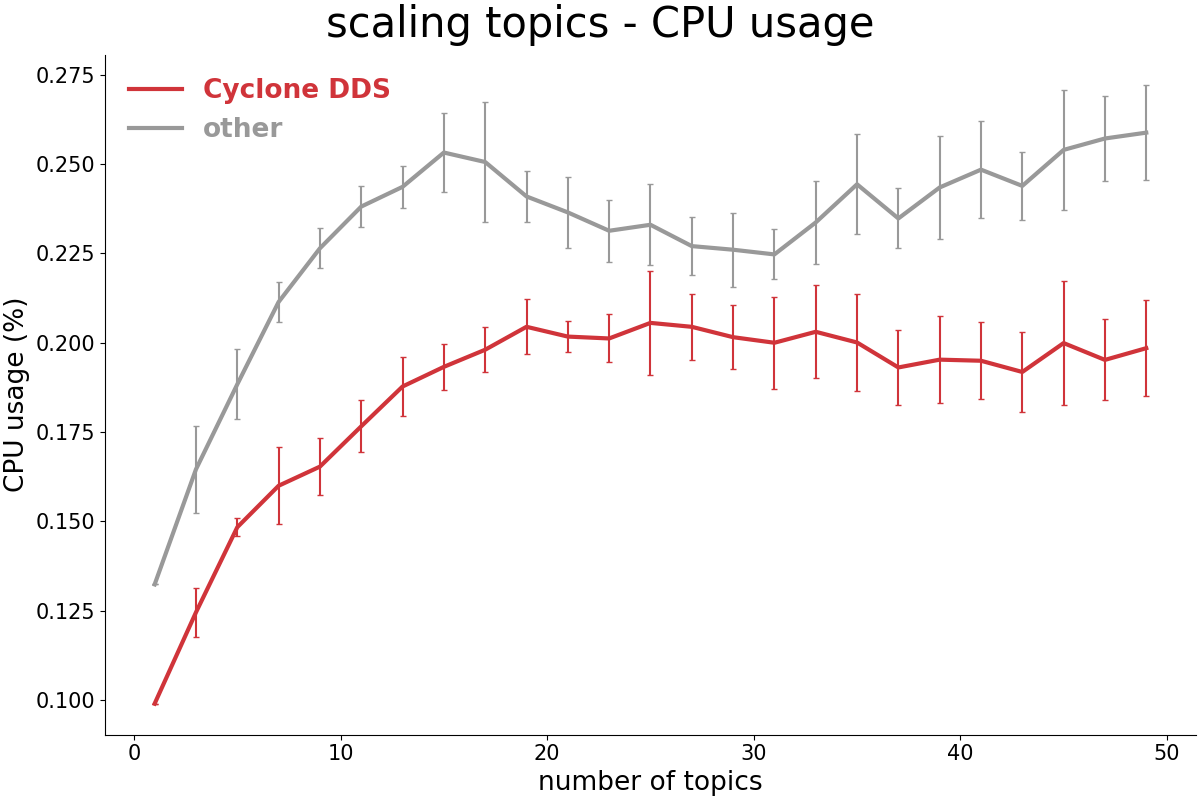

Without configuration, how does the implementation scale with the number of topics in the system?

Cyclone DDS scales well with the number of topics without configuration. These test findings are consistent with those reported to us by iRobot and Tractonomy. Shown below are the effects of scaling the number of topics and nodes with struct16 messages at 500 Hz on Ubuntu 20.04.3 amd64. How to instructions, test scripts, raw data, tabulated data, tabulation scripts, plotting scripts and detailed test result PDFs for every individual test are here.

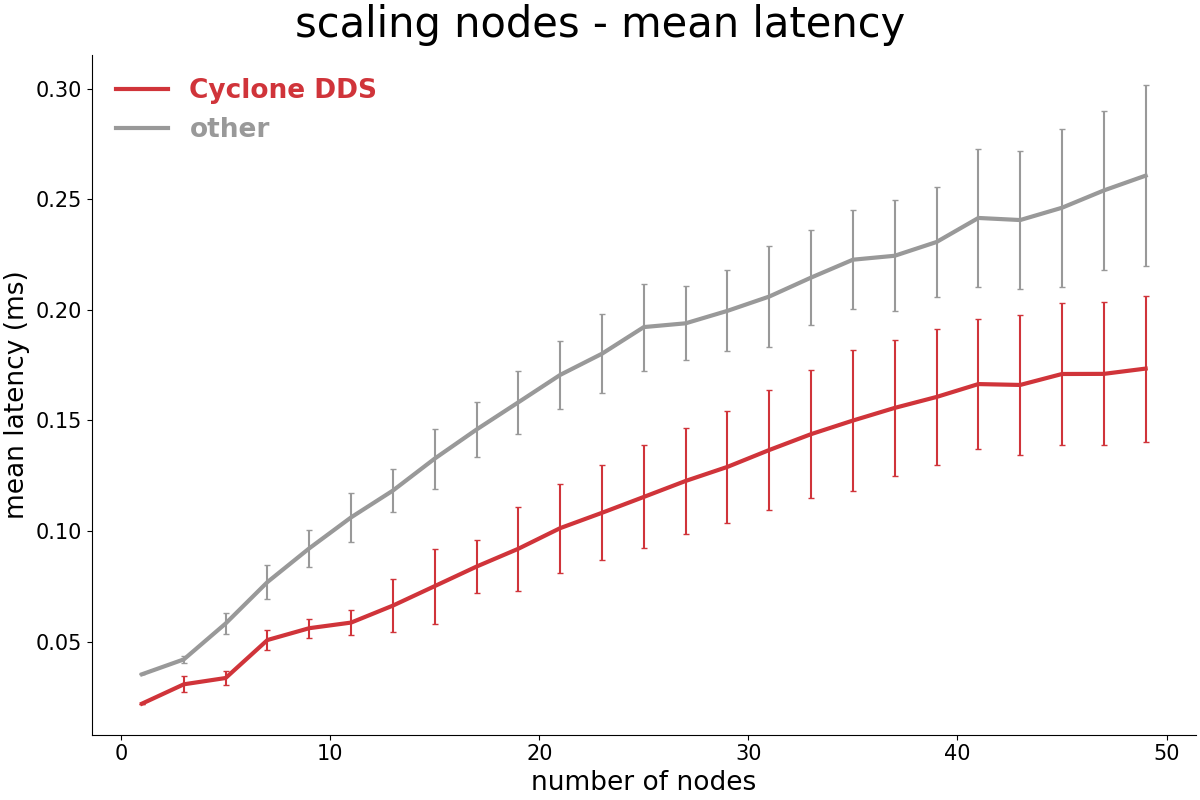

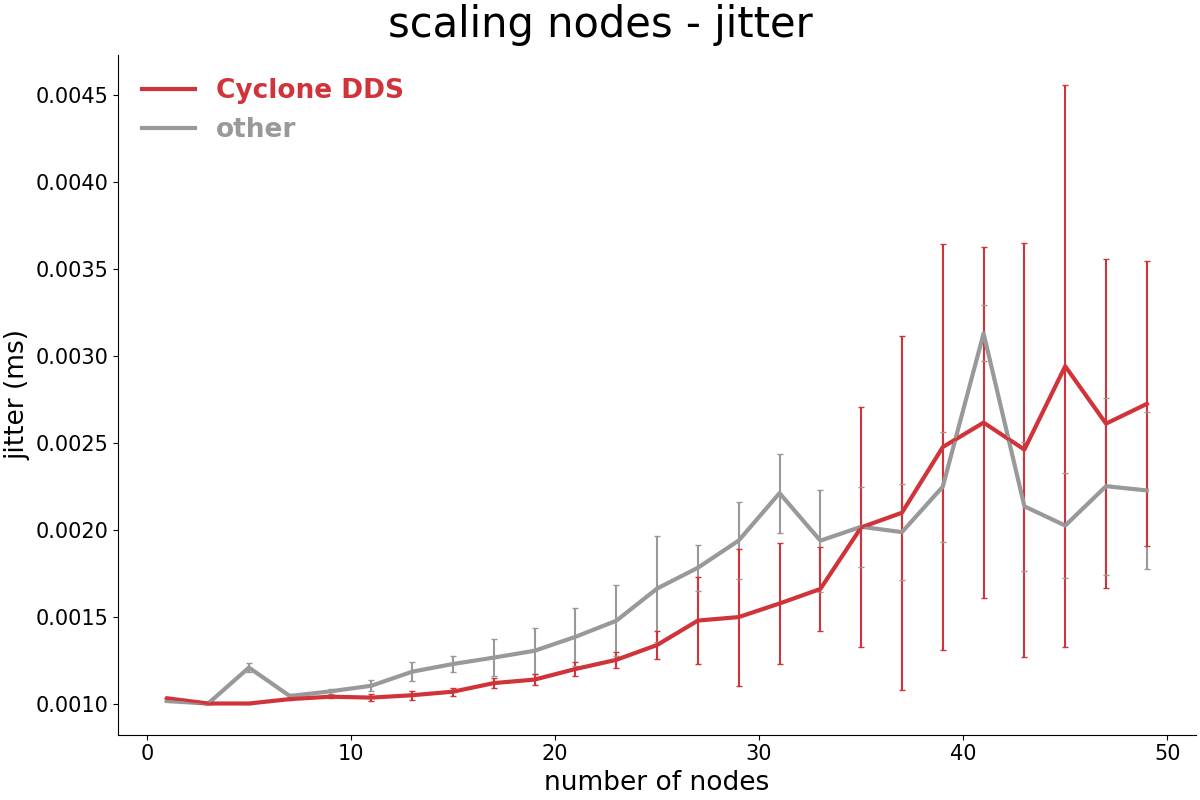

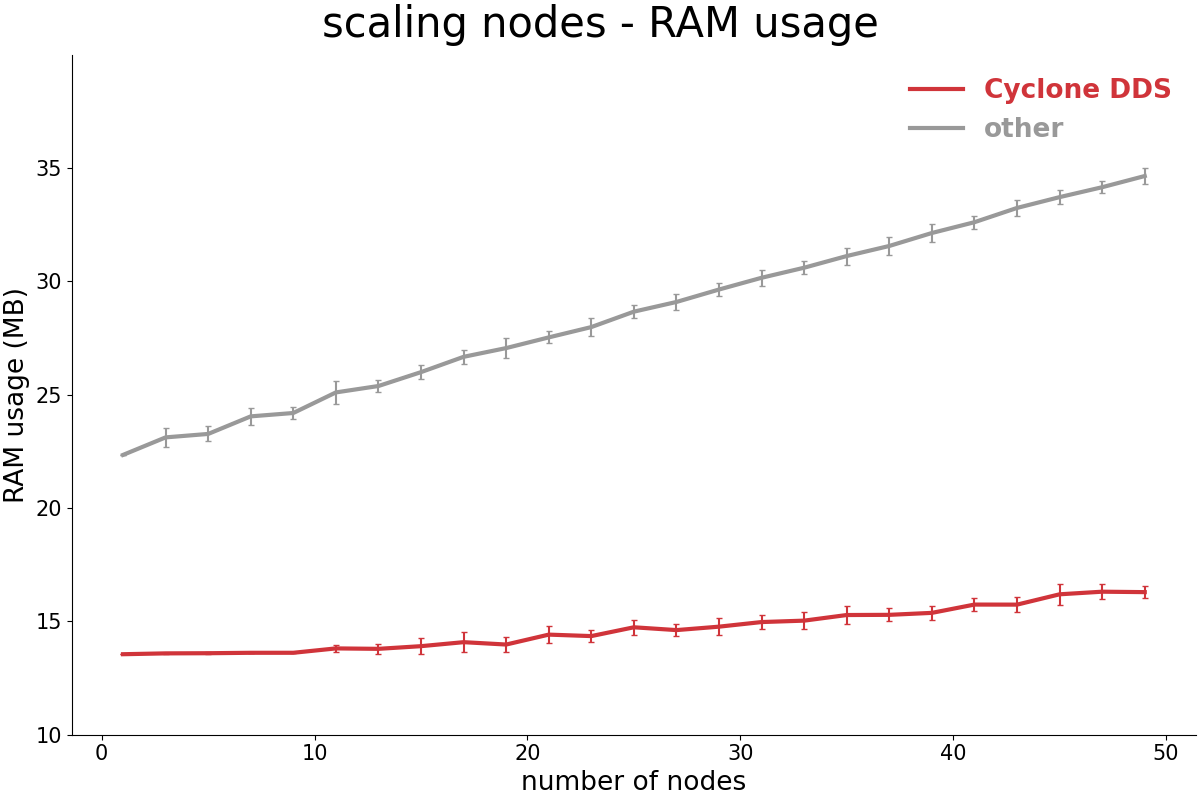

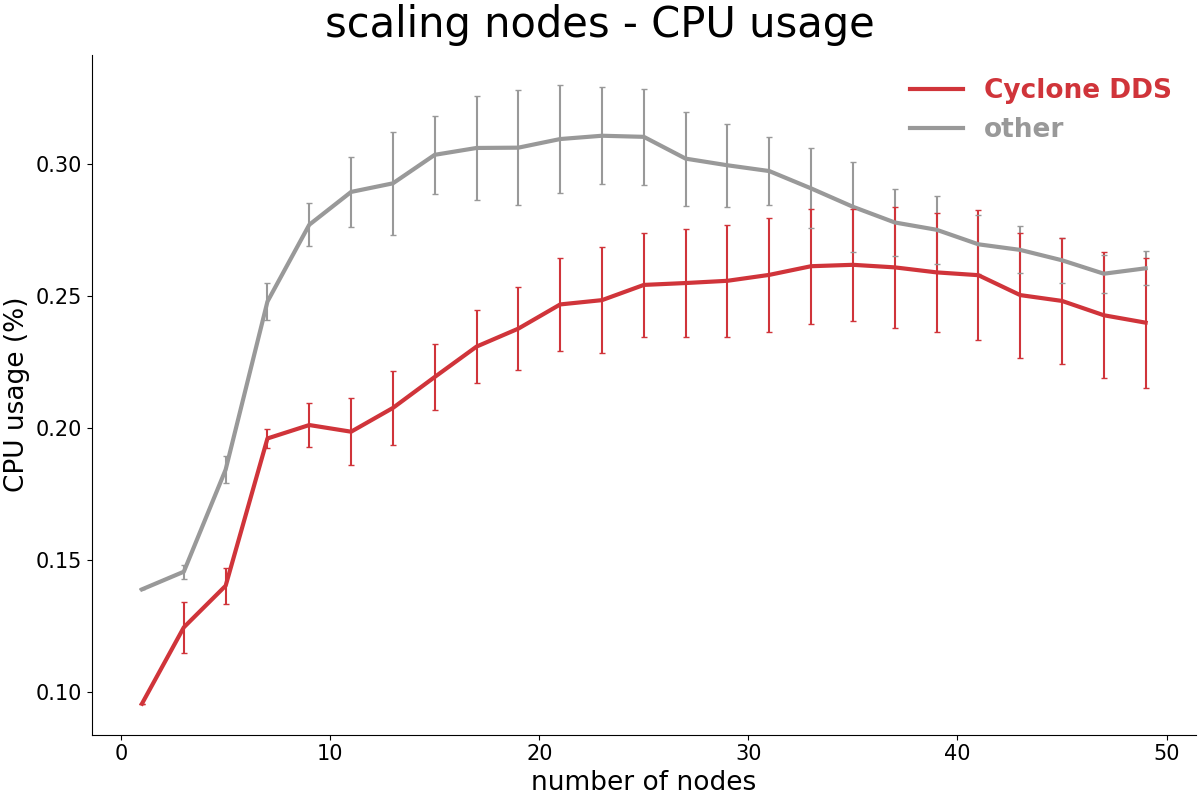

Without configuration, how does the implementation scale with the number of nodes in the system?

Cyclone DDS scales well with the number of nodes without configuration. In the charts below you see scaling the number of nodes with one topic per node with struct16 messages at 500 Hz on Ubuntu 20.04.3 amd64. How to instructions, test scripts, raw data, tabulated data, tabulation scripts, plotting scripts and detailed test result PDFs for every individual test are here.

General performance

Please provide benchmarks for inter-host, inter-process (with and without LoanedMessages), and intra-process (with and without LoanedMessages) throughput for both large and small message sizes, on both Linux and Windows.

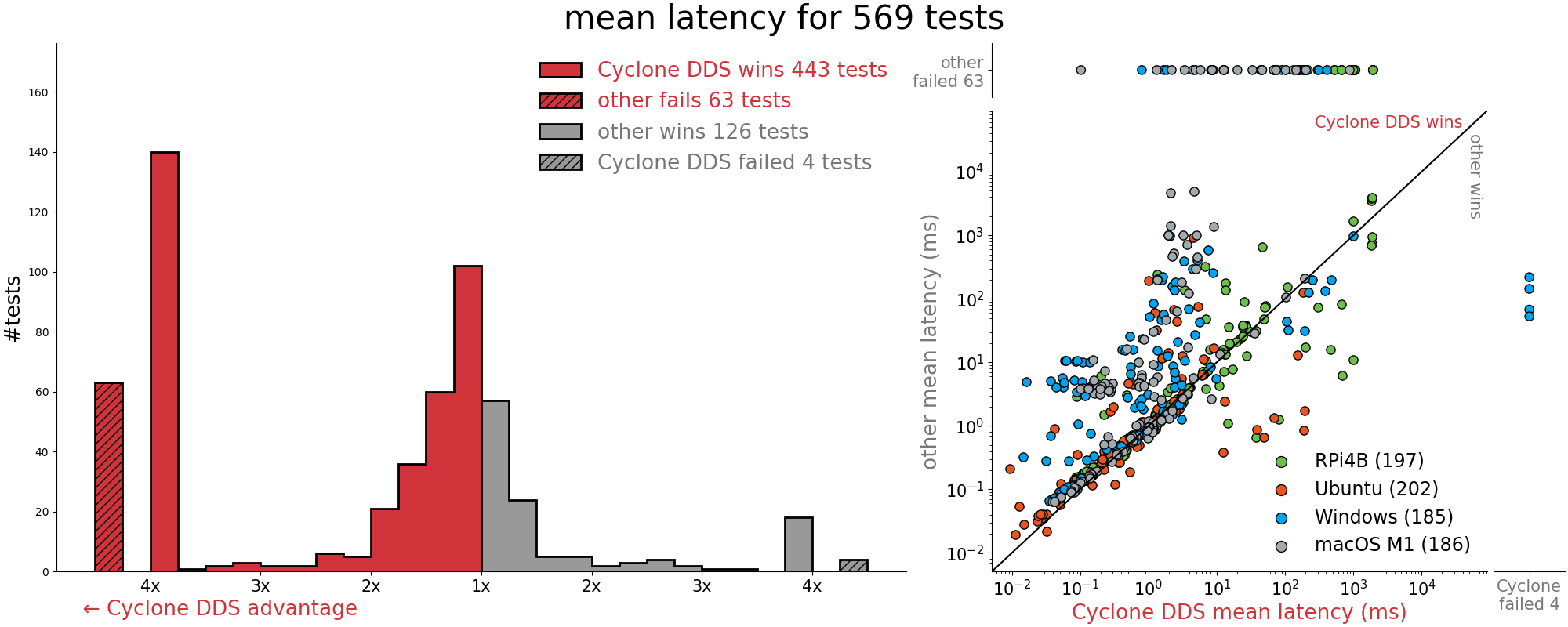

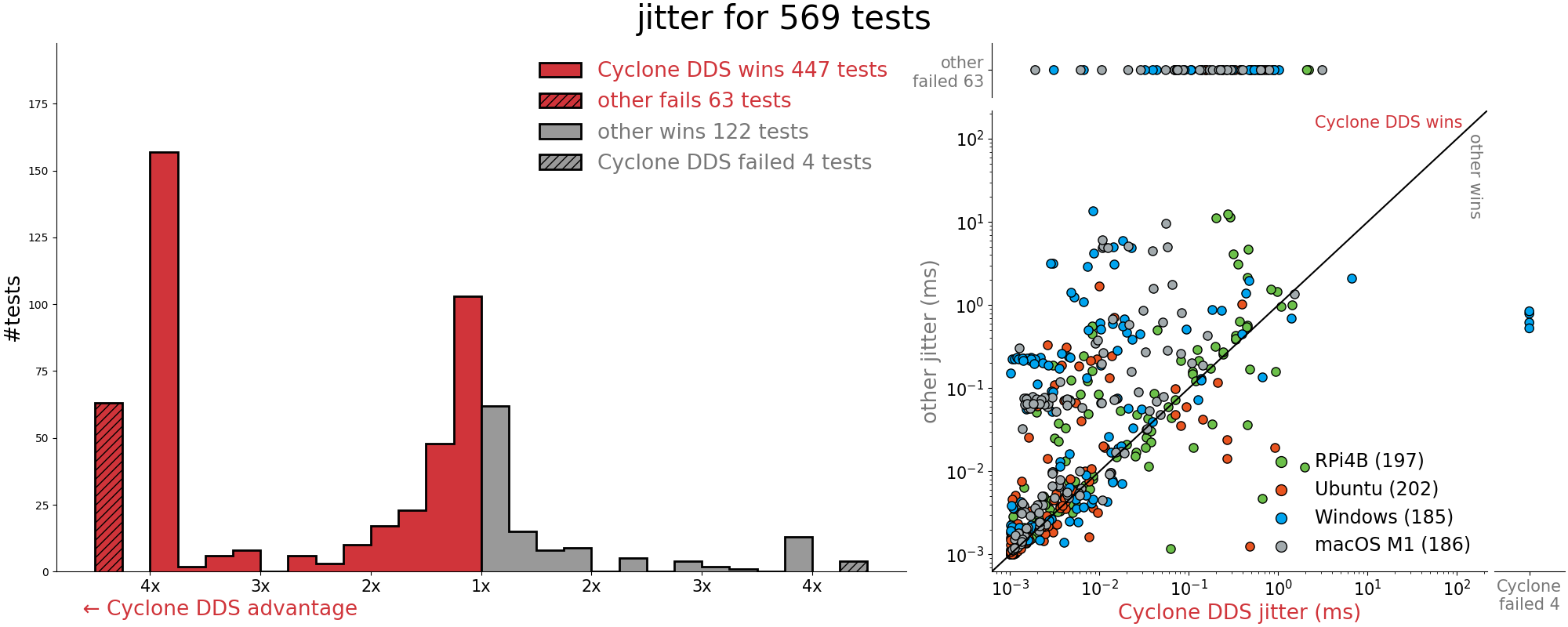

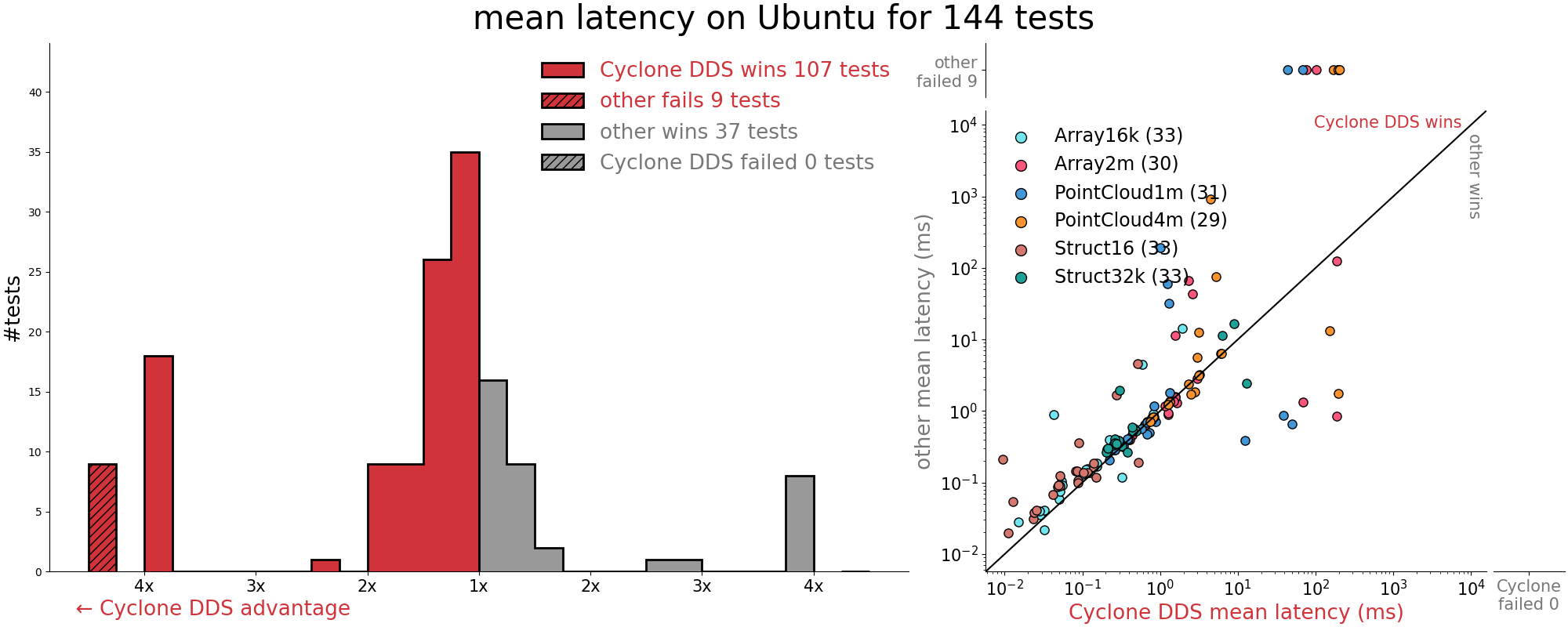

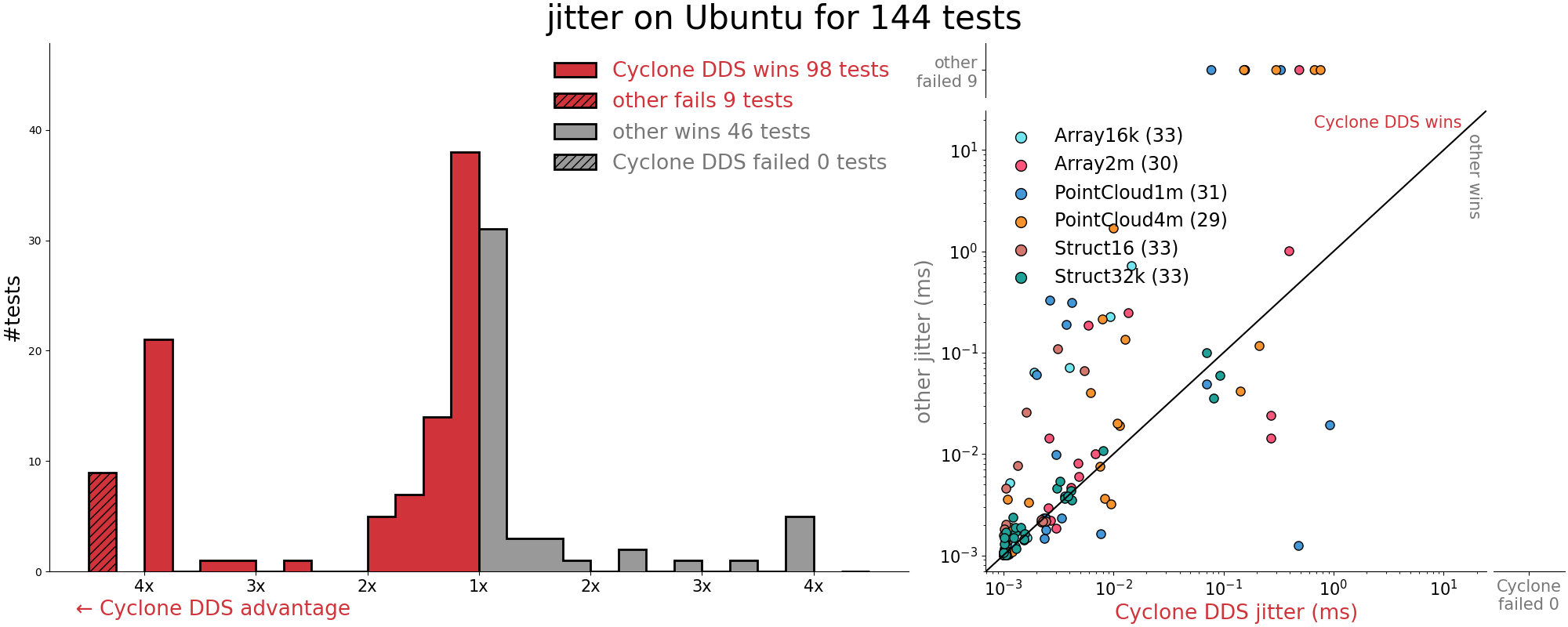

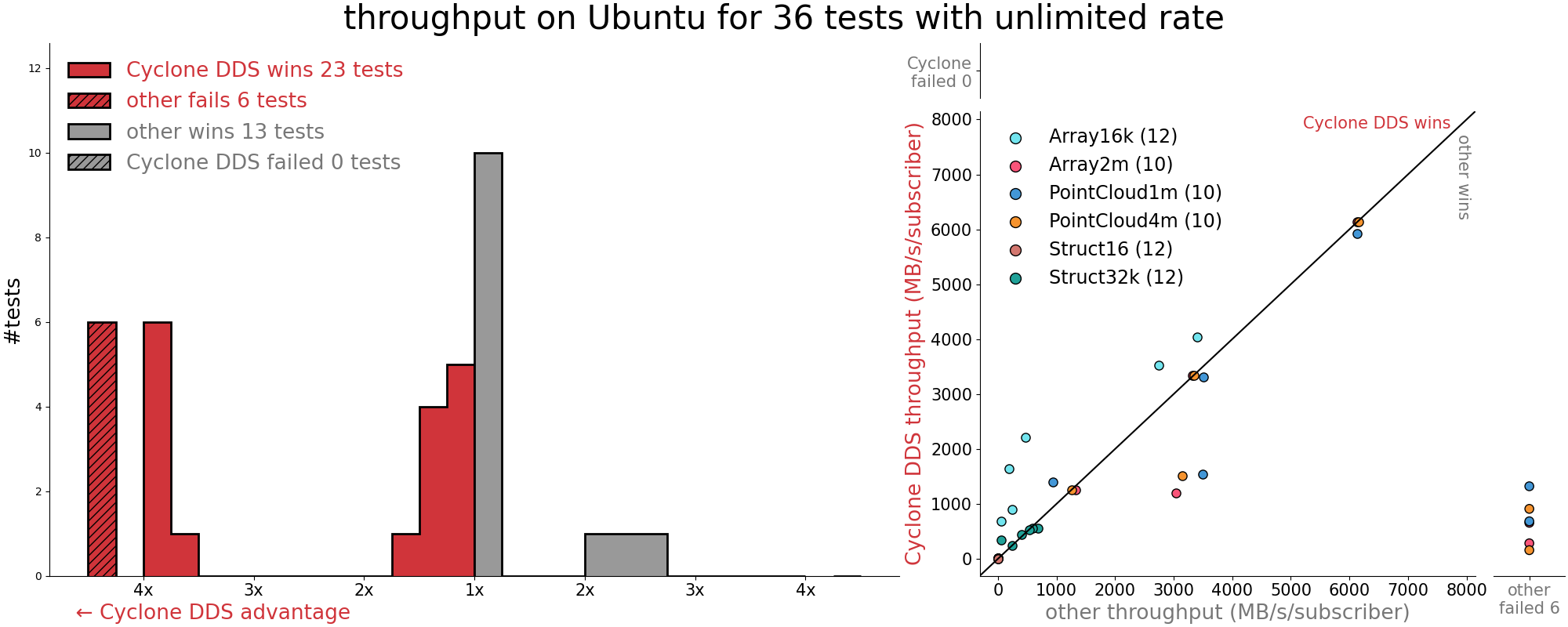

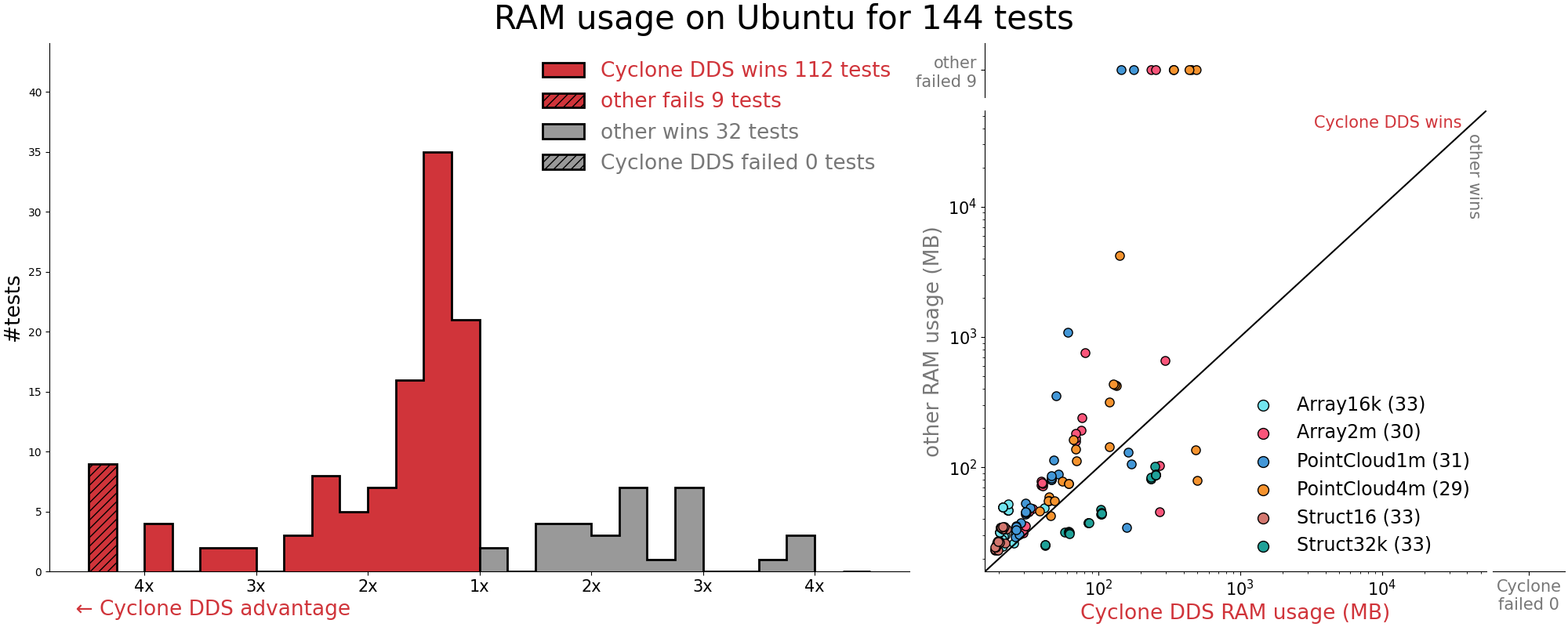

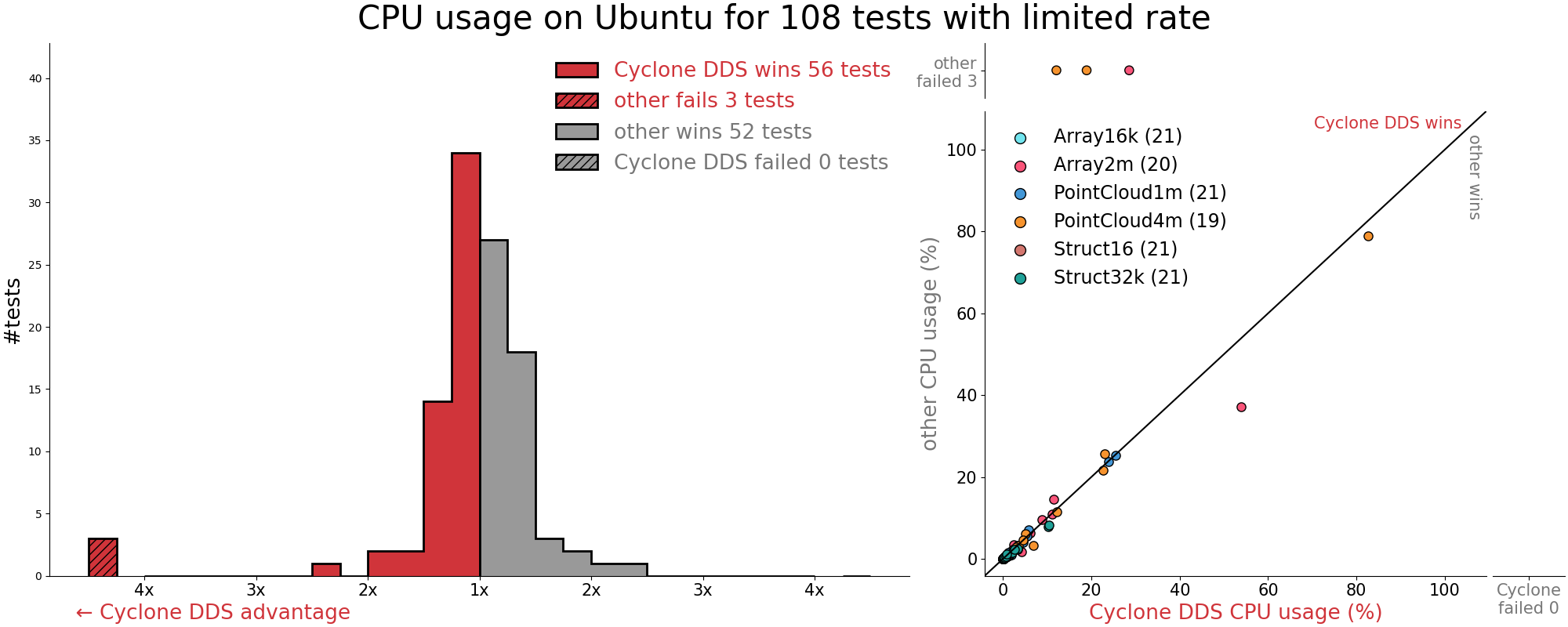

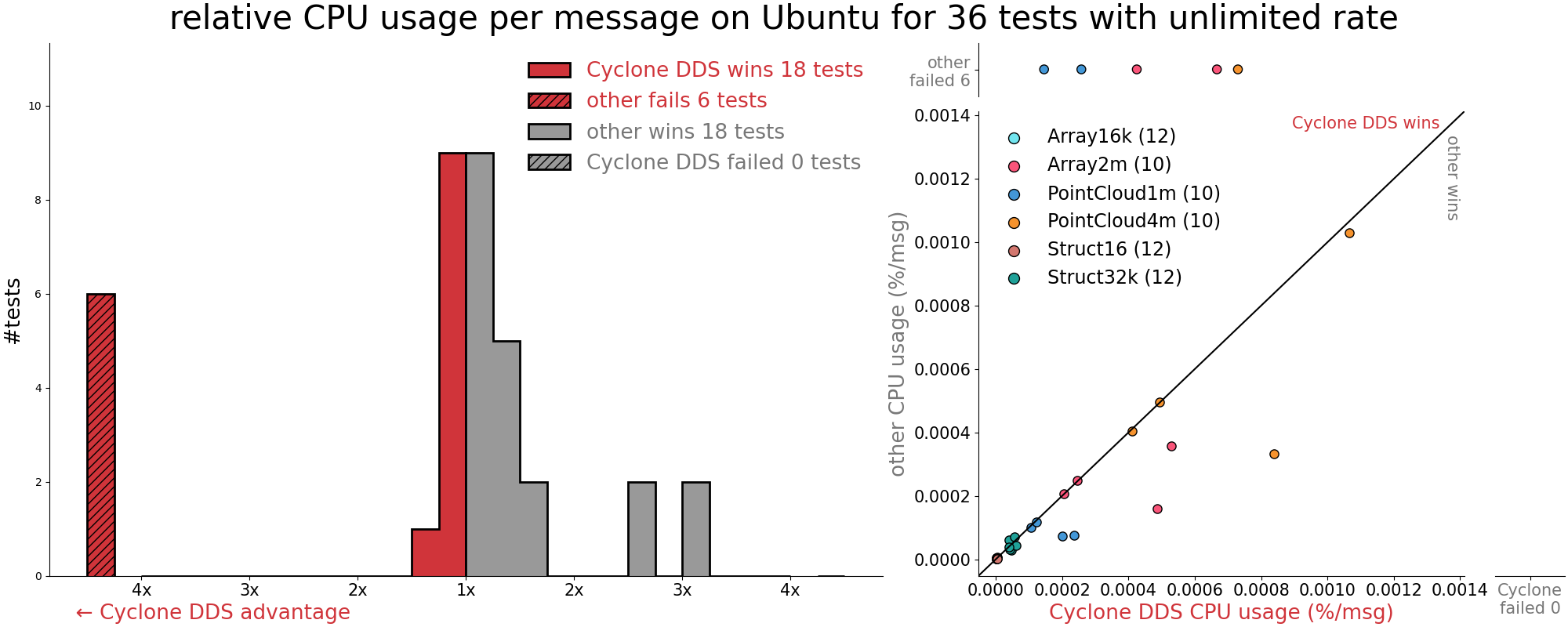

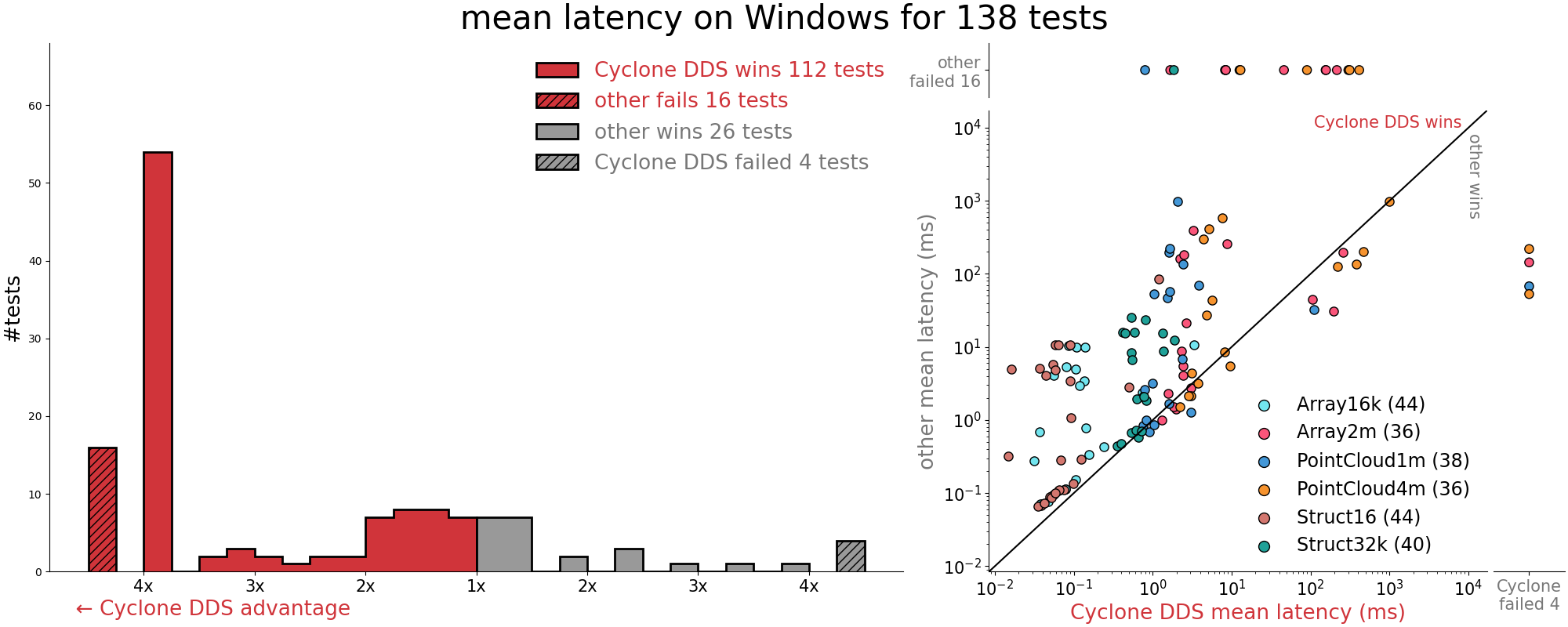

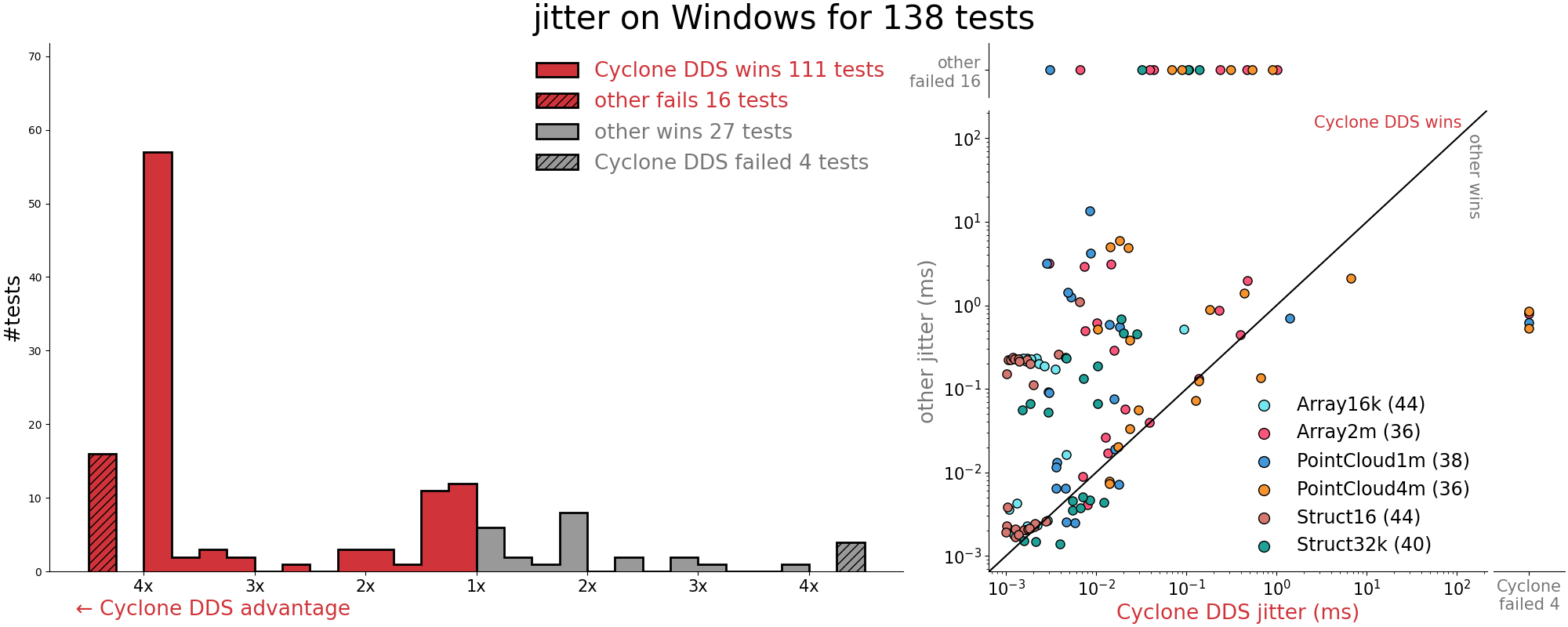

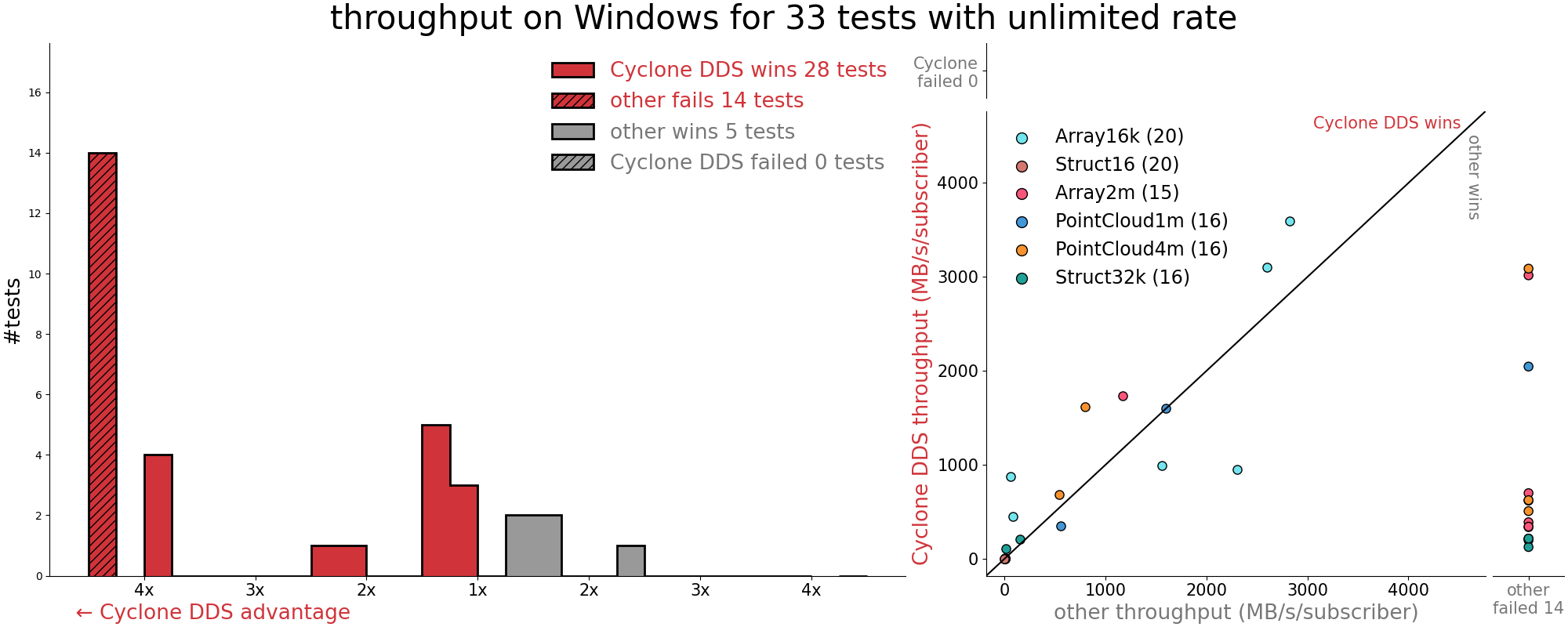

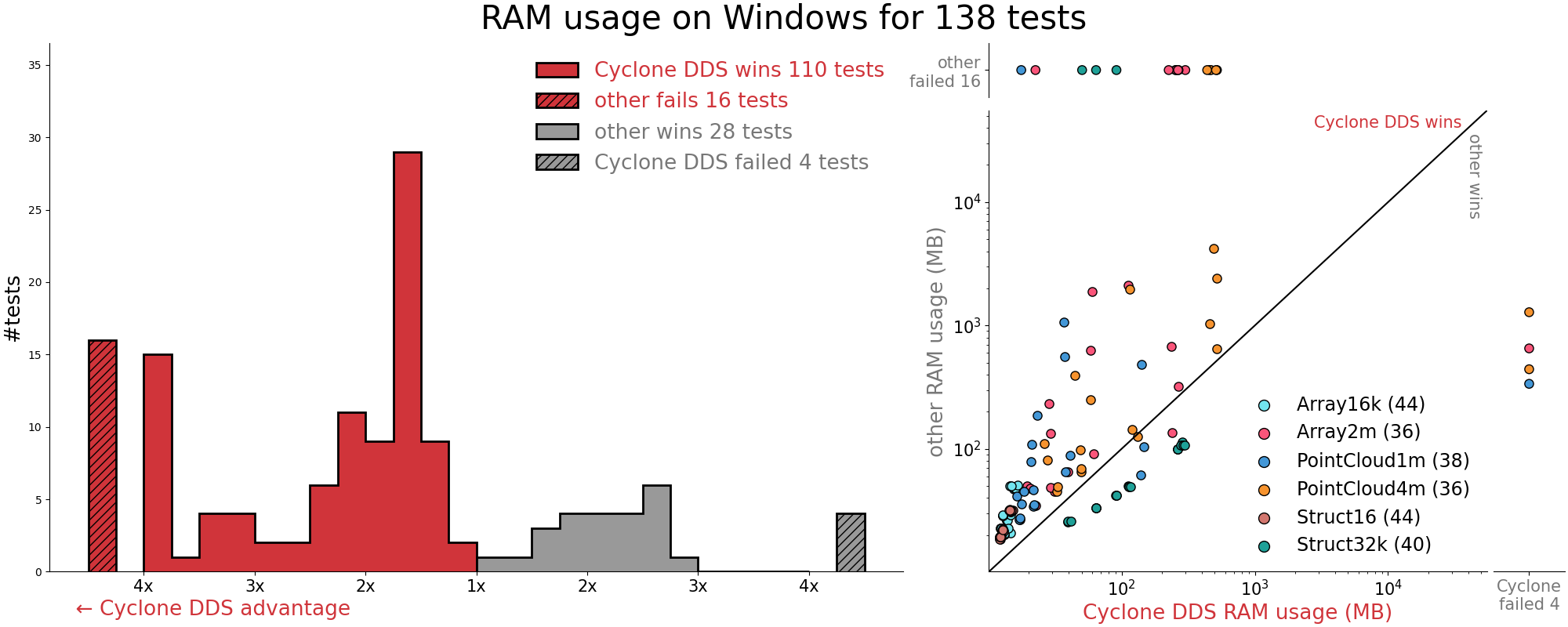

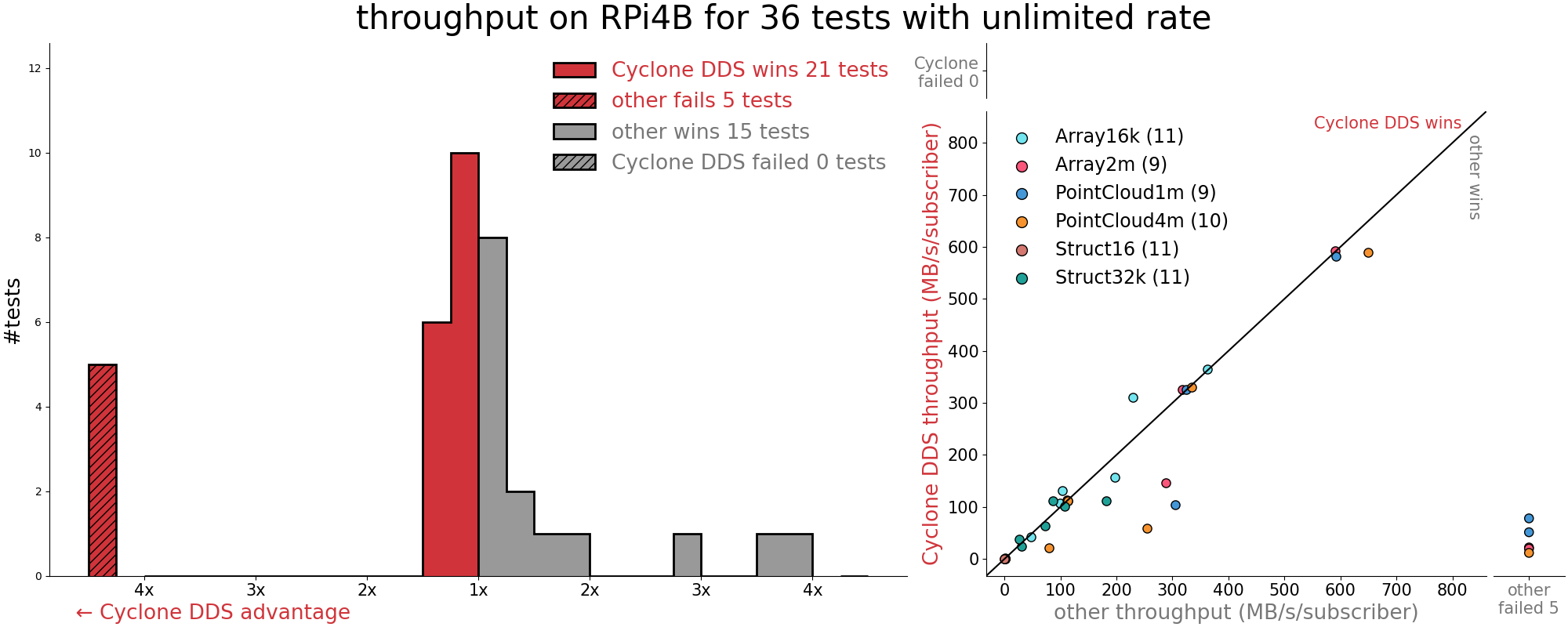

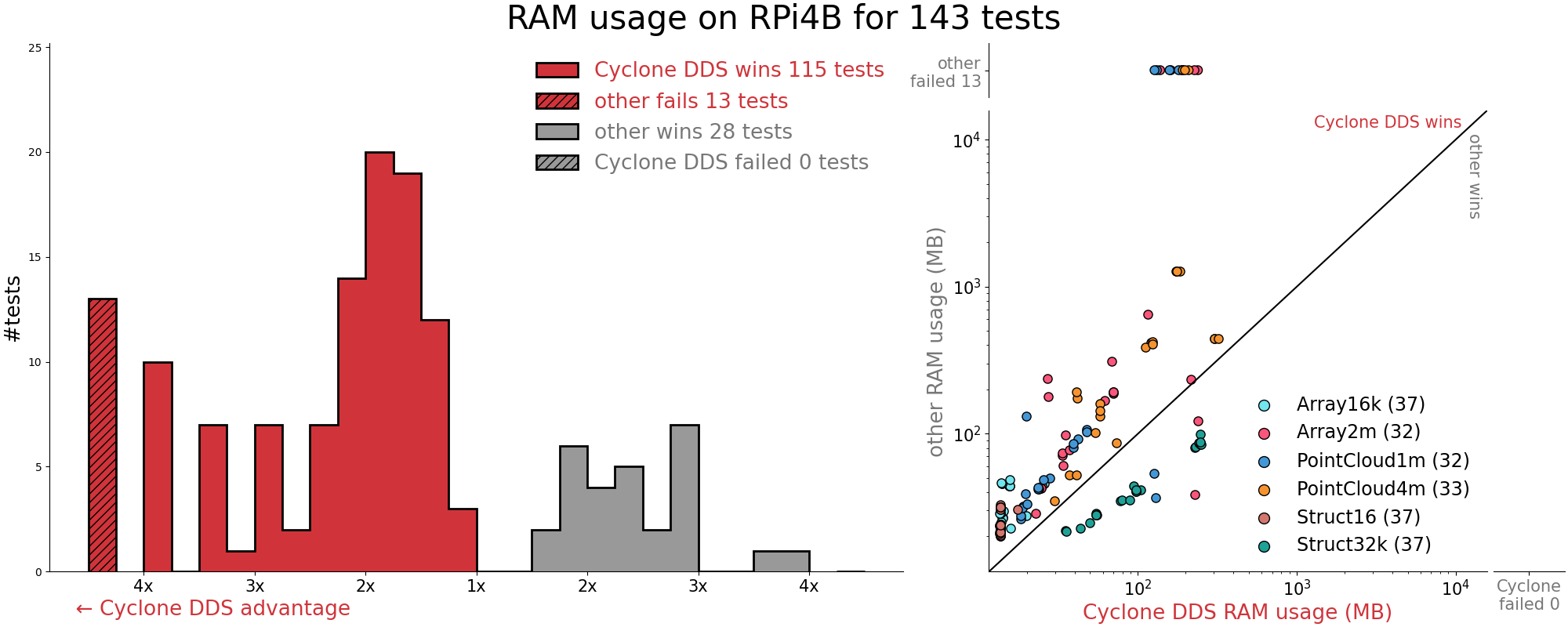

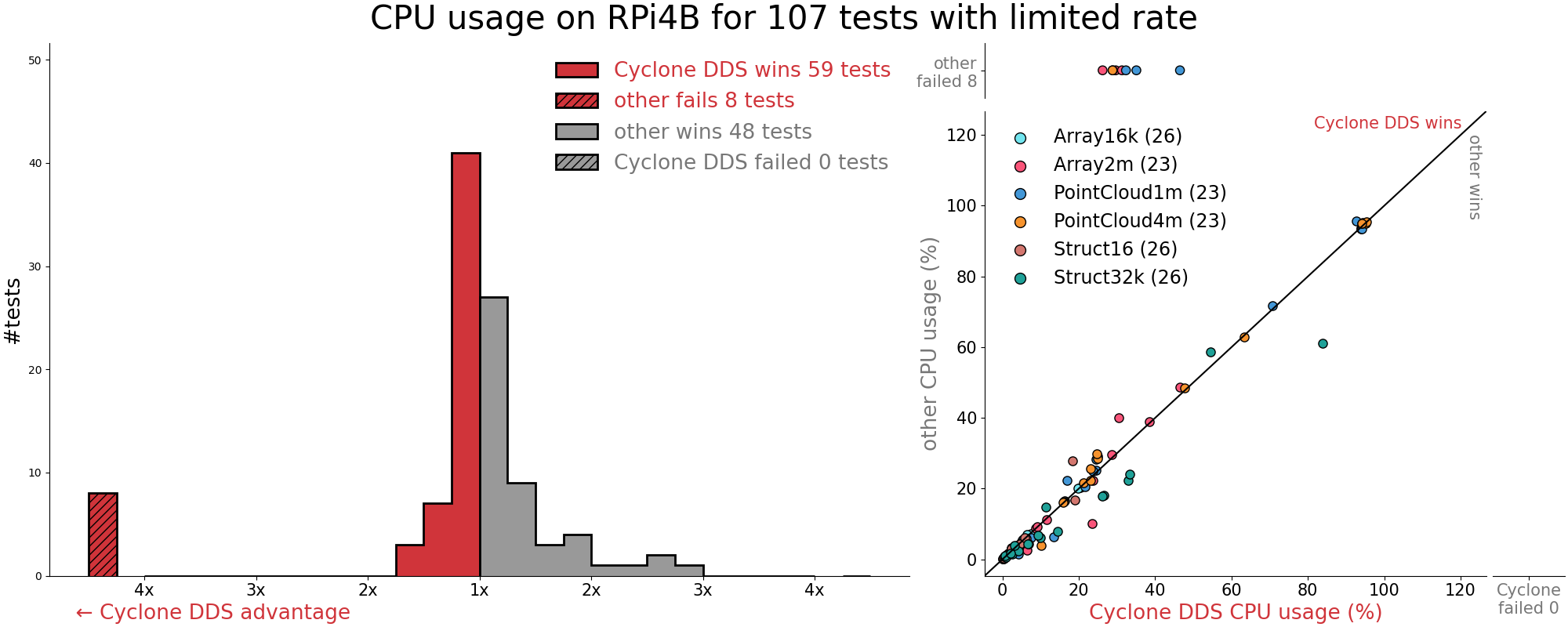

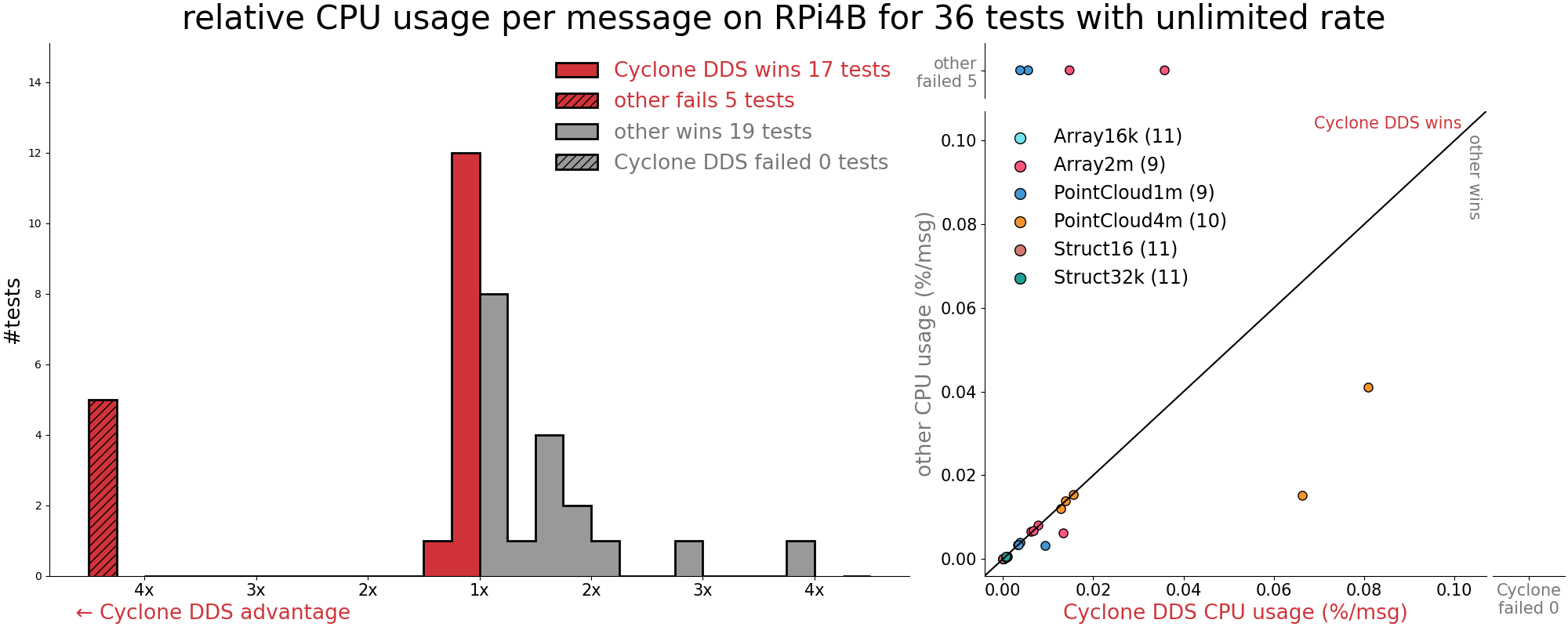

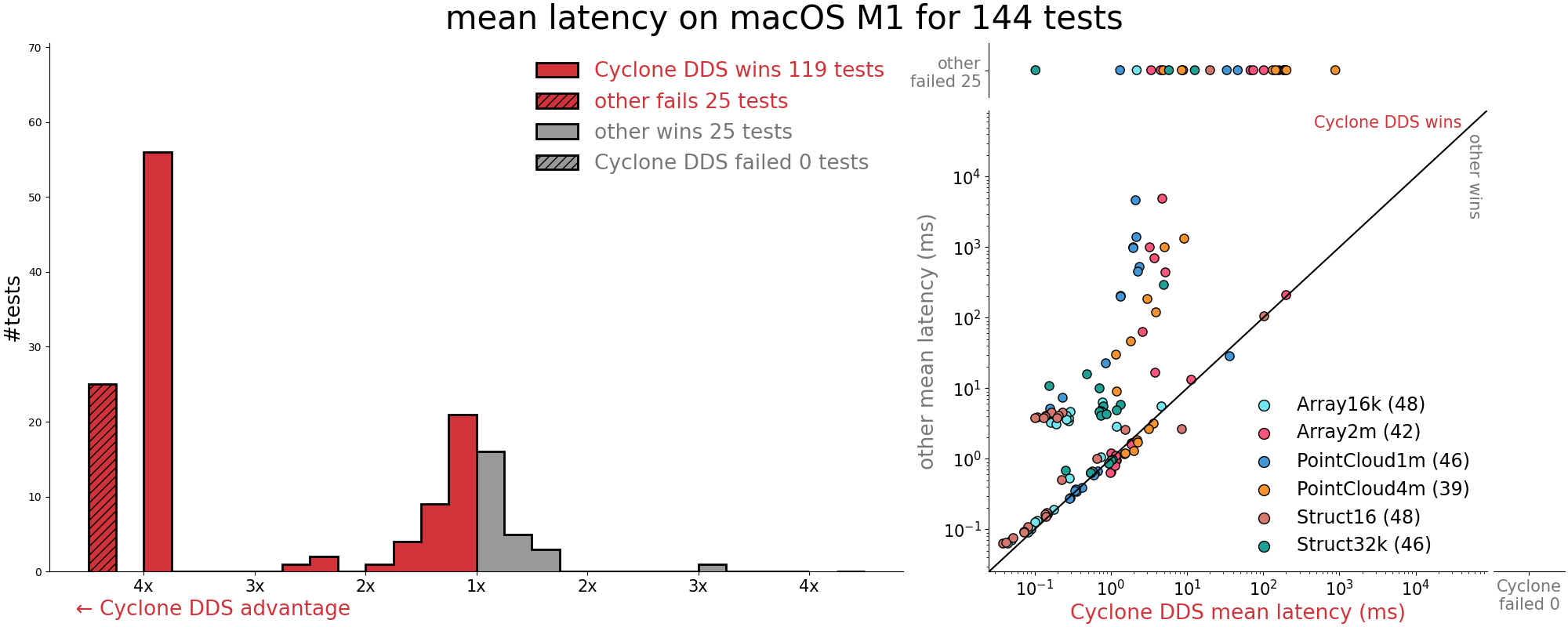

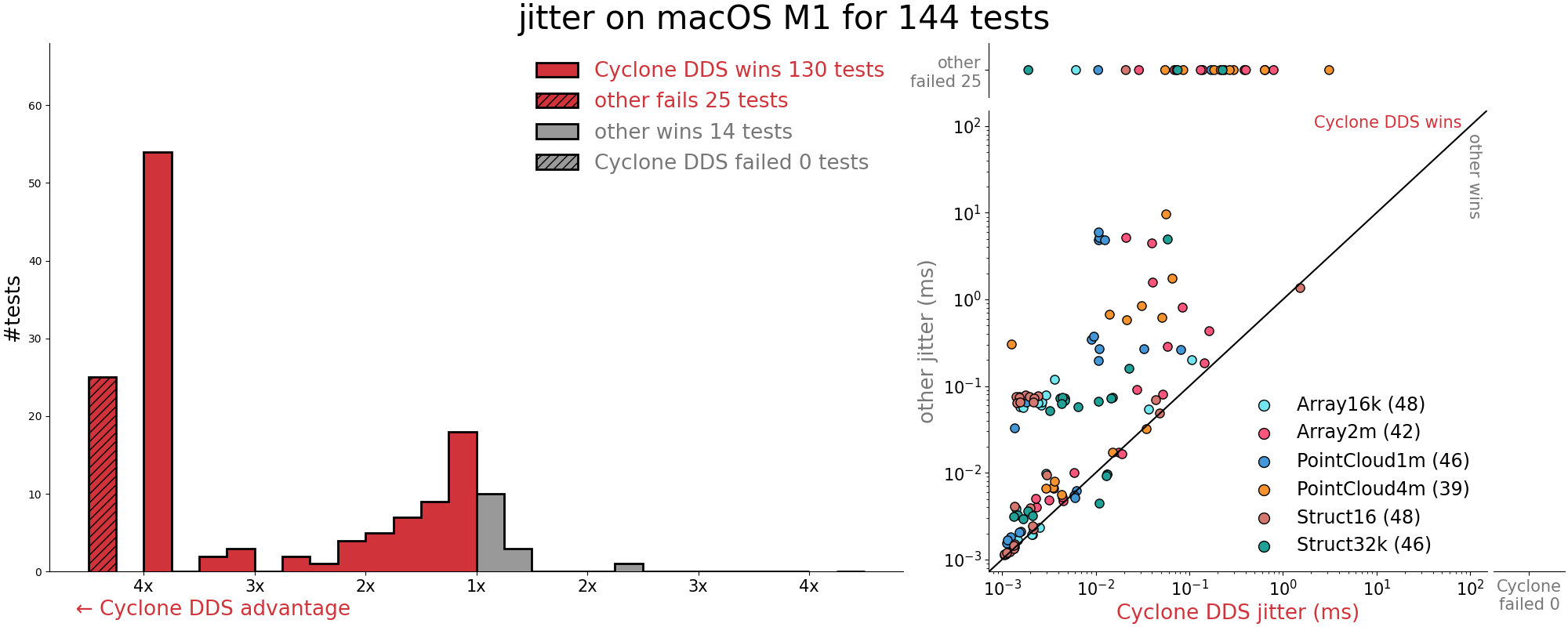

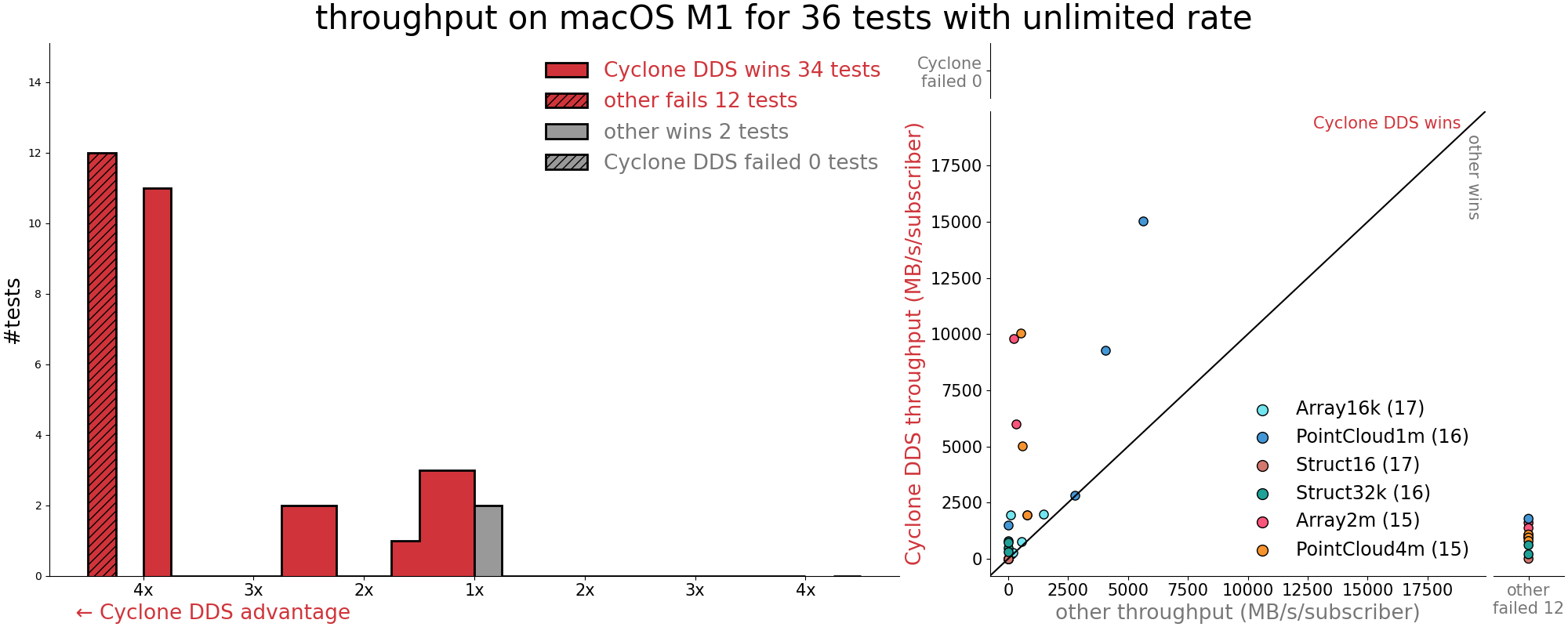

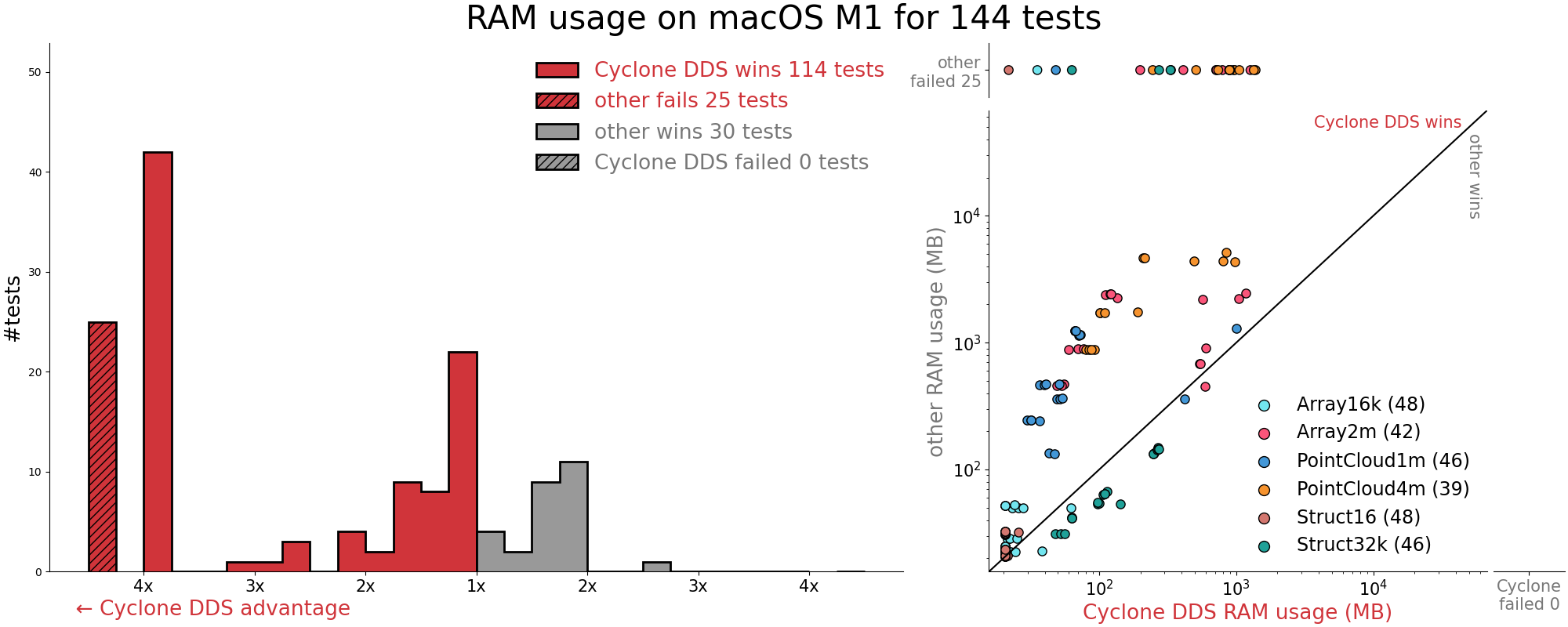

Following is the summary overview of the results for all tests, all platforms. How to instructions, test scripts, raw data, tabulated data, tabulation scripts, plotting scripts and detailed test result PDFs for every individual test are here. Also see Appendix B: Performance Summary Per Platform.

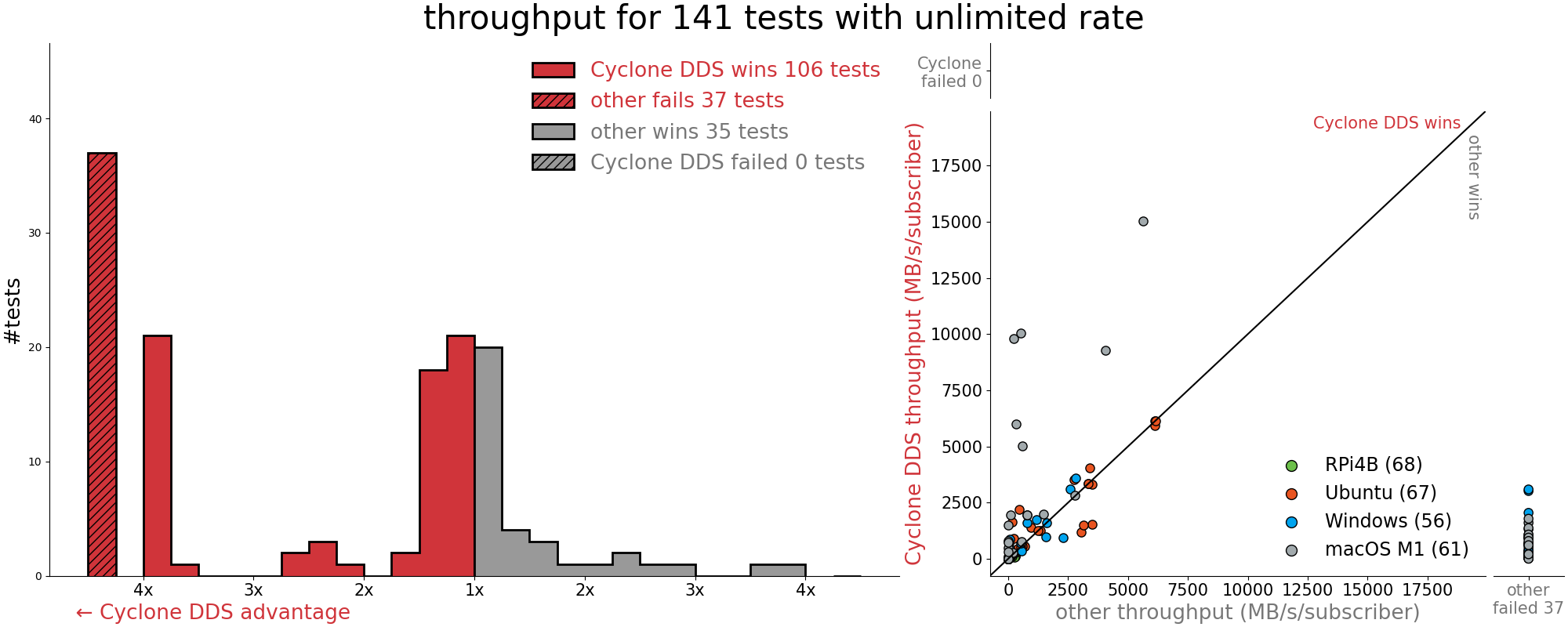

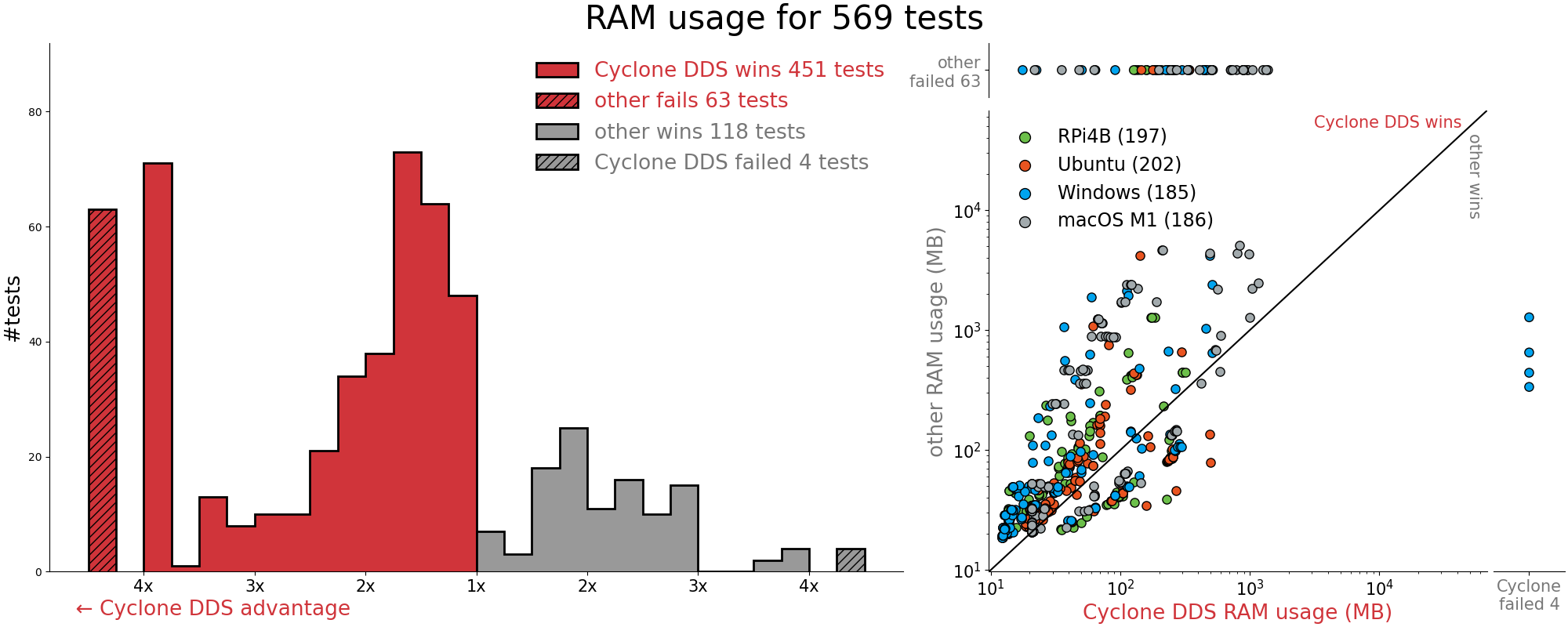

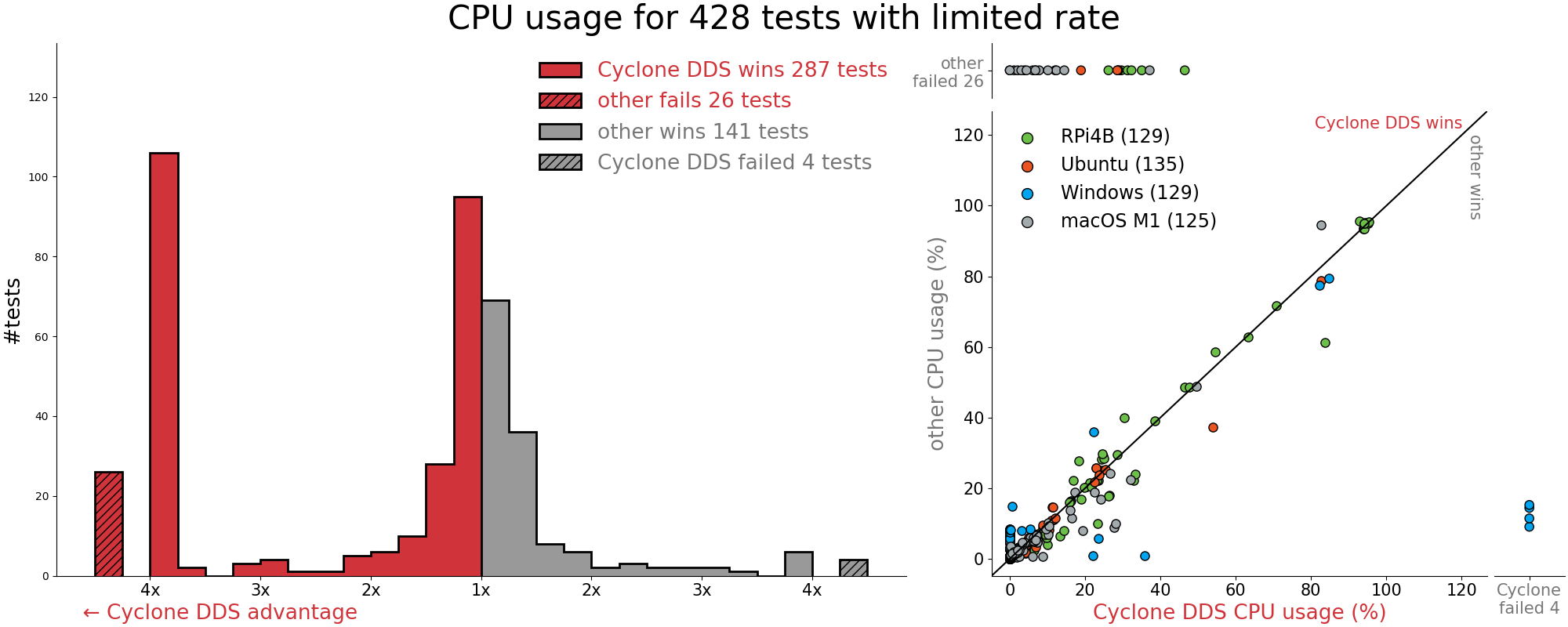

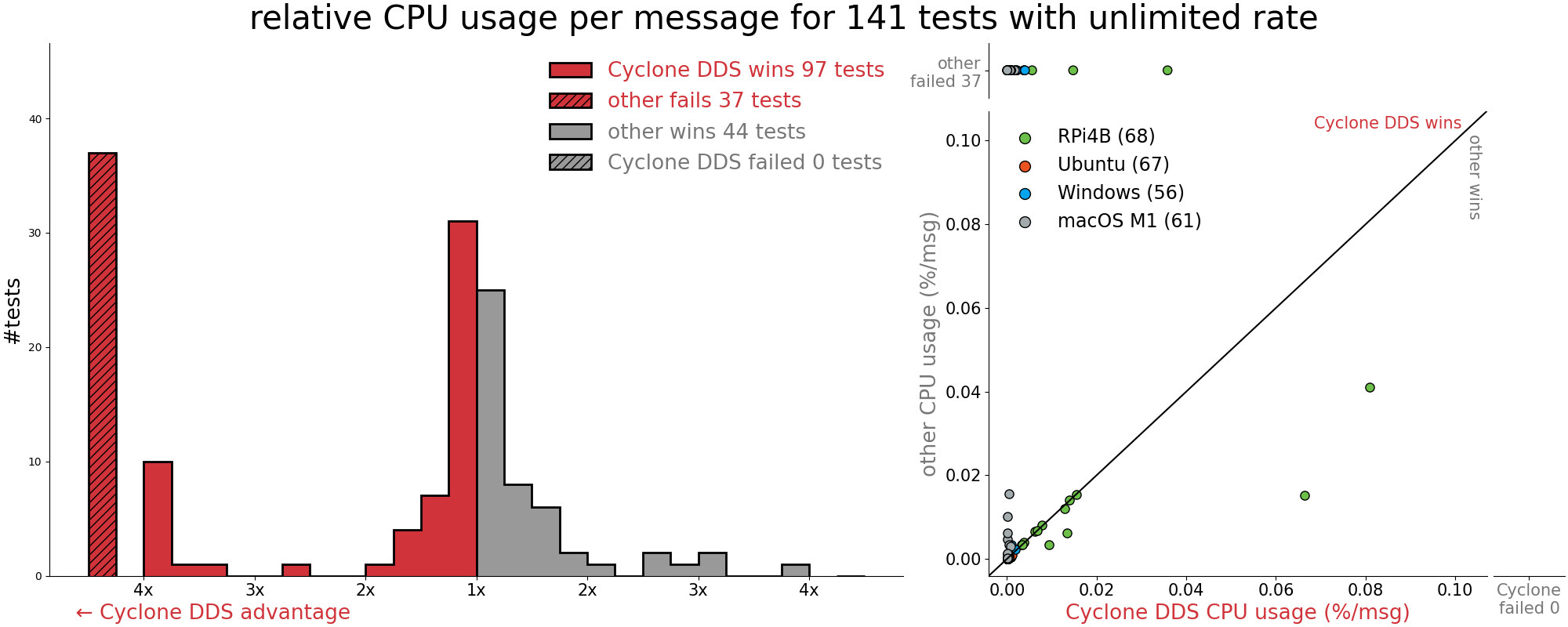

For 569 tests with varying message types, sizes, rates, subscribers, QoS, and platforms the result was the following (also see the graphs below the bullet list):

- Cyclone DDS has lower latency in 443 tests

- Cyclone DDS has lower latency jitter in 447 tests

- Cyclone DDS has higher throughput in 106 of 141 tests, with the other middleware failing 37 of the 141 throughput tests

- Cyclone DDS has lower memory utilization in 451 tests

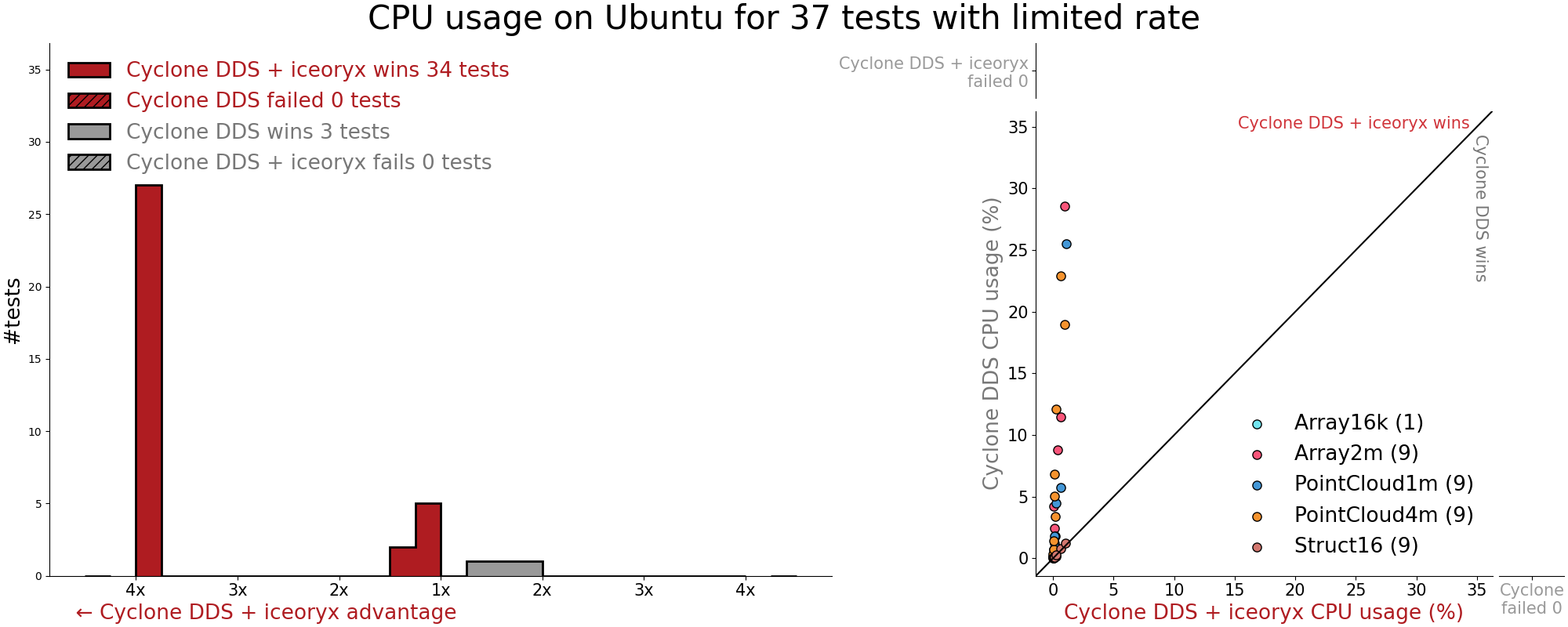

- Cyclone DDS has lower CPU utilization in 484 tests (387 limited + 97 unlimited)

- Cyclone DDS scaled better by number of topics and nodes

- Cyclone DDS failed 4 tests while the other middleware failed 63 tests

- The 4 tests that Cyclone DDS failed were on Windows 10 with the default network buffer size, large messages, and 500Hz
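The counts in the list above can be turned into percentages with a few lines of Python. This is only a convenience sketch: it assumes that the latency, jitter, memory and CPU counts are all out of the 569 total tests, and that the throughput count is out of the 141 throughput tests. That is our reading of the text, not something the tabulated data has been checked against here.

```python
TOTAL_TESTS = 569        # total number of tests stated above
THROUGHPUT_TESTS = 141   # number of throughput tests stated above

# metric -> (tests where Cyclone DDS did better, assumed denominator)
results = {
    "lower latency": (443, TOTAL_TESTS),
    "lower latency jitter": (447, TOTAL_TESTS),
    "higher throughput": (106, THROUGHPUT_TESTS),
    "lower memory utilization": (451, TOTAL_TESTS),
    "lower CPU utilization": (484, TOTAL_TESTS),
}


def share(count, total):
    """Return count as a percentage of total."""
    return 100.0 * count / total


for metric, (count, total) in results.items():
    print(f"{metric}: {count}/{total} = {share(count, total):.1f}%")
```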

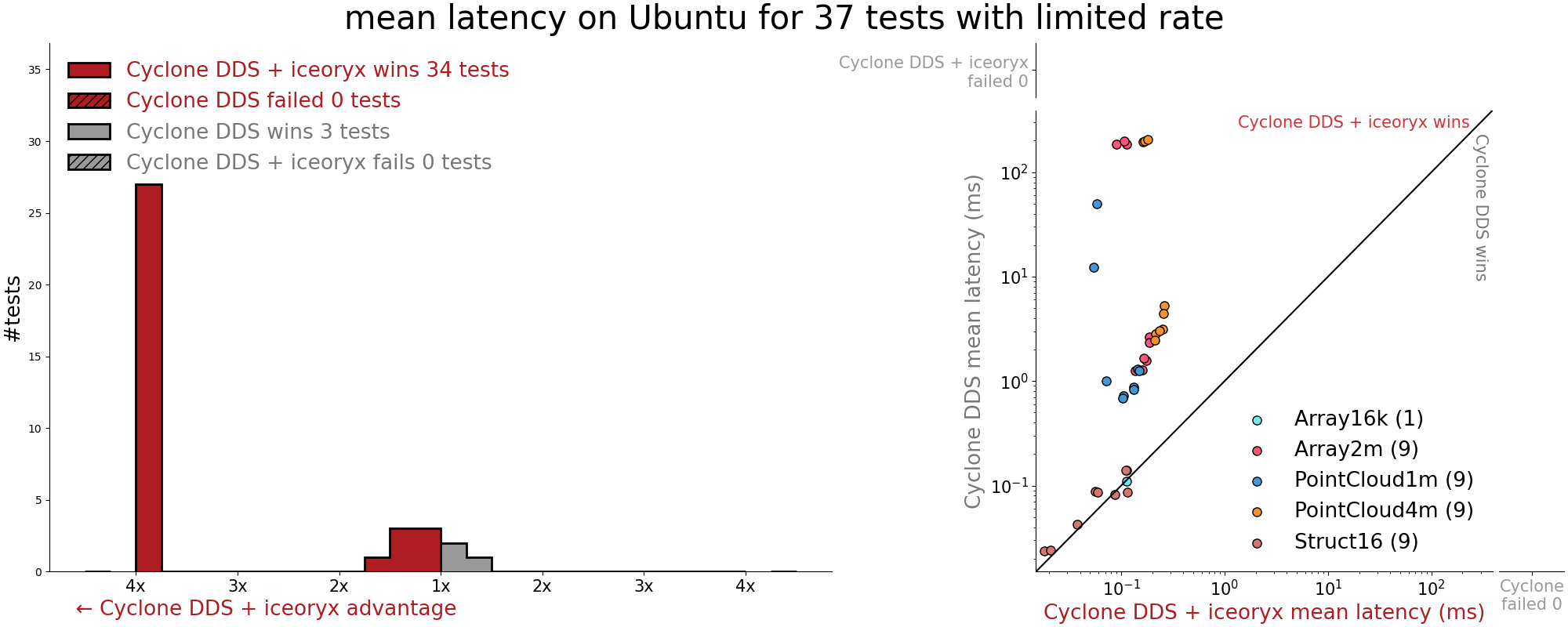

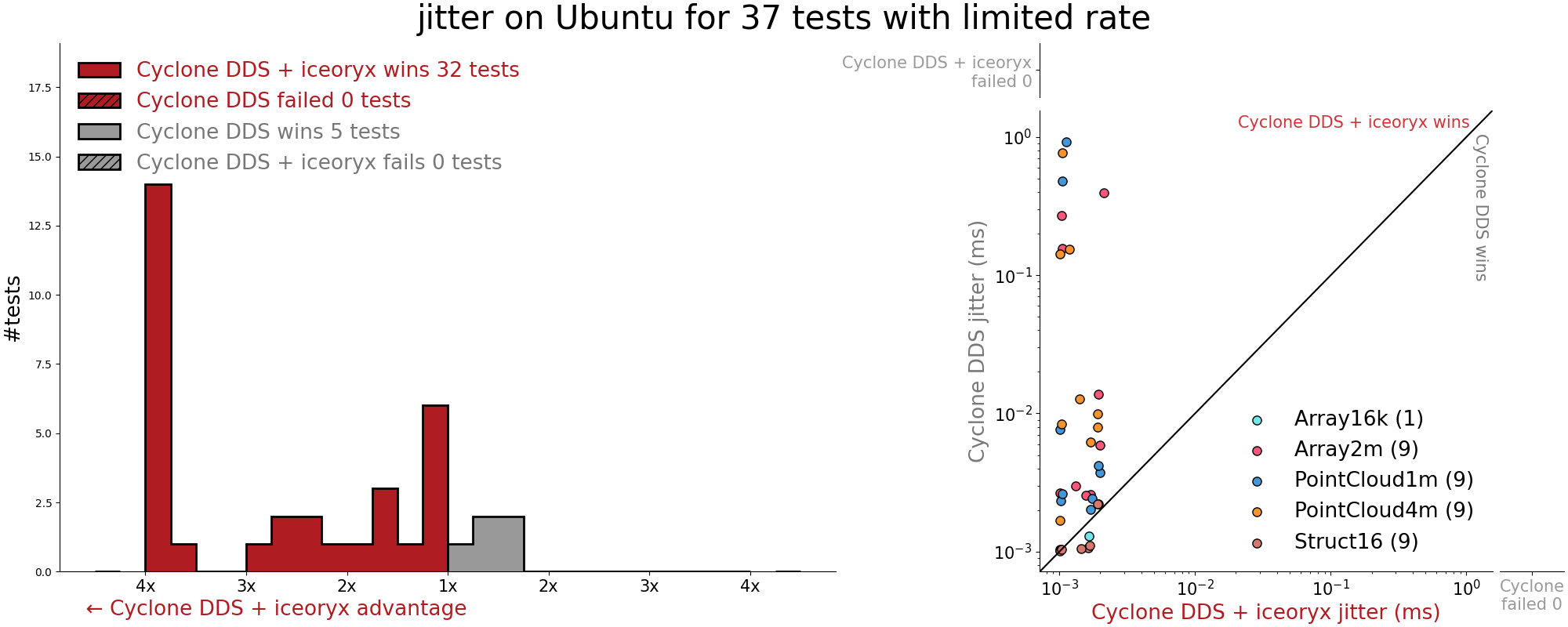

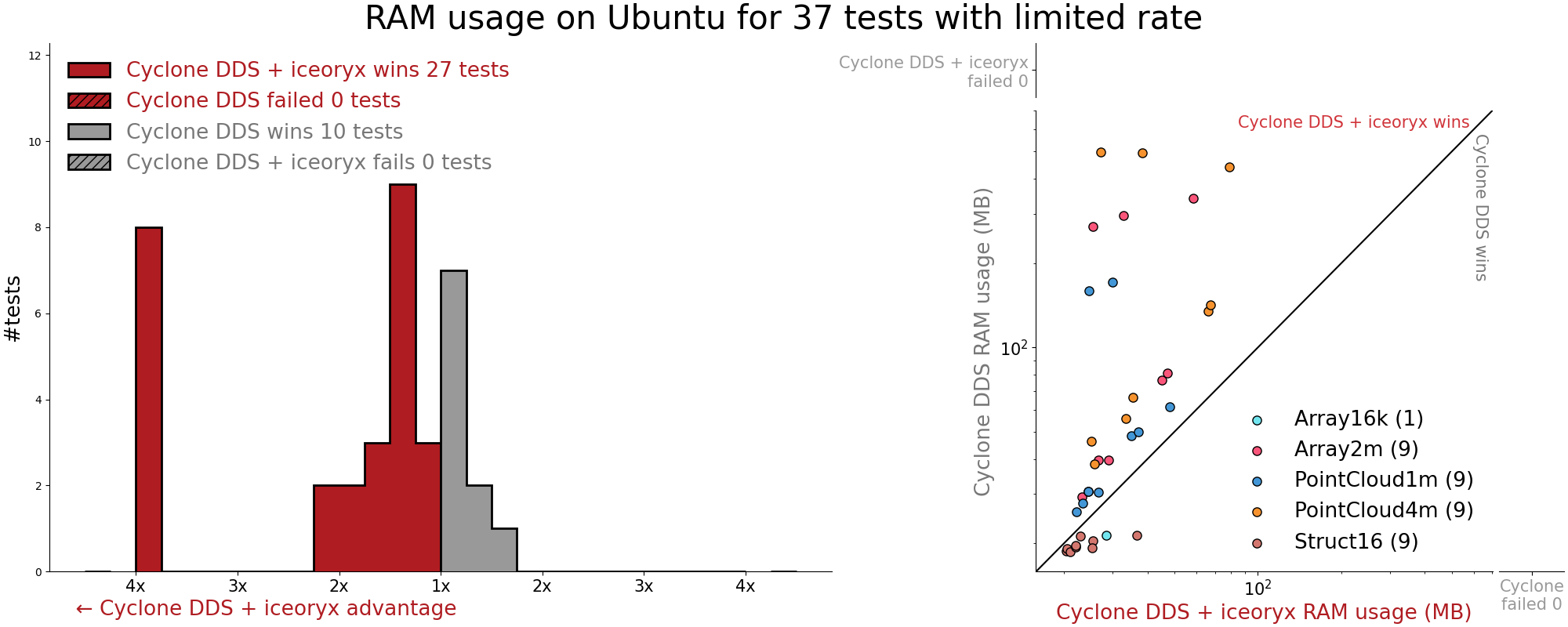

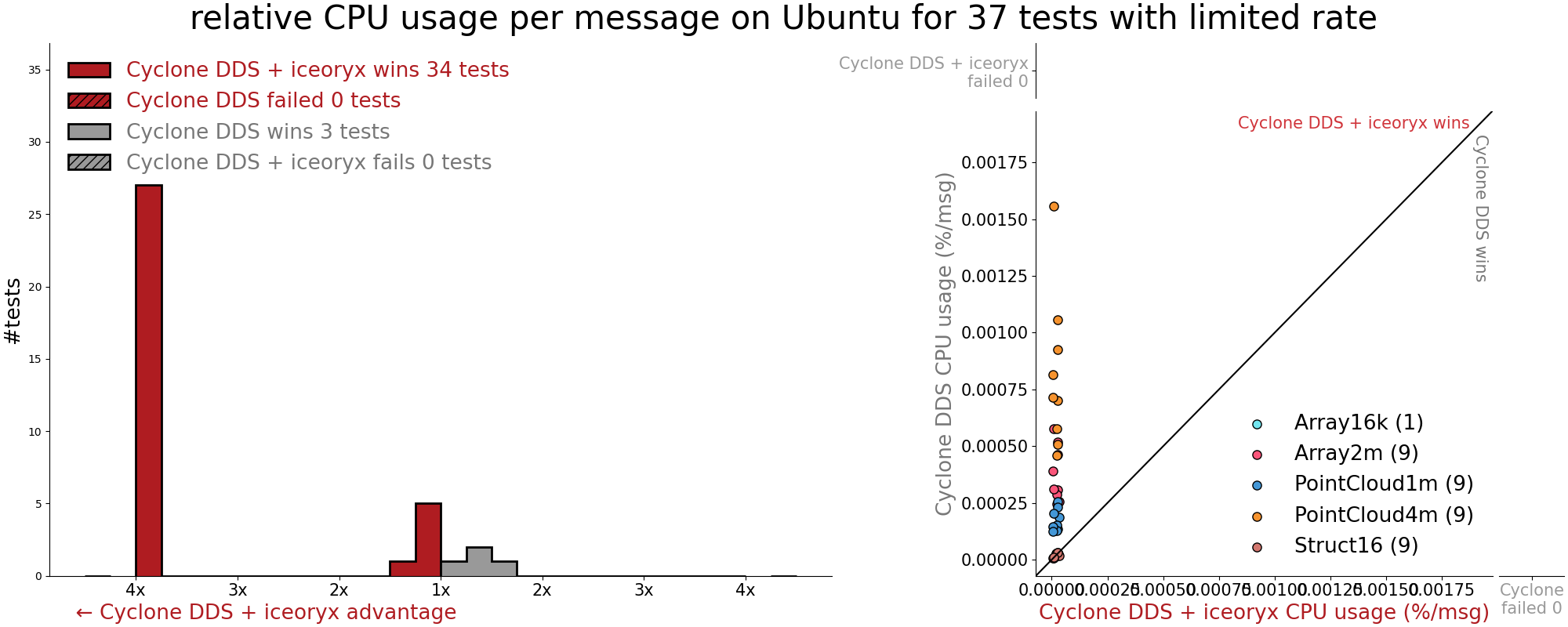

Cyclone DDS with iceoryx Improvement

Cyclone DDS with built-in iceoryx zero-copy was tested using the rclcpp RMW “LoanedMessage” API. As you can see below Cyclone DDS with iceoryx improves upon Cyclone DDS in every measure: latency, jitter, throughput, memory, total CPU usage, relative CPU per message. The instructions to use zero-copy with Cyclone DDS + iceoryx are here. Software engineers at ADLINK & Apex.AI followed the other middleware’s published instructions to use LoanedMessage API, but the other middleware failed these tests without getting any messages through.

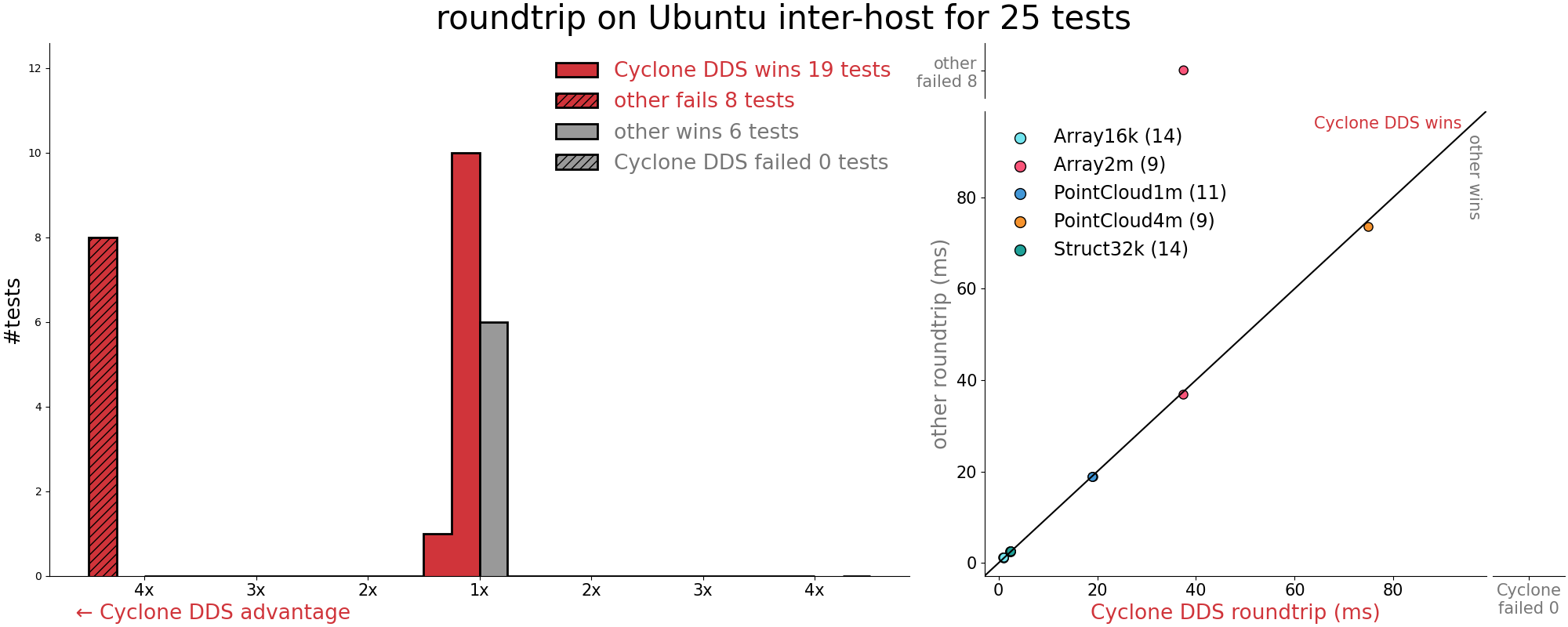

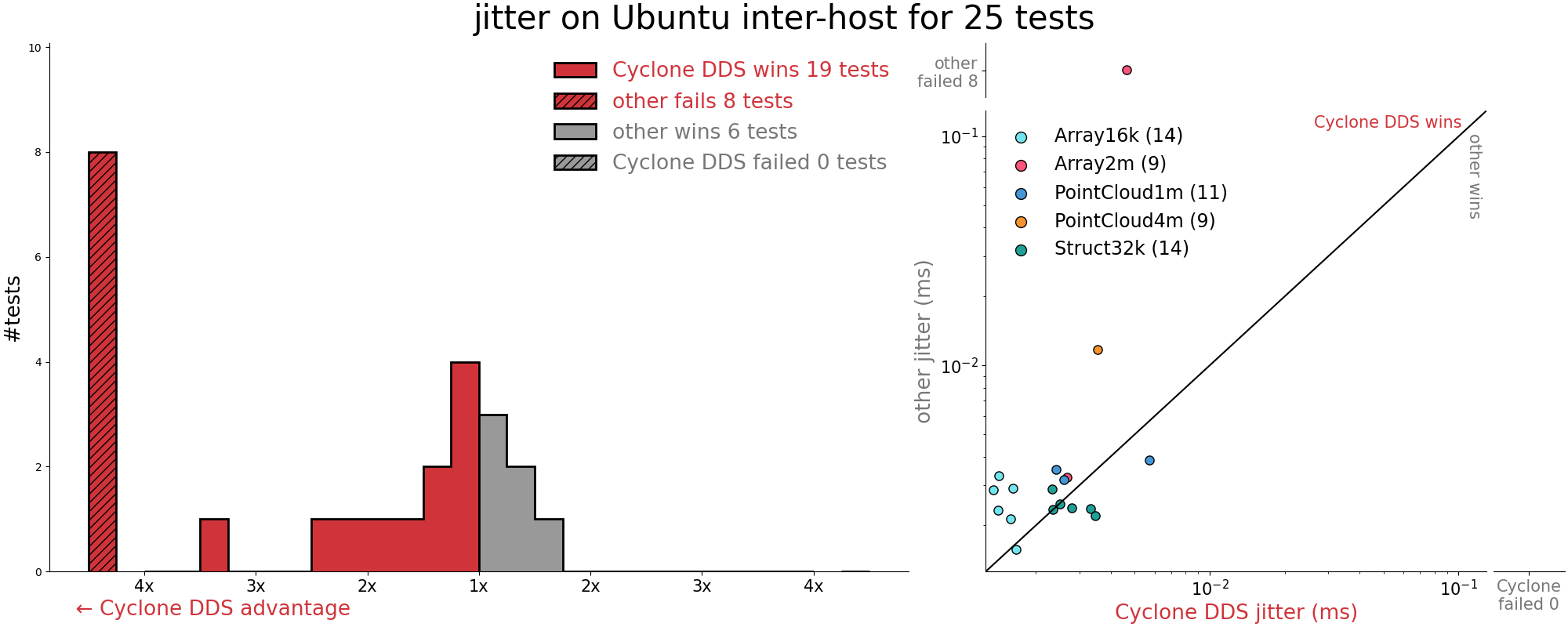

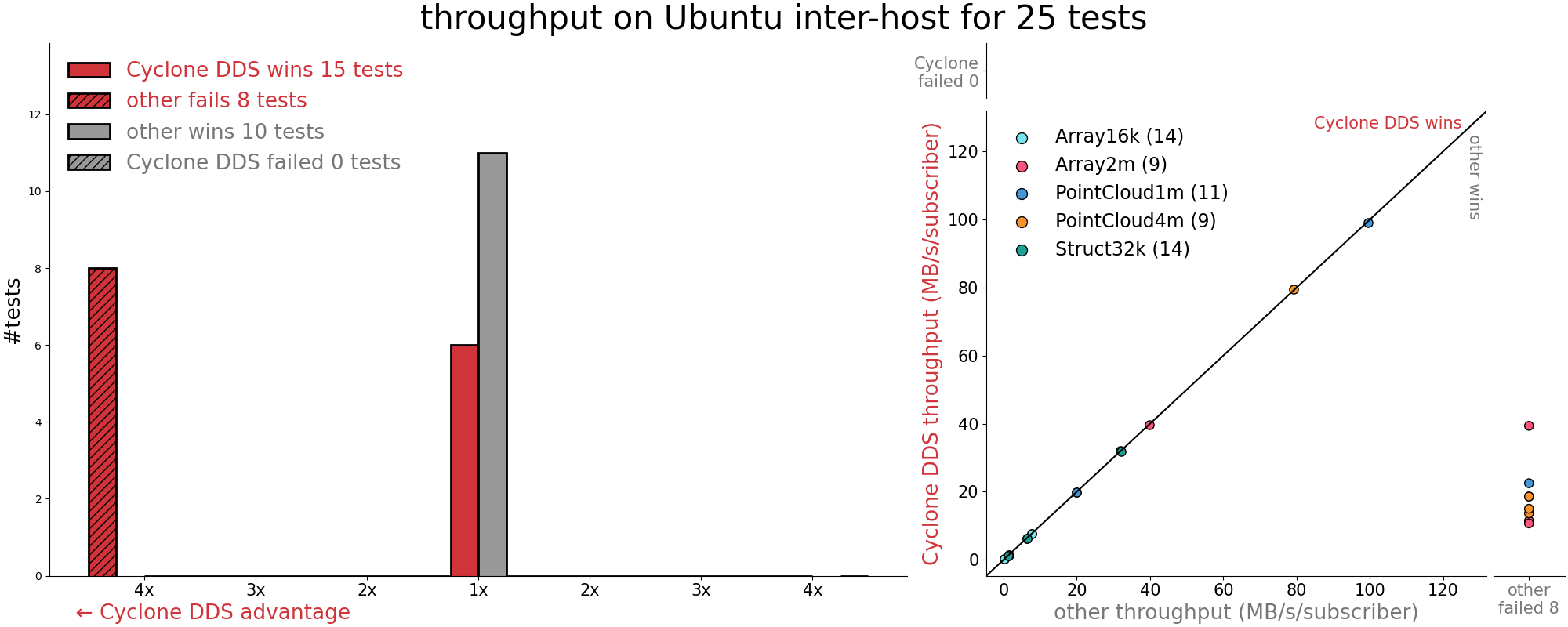

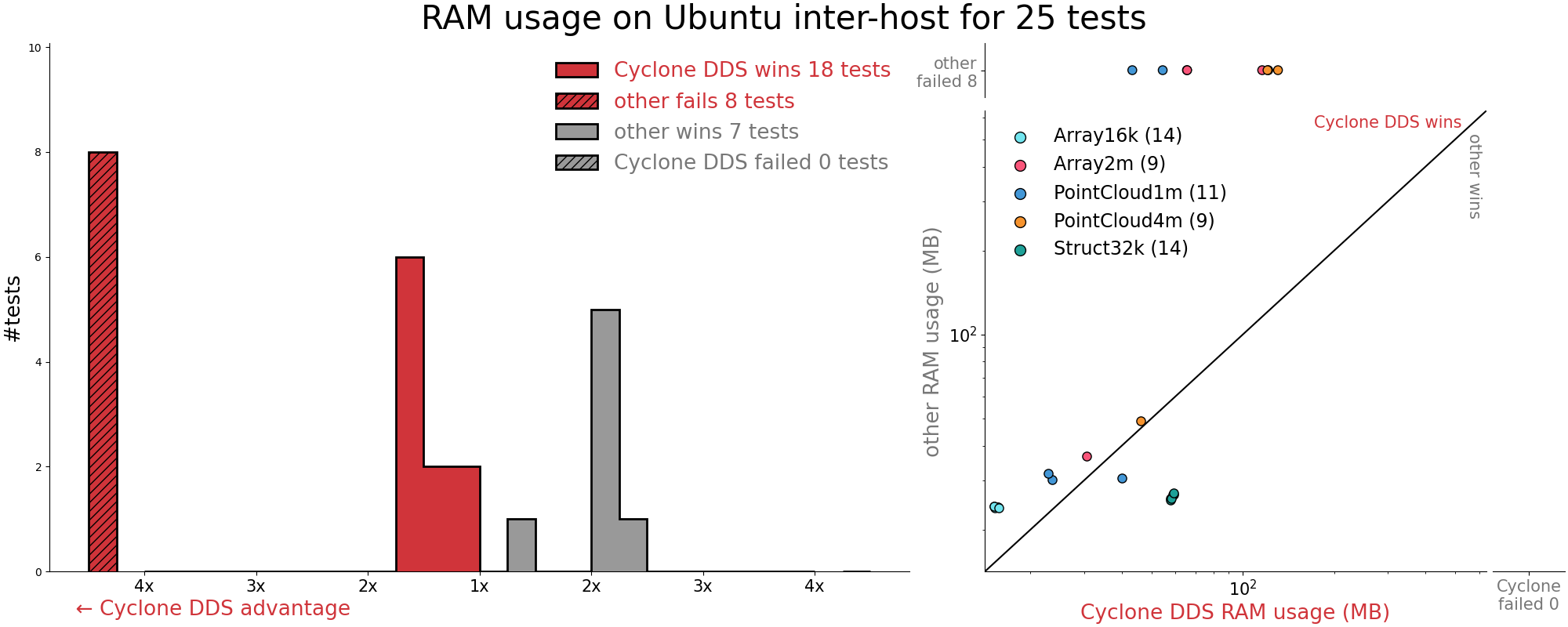

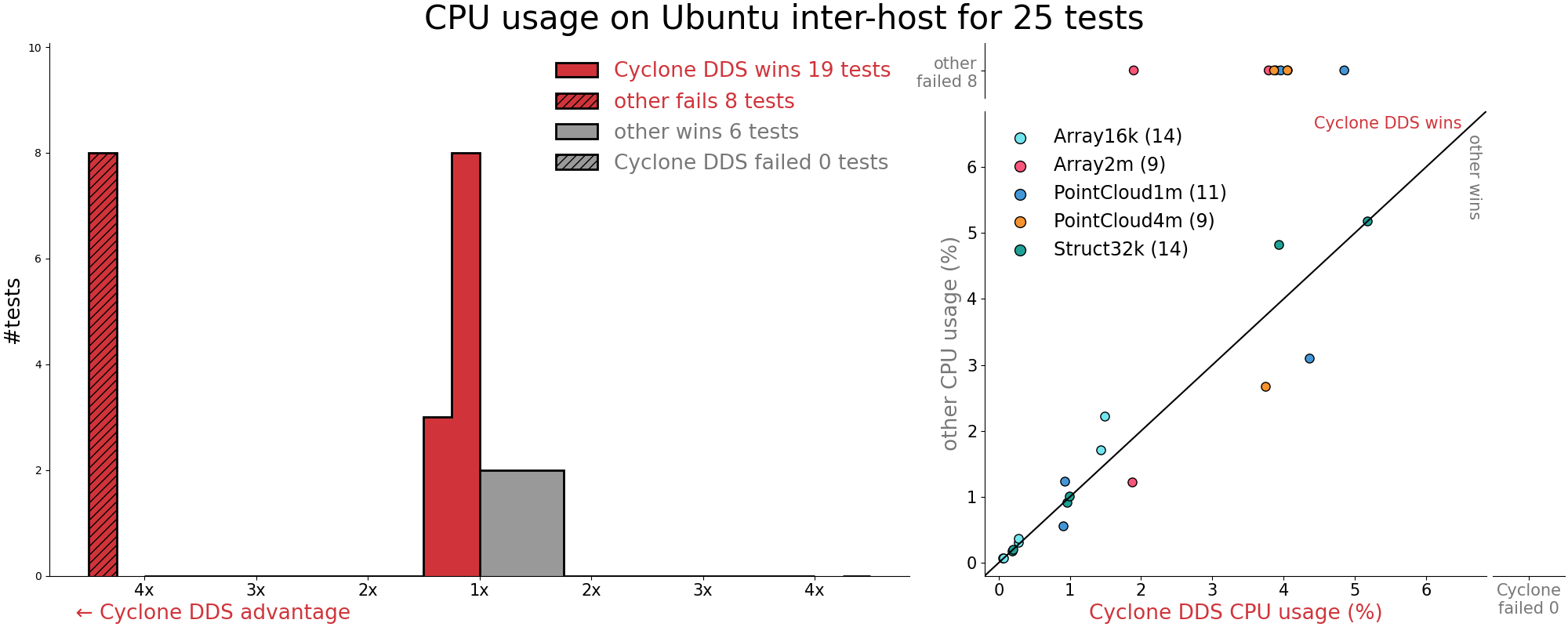

Inter-host Performance

Inter-host test detailed plots are here, and the most relevant plots are shown below.

- Cyclone DDS wins 90 times while the other implementation wins 35 times

- Cyclone DDS fails 0 tests while the other implementation fails 8 tests

For a pub/sub pair in separate processes, what is the average round-trip time, throughput, and CPU/memory utilization? How does this scale with topic frequency and topic size?

Multi-process test results are covered elsewhere in this report, the plots are here, the test scripts, raw data, tabulated data are here.

Services

Several users have reported instances where services never appear, or they never get responses. What do you think the problems might be, and what are you doing to try and address these problems?

- ros2/ros2/issues/1074

- ros2/rmw_fastrtps/issues/392

- ros2/rmw_fastrtps/pull/418

- ros2/rmw_cyclonedds/issues/74

- ros2/rmw_cyclonedds/issues/191

The referenced issues fall into two groups: timing-related issues, and tickets left open for the day when the code that works around missing guarantees in the DDS discovery protocol can be removed. Starting with the latter: since that workaround was introduced in the RMW layer in June 2020, we have not received any reports of missing service responses, and we believe it to be reliable. The main downside is that the service may delay handling the first request from a given client by the discovery latency if that client sends the request immediately upon discovering the service.

Because it may delay the service response for the first request, this provides a plausible explanation for the “delayed first response” symptom in ros2/ros2/issues/1074.

Secondly, with a service request timeout of 10ms, if either the request or response message is lost, the repair will likely have to wait until a timer goes off, because requests and responses are typically not immediately followed by further messages on the same writer. The typical recovery time in such circumstances is on the order of tens of milliseconds.

So the observed behaviour is in line with expectations. We do intend to provide more guarantees in the DDS discovery protocol that will allow the client to wait until the request can be handled without further discovery-related delays. This serves the Cyclone DDS user base more generally than only improving service requests.

We would like to improve the longish recovery delay that occurs when the lost message is the final one before the writer falls silent for a while. Recovery necessarily involves waiting on a timer and sending additional packets, and is thus not likely to ever take less than a few milliseconds. On WiFi the delays will necessarily be longer because WiFi has a relatively high intrinsic latency.

How do services scale with the number of clients? And/or the amount of request traffic?

Client/service interaction is implemented using a pair of topics, following the existing design for services at the time of implementing the Cyclone DDS RMW layer. That means each client and each server introduces two endpoints. The standard discovery protocol requires these to be advertised to all other processes in the network. At the level of DDS discovery, each additional client causes an amount of work/traffic that scales linearly with the size of the system.

For ensuring delivery of responses (in the absence of loss of connectivity), the services check locally cached data, which is also linear in the number of clients.

The actual response message is (currently) sent to all readers of that particular topic. If multicast is available, this means a single message, but even so, there will be acknowledgements sent back from each reader. So again, linear in the number of clients.
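The linear scaling described above can be illustrated with a simplified counting model in Python. This is a back-of-the-envelope sketch, not a description of Cyclone DDS internals: the function names are ours, and it counts only what the text states (two endpoints per client and per server, every endpoint advertised to every other process, one acknowledgement per reader for each response).

```python
def service_endpoints(num_clients, num_servers=1):
    """Each client and each server introduces two endpoints (request and reply)."""
    return 2 * (num_clients + num_servers)


def discovery_announcements(num_clients, num_processes, num_servers=1):
    """Endpoint advertisements if every endpoint is announced to every other process."""
    return service_endpoints(num_clients, num_servers) * (num_processes - 1)


def acks_per_response(num_clients):
    """A response goes to all readers of the reply topic; each reader acknowledges it."""
    return num_clients


for clients in (1, 10, 100):
    processes = clients + 1  # assumption: one process per client plus one server process
    print(clients,
          service_endpoints(clients),
          discovery_announcements(clients, processes),
          acks_per_response(clients))
```

Adding one client to a fixed-size system adds a constant number of endpoints, each advertised to every other process, which is the "linear in the size of the system" cost per client mentioned above.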

The intent has always been to start using writer-side content filtering once that is available, but this has been delayed for a variety of reasons. We are considering extending the API to directly support writing to a specific reader (which can be considered as a special case of writer-side content-filtering). This will prevent responses being sent to all clients.

WiFi

We’ve had a lot of reports from users of problems using ROS 2 over WiFi. What do you think the causes of the problems are, and what are you doing to try to address these problems?

- Some example issues from users:

The challenges of DDS when operating over WiFi are relatively well known to those who have been involved with DDS for a sufficiently long time. The main causes of these challenges are (1) the fact that DDS relies heavily on UDP/IP multicast communication, which is known to be problematic on WiFi, (2) the verbosity of the DDS discovery protocol, and (3) the lossy nature of WiFi.

In order to alleviate these and other challenges posed by DDS when trying to scale out or run over a Wide Area Network, we have designed the zenoh protocol and implemented it as part of the Eclipse Zenoh project (see http://zenoh.io).

Below we describe how zenoh can be transparently used by ROS 2 applications to improve their behaviour over WiFi and in general to have better scalability as well as transparently operate at Internet scale.

How well does the implementation work out-of-the-box over WiFi?

Cyclone DDS over WiFi “just works” unless the WiFi is reached via a wired network. This is because Cyclone DDS chooses between allowmulticast=true and allowmulticast=spdp (multicast discovery, unicast data) depending on whether the selected interface is a WiFi interface.

So if it is using an Ethernet interface that connects into a WiFi network, it sees a wired network and uses true, where you might have wanted to manually set it to spdp, which gives multicast discovery and unicast data.
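For the wired-into-WiFi case, the setting can be forced by hand through the Cyclone DDS XML configuration. The Python sketch below generates such a file and points the CYCLONEDDS_URI environment variable at it. The element path (Domain/General/AllowMulticast) and the environment variable reflect our understanding of the Cyclone DDS configuration format and should be checked against the configuration guide for the installed version. The file name is an arbitrary choice for illustration.

```python
import os
import tempfile
import xml.etree.ElementTree as ET


def make_config(allow_multicast="spdp"):
    """Build a minimal Cyclone DDS config that sets General/AllowMulticast."""
    root = ET.Element("CycloneDDS")
    domain = ET.SubElement(root, "Domain", id="any")
    general = ET.SubElement(domain, "General")
    ET.SubElement(general, "AllowMulticast").text = allow_multicast
    return ET.tostring(root, encoding="unicode")


config_xml = make_config("spdp")

# Hypothetical file location; any readable path works.
config_path = os.path.join(tempfile.gettempdir(), "cyclonedds_wifi.xml")
with open(config_path, "w") as f:
    f.write(config_xml)

# Processes started from this one inherit the variable.
os.environ["CYCLONEDDS_URI"] = "file://" + config_path
print(config_xml)
```

In practice most users would write the XML file by hand and export CYCLONEDDS_URI in the shell before launching their nodes. The script form is used here only to keep the example self-contained.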

When we run Cyclone DDS over WiFi, we have no problems, it “just works”. Your mileage may vary with application topology, number of nodes, topics, data rates & sizes, type of WiFi, antenna type & WiFi chipset, background noise, and movement of network nodes.

In the default configuration it works by sending packets using UDP/IP. If the network works well, it works well. If the network doesn’t work well, it works less well. If the network is very bad, it won’t work.

It is worth noting that the DDSI stack in Cyclone DDS has been deployed for a decade providing the communication via WiFi between autonomous vehicles from a top agriculture machinery manufacturer.

ROS 2 applications that want to leverage zenoh for R2X communication can simply deploy an instance of the zenoh-plugin-dds and transparently communicate over WiFi.

Zenoh’s default configuration has been proven to reduce DDS discovery traffic by 97% (see https://zenoh.io/blog/2021-03-23-discovery/) and with some tuning, we have seen it dropping the discovery traffic by over 99%.

How does the system behave when a robot leaves WiFi range and then reconnects?

With Cyclone DDS alone, this behaves the same as with the other middleware. With zenoh-plugin-dds the disruption of a temporary out-of-range WiFi link is quickly resolved once the connection is re-established. As the level of discovery information shared by the zenoh protocol is extremely small when compared to DDS, and much of the information exchanged by DDS is not even necessary, the communication is re-established immediately without inundating the network with discovery data as in the DDS case.

How long does it take to launch a large application like RViz2 over WiFi?

Cyclone DDS and other RMW both launch large applications like RViz2 over WiFi in the same amount of time. Rover Robotics has done much testing of Nav2 bring up with RViz2 over WiFi.

This question really depends on the network and the complexity of the robot. However, as zenoh reduced DDS discovery by up to 99.97% the start-up time is dramatically reduced. There is discussion of that here: Minimizing ROS 2 discovery traffic

What is a solution for default DDS discovery on lossy networks?

The zenoh-plugin-dds addresses the problem of lossy networks because it does not rely on multicast and its protocol is extremely parsimonious and wire efficient. Here is an overview and tutorial using ROS 2 turtlebot. This plugin is in the next Rolling sync.

How does performance scale with the number of robots present in a WiFi network?

In DDS, the discovery data is sent from everyone to everyone else regardless of actual interest. Additionally, DDS shares discovery data for readers / writers and topics. As tested by iRobot, Cyclone DDS scales well. Additionally, Erik Boasson added domainTag to the OMG DDSI 2.3 specification to support implementing iRobot’s use case: find any Roomba by serial number among ~1,000 robots on the network.

In zenoh, only subscriptions are shared and, more importantly, the resource generalisation mechanism described in eclipse-zenoh/zenoh-plugin-dds makes it possible to reduce the entire set of subscriptions of a robot to a single expression.

Thus the improved scalability provided by the zenoh-plugin-dds over DDS is a consequence of the very nature of the protocol.

Features

What is the roadmap and where is it documented?

The Cyclone DDS + iceoryx joint roadmap is here: Apex.Middleware. Here are the stand-alone roadmaps for Eclipse Cyclone DDS, Eclipse iceoryx, and Eclipse Zenoh.

Can the middleware be configured to be memory-static at runtime?

No, however the design and architecture of the middleware components do not prevent them from being hardened to be memory-static at runtime. Currently performance is paramount over being memory-static at runtime. In addition, through proper configuration of Cyclone DDS and iceoryx, runtime allocations can be avoided in many places (through the use of upper-bounded messages, and using zero copy where appropriate).

What support is there for microcontrollers?

Zenoh-pico (see eclipse-zenoh/zenoh-pico) supports micro-controllers such as STM32, ESP32, and the Zephyr OS reference board, namely the reel board. Zenoh-pico supports micro-ROS with rmw_zenoh_pico_cpp. Zenoh-pico uses the Zenoh ROS 2 DDS plugin zenoh-plugin-dds which works with all Tier 1 ROS Middleware and is in the next ROS 2 Rolling sync. Zenoh-pico overview and step-by-step “how to” instructions are here. Instructions for ROS 2 with Zenoh are here. Instructions for using Cyclone DDS with Zenoh on constrained networks are here.

Are there tools available for integrating/bridging with other protocols (MQTT, etc)? What are they, and how do they work?

Yes. The provided simple APIs and multiple language bindings facilitate integration/bridging with other protocols via Eclipse Cyclone DDS sister projects Eclipse Paho (MQTT), Eclipse Milo (OPC-UA), Eclipse Tahu (legacy SCADA/DCS/ICS), Eclipse Californium (COAP), et al. Cyclone DDS’s 37 sister projects are listed here iot.eclipse.org/projects

How much adherence is there to the RTPS standard?

Full adherence with one exception; for interoperability with the other middleware, Cyclone DDS has been modified to accept some invalid messages that the other ROS middleware sends despite the RTPS specification demanding that those be ignored.

How much support for the DDS-Security specification is provided in the DDS implementation?

DDS-Security specification support is described here. The three plugins that comprise the DDS Security Model in Cyclone DDS are: Authentication Service Plugin; Access Control Service Plugin; Cryptographic Service Plugin. Cyclone DDS implements (or interfaces with libraries that implement) all cryptographic operations including encryption, decryption, hashing, digital signatures, etc. This includes the means to derive keys from a shared secret.

Does the package have explicit tooling and support for protocol dissection?

Yes. Wireshark, performance_test, and ddsperf. Please refer to the cyclonedds.io/docs, performance_test, and the “Debugging” section of rmw_cyclonedds readme. The Wireshark DDSI-RTPS plugin is here.

- Create a Wireshark capture: wireshark -k -w wireshark.pcap.gz

- Generate workloads and traffic using performance_test or ddsperf

- Configure Cyclone to create richer debugging/tracing output

Quality

What is the currently self-declared REP-2004 quality of the package implementing the RTPS/DDS protocols and the RMW?

Cyclone DDS and iceoryx are self-declared to be Quality Level 2 here and here.

How else does the package measure quality? Please list specific procedures or tools that are used.

Eclipse Foundation Development Process has been refined over the course of hundreds of projects to produce high quality software. The development process also addresses project lifecycle, reviews, releases and grievances. Automated quality testing includes CI, test coverage, static code analysis, integration tests, and sanitizer tests as described in the report section “What kinds of tests are run?” further below. Project documentation besides the READMEs is found here: cyclonedds.io/docs, iceoryx.io, zenoh.io and blog.

Where is the development process documented?

These projects follow the Eclipse Foundation Development Process. It documents the development process used by hundreds of Eclipse Foundation projects including Cyclone DDS, iceoryx and Zenoh. In addition, the contributing guidelines are documented here for Cyclone DDS, iceoryx and Zenoh. Note that Eclipse Foundation Development Process results in contributions being a smaller number of larger and thoroughly tested chunks. This means that looking at the number of commits instead of number of pull requests is a more appropriate way to evaluate the liveliness of any Eclipse project including these.

What kinds of tests are run? Smoke tests, unit tests, integration tests, load tests, coverage? What platforms are each of the tests run on?

The following kinds of tests are run:

- Continuous Integration cyclonedds CI, iceoryx CI

- Test coverage cyclonedds test coverage, iceoryx test coverage

- Static code analysis cyclonedds static code analysis, iceoryx static code analysis uses commercial tools PC-Lint Plus and Axivion Suite. Additionally we are working on the introduction of clang_tidy which will be enabled with the iceoryx 2.0 release planned for Humble and will be run publicly on github.

- Sanitizer tests of the cyclonedds CI. Here are the iceoryx sanitizer tests.

Additionally, Open Robotics runs the full ROS 2 test suite with Cyclone DDS nightly.

Has the DDS Security implementation been audited by a third-party?

Yes. Trend Micro security researchers working on security vulnerability research audit Eclipse Cyclone DDS with Eclipse iceoryx. These security researchers perform fuzz testing of Cyclone DDS with iceoryx to test for security vulnerabilities. They are automating the vulnerability searches.

Free-form

In this section, you can add any additional information that you think is relevant to your RMW implementation. For instance, if your implementation has unique features, you can explain them here. These should be things technical in nature, not just marketing. Please keep your responses limited to 2000 words with a reasonable number of graphs; we’ll truncate anything longer than that during editing of the report. If you cannot fit all of your data into the limit, feel free to provide a link to an external resource which we’ll include in the report.

Many have adopted the Eclipse Cyclone DDS ROS middleware after performing their technical due diligence of the available ROS middleware implementations. Adopters include:

- Default ROS middleware for ROS 2 Galactic

- Most Nav2 users run Eclipse Cyclone DDS per the Nav2 WG Leader

- Default ROS middleware for Autoware Foundation developers

- Default ROS middleware for Apex.OS

- Default ROS middleware for Indy Autonomous Challenge Base Vehicle Software WG

- Default ROS middleware for SVL Simulator

- Default ROS middleware for SOAFEE “Sophie” Scalable Open Architecture For Embedded Edge open source with Arm, ADLINK, Apex.AI, AutoCore, Capgemini Engineering, Continental, CARIAD, Green Hills Software, Linaro, Marvell, MIH Consortium, Red Hat, SUSE, Tier IV, Volkswagen, Woven Planet (Toyota Research), Zing Robotics as explained here. ZDNet explains SOAFEE here.

- Eclipse Cyclone DDS adopters, Eclipse iceoryx adopters, Eclipse Zenoh adopters

We also strongly support and encourage the ROS 2 community and ROS 2 projects such as Navigation2, MoveIt2 and Autoware.Auto.

The DDSI core of Cyclone DDS is widely deployed in thousands of mission critical systems including Fujitsu fiber switches in the Internet backbone and AT&T network; autonomous agricultural vehicles; NASA programs; 560 megawatt gas turbines; ship defence systems of 18 countries; and cow milking robots, which is udderly delightful.🐄

Cyclone DDS has progressed from REP 2004 Quality Level 4 in 2019 to Quality Level 3 in 2020 and Quality Level 2 in 2021. We expect Cyclone DDS and iceoryx to be Quality Level 1 in 2022 (except for section 4.v.a automated code style enforcement which the Cyclone DDS lead committer Erik Boasson has determined is the work of the devil).

Something that is beyond the scope of this report but worth mentioning is that there is higher performance available especially for larger messages to the Cyclone DDS user if they want to change defaults such as:

- Increase maximum message size

- Increase fragment size

- Increase high-water mark for the reliability window on the writer side

- Increase the default kernel network buffer sizes such as the UDP receive buffers (net.core.rmem_max, net.core.rmem_default)
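As a rough illustration of the tuning options listed above, the Python sketch below builds a Cyclone DDS XML configuration that raises the maximum message size, fragment size and writer high-water mark, and reads the current Linux UDP receive buffer limits. The element names (General/MaxMessageSize, General/FragmentSize, Internal/Watermarks/WhcHigh) are our understanding of the Cyclone DDS configuration format, and the values are placeholders rather than recommendations. Both should be checked against the configuration guide for the installed version.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def make_tuned_config(max_message_size="65500B", fragment_size="4000B", whc_high="500kB"):
    """Build a config that changes the three Cyclone DDS settings listed above.

    All default values here are illustrative placeholders.
    """
    root = ET.Element("CycloneDDS")
    domain = ET.SubElement(root, "Domain", id="any")
    general = ET.SubElement(domain, "General")
    ET.SubElement(general, "MaxMessageSize").text = max_message_size
    ET.SubElement(general, "FragmentSize").text = fragment_size
    internal = ET.SubElement(domain, "Internal")
    watermarks = ET.SubElement(internal, "Watermarks")
    ET.SubElement(watermarks, "WhcHigh").text = whc_high
    return ET.tostring(root, encoding="unicode")


def read_kernel_buffer_limits():
    """Read net.core.rmem_max and net.core.rmem_default on Linux (None if unavailable)."""
    limits = {}
    for name in ("rmem_max", "rmem_default"):
        try:
            limits[name] = int((Path("/proc/sys/net/core") / name).read_text())
        except (OSError, ValueError):
            limits[name] = None
    return limits


print(make_tuned_config())
print(read_kernel_buffer_limits())
```

Raising the kernel limits themselves is done outside the middleware, for example with sysctl on Linux, and requires administrator rights.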

For Cyclone DDS + iceoryx users of the LoanedMessage API with shared memory, the behavior is already well optimized and there is little need to change anything from the defaults.

Appendix A: Test Environment

“Ubuntu” AMD Ryzen 5 5600X (turbo disabled), 32GB DDR4-3600 RAM, Ubuntu 20.04.3 HWE

- ROS 2 Galactic Patch Release 1 amd64 binary download

- Apex.AI/performance_test

“Windows 10” AMD Ryzen 5 5600X (turbo disabled), 32GB DDR4-3600 RAM, Windows 10 Pro Version 21H1 Build 19043.1237

- Visual Studio 2019

- ROS 2 Galactic Patch Release 1 built from source

- Apex.AI/performance_test

“RPi4B” Raspberry Pi Model 4B, 4GB RAM, Ubuntu 20.04.3

- ROS 2 Galactic Patch Release 1 arm64 binary download

- Apex.AI/performance_test

“macOS M1” Macbook Pro M1 2020, macOS Big Sur

- ROS 2 Galactic Patch Release 1 built from source

- Apex.AI/performance_test

Inter-host is a pair of PCs with Intel Xeon E3-1275 v5, 32GB RAM, Ubuntu 20.04.3 HWE

- ROS 2 Galactic Patch Release 1 amd64 binary download

- Apex.AI/performance_test

- Netgear GS108 unmanaged switch

Appendix B: Performance Summary Per Platform

This section shows the performance summary for each of the tested platforms. How to instructions, test scripts, raw data, tabulated data, tabulation scripts, plotting scripts and detailed plots for every individual test are here.

Ubuntu

- Cyclone DDS wins 414 times while other wins 198 times.

- Cyclone DDS fails 0 tests while other fails 9 tests.

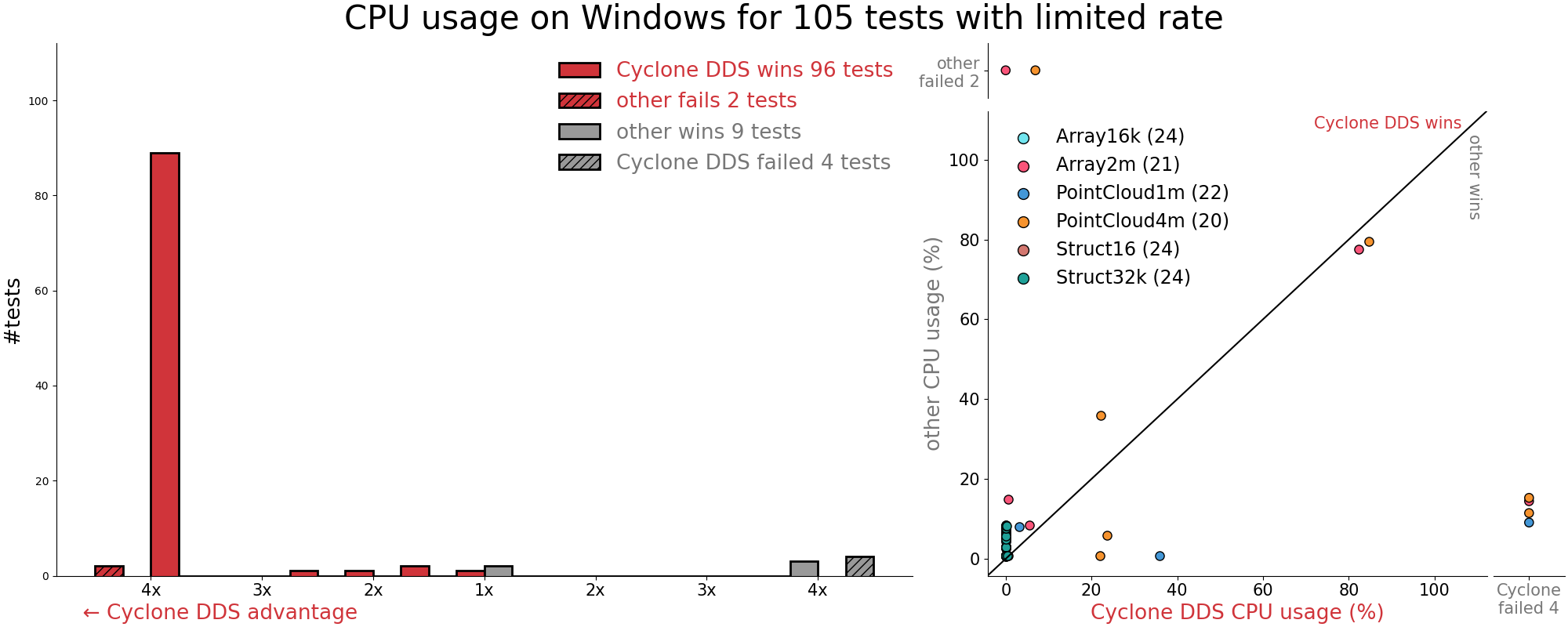

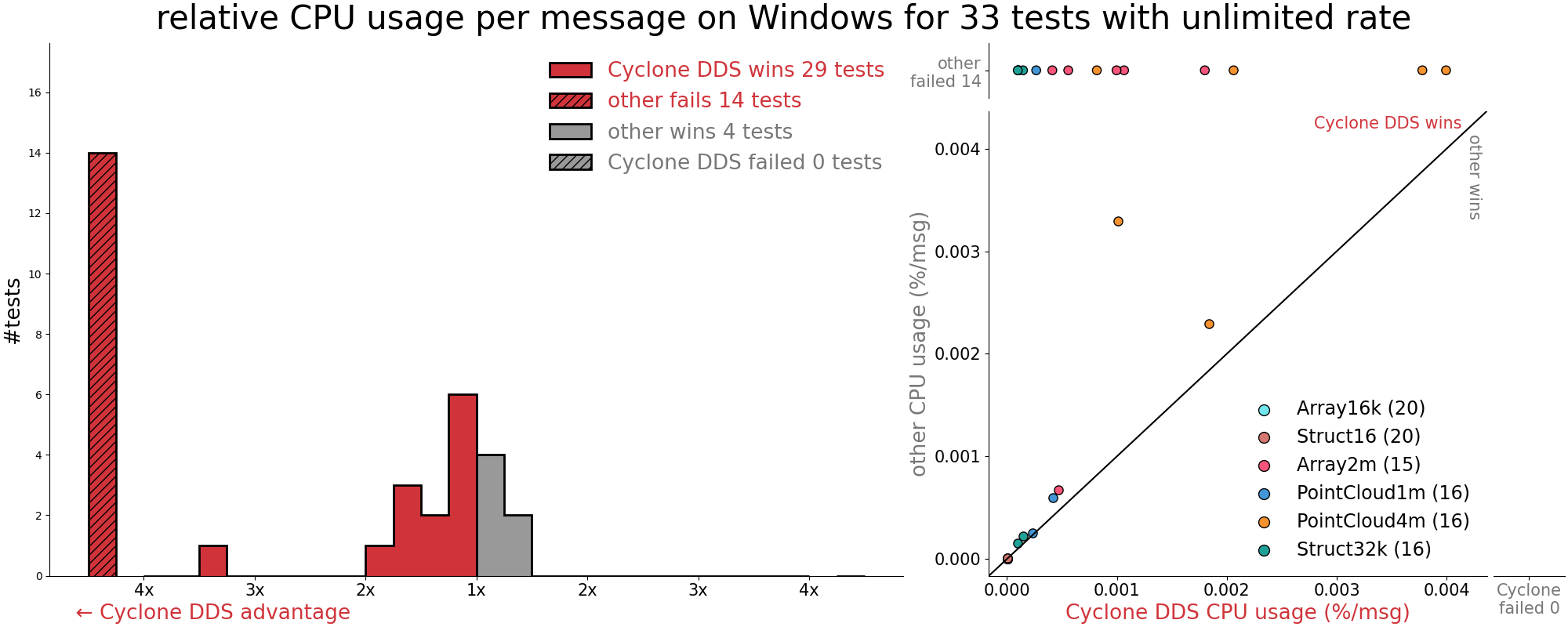

Windows 10

- Cyclone DDS wins 486 times while other wins 99 times.

- Cyclone DDS fails 4 tests while other fails 30 tests.

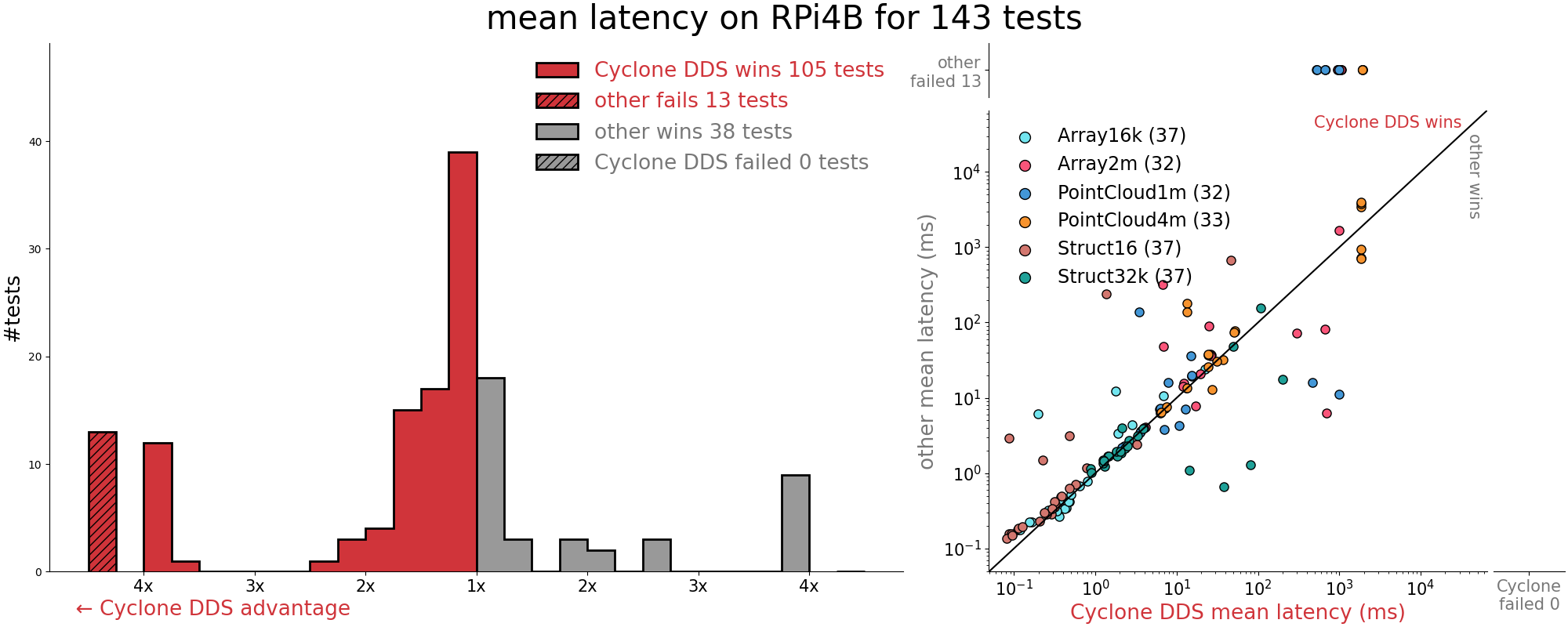

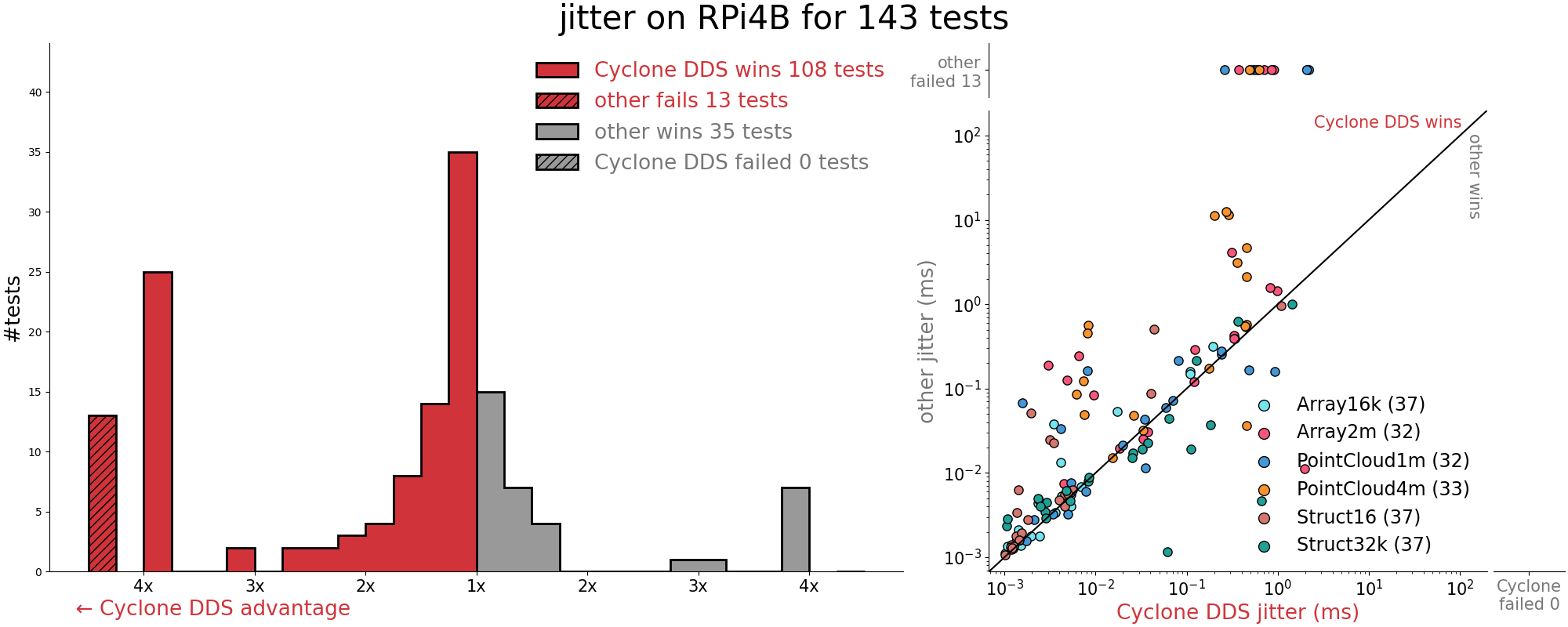

Raspberry Pi Model 4B

- Cyclone DDS wins 425 times while other wins 183 times.

- Cyclone DDS fails 0 tests while other fails 13 tests.

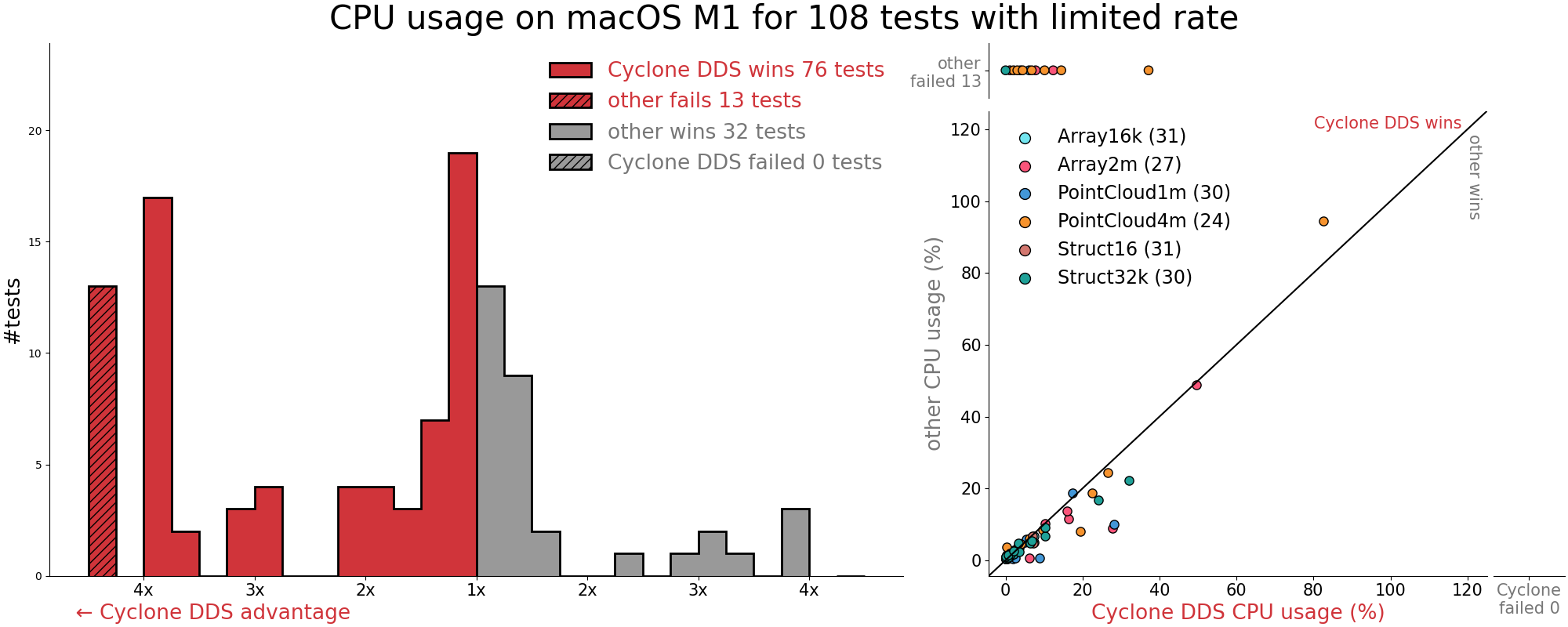

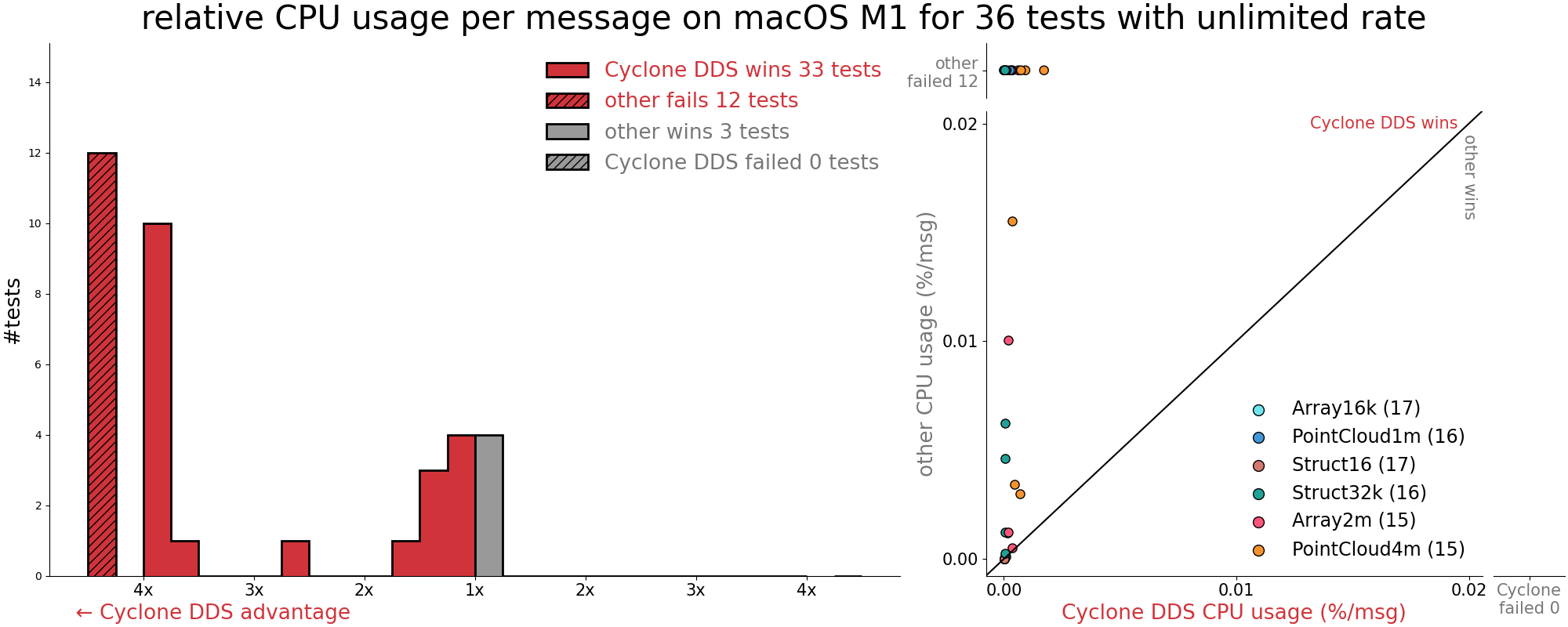

macOS M1

- Cyclone DDS wins 506 times while other wins 106 times.

- Cyclone DDS fails 0 tests while other fails 25 tests.

Appendix C: Tests that fail with OS network buffer defaults

The following tests fail for both middleware when run with operating system defaults on the test hardware listed here. When Ubuntu and Windows 10 network buffer size is increased then Cyclone DDS passes these tests. But this report is about default ROS middleware “out of the box” experience. So configuration changes are outside the scope of this report. Canonical and Microsoft can consider adjusting operating system network buffer defaults so that ROS 2 “just works”. Note that “rate: 0” means the test is free-running unlimited frequency not freerunning parkour.🏃

host: RPi4B, mode: MultiProcess, topic: PointCloud4m, rate: 20, subs: 10, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 0, subs: 1, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 0, subs: 3, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 0, subs: 10, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 20, subs: 1, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 20, subs: 3, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 20, subs: 10, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 100, subs: 1, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 100, subs: 3, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 100, subs: 10, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 500, subs: 1, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 500, subs: 3, zero_copy: false, reliable true

host: Ubuntu, mode: MultiProcess, topic: PointCloud8m, rate: 500, subs: 10, zero_copy: false, reliable true

host: Windows, mode: MultiProcess, topic: Array2m, rate: 500, subs: 10, zero_copy: false, reliable true

host: Windows, mode: MultiProcess, topic: PointCloud1m, rate: 0, subs: 1, zero_copy: false, reliable true

host: Windows, mode: MultiProcess, topic: PointCloud1m, rate: 0, subs: 3, zero_copy: false, reliable true

host: Windows, mode: MultiProcess, topic: PointCloud1m, rate: 0, subs: 10, zero_copy: false, reliable true

host: Windows, mode: MultiProcess, topic: PointCloud1m, rate: 500, subs: 10, zero_copy: false, reliable true

host: Windows, mode: MultiProcess, topic: PointCloud4m, rate: 500, subs: 10, zero_copy: false, reliable true
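For anyone who wants to filter or re-run the scenarios listed above, the descriptor lines can be parsed into dictionaries with a small Python helper. This is a sketch written for this appendix, not part of the published test scripts. It accepts fields written either as "key: value" or as "key value".

```python
def convert(value):
    """Turn 'true'/'false' into bool and digit strings into int; leave the rest as str."""
    lowered = value.lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    if value.isdigit():
        return int(value)
    return value


def parse_scenario(line):
    """Parse one comma-separated scenario descriptor line into a dict."""
    scenario = {}
    for field in line.split(","):
        field = field.strip()
        if not field:
            continue
        if ":" in field:
            key, value = field.split(":", 1)
        else:
            parts = field.split(None, 1)
            if len(parts) != 2:
                raise ValueError(f"cannot parse field: {field!r}")
            key, value = parts
        scenario[key.strip()] = convert(value.strip())
    return scenario


example = ("host: RPi4B, mode: MultiProcess, topic: PointCloud4m, "
           "rate: 20, subs: 10, zero_copy: false, reliable true")
print(parse_scenario(example))
```

A rate of 0 parses to the integer 0, which, as noted above, denotes the free-running unlimited frequency.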