Introduction

Welcome! This is a book about multi-robot systems. Why? Because it's the future!

Robots are becoming more affordable, more capable, and more useful in many "real life" scenarios. As a result, we are seeing more and more robots that need to share spaces and work together to accomplish tasks. In this book, we will introduce the Robot Operating System 2 (ROS 2) as well as the Robotics Middleware Framework (RMF), which is built on ROS 2 and tries to simplify the creation and operation of complex multi-robot systems.

This chapter describes the motivation and goals for ROS 2 and the RMF system for integrating multiple robots.

ROS 2

The Robot Operating System (ROS) is a set of software libraries and tools for building robot applications. From drivers to state-of-the-art algorithms, and with powerful developer tools, ROS has what you need for your next robotics project. And it’s all open source.

Since ROS was started in 2007, a lot has changed in the robotics and ROS community. ROS 1, originally just "ROS", began life as the development environment for the Willow Garage PR2 robot, a high-performance mobile manipulation platform intended for advanced research and development. The original goal of ROS was to provide the software tools users would need to undertake novel research and development projects with this robot. At the same time, the ROS 1 development team knew the PR2 would not be the only robot in the world, nor the most important, so they wanted ROS 1 to be useful on other robots, too. The original focus was on defining levels of abstraction (usually through message interfaces) that would allow much of the software to be reused elsewhere.

ROS 1 satisfied the PR2 use case, but also became useful on a surprisingly wide variety of robots. This included robots similar to the PR2, but also wheeled robots of all sizes, legged humanoids, industrial arms, outdoor ground vehicles (including self-driving cars), aerial vehicles, surface vehicles, and more. ROS 1 adoption also took a surprising turn, happening in domains beyond the mostly academic research community that was the initial focus. ROS-1-based products were coming to market, including manufacturing robots, agricultural robots, commercial cleaning robots, and others. Government agencies were also looking more closely at ROS for use in their fielded systems; NASA, for example, expected to run ROS on the Robonaut 2 deployed to the International Space Station.

All of these applications certainly grew the ROS platform in unexpected ways. Though it held up well, the ROS 1 team believed they could better meet the needs of the broader ROS community by tackling their new use cases head-on. And so, ROS 2 was born.

The initial goal of the ROS 2 project was to adapt to the changing landscape, leveraging what was great about ROS 1 and improving what wasn’t. But there was also a desire to preserve ROS 1 as it existed, to continue working and remain unaffected by the development of ROS 2. So, ROS 2 was built as a parallel set of packages that can be installed alongside and interoperate with ROS 1 (for example, through message bridges).

At the time of writing, we have reached the 13th and last official ROS 1 release, Noetic Ninjemys, and the first LTS release of ROS 2, Foxy Fitzroy.

A large and growing number of ROS 2 resources can be found on the web. A great place to start is the ROS Index page for ROS 2, as well as the ROS 2 chapter further along in this book.

Enjoy your journey!

Robotics Middleware Framework (RMF)

For a moment, think of any large building. It could be a shopping mall, housing complex, university building, workplace, airport, hospital, hotel, and so on. Are items delivered within the building? Is the building floor cleaned regularly? For most buildings, the answer to both questions is "yes."

Now, let's think of what happens when robots start to perform those tasks. In today's robot marketplace, you can purchase excellent delivery robots, as well as excellent floor-cleaning robots. However, what if the floor is being cleaned at the same time that items are being delivered in the building? This situation is trivial when humans are performing the cleaning and delivery tasks: a quick glance between a delivery person pushing a cart and a custodian cleaning the floor is all it takes to quickly reach a compromise. One or both people will find a way to slightly alter the timing of their task to allow both tasks to be completed.

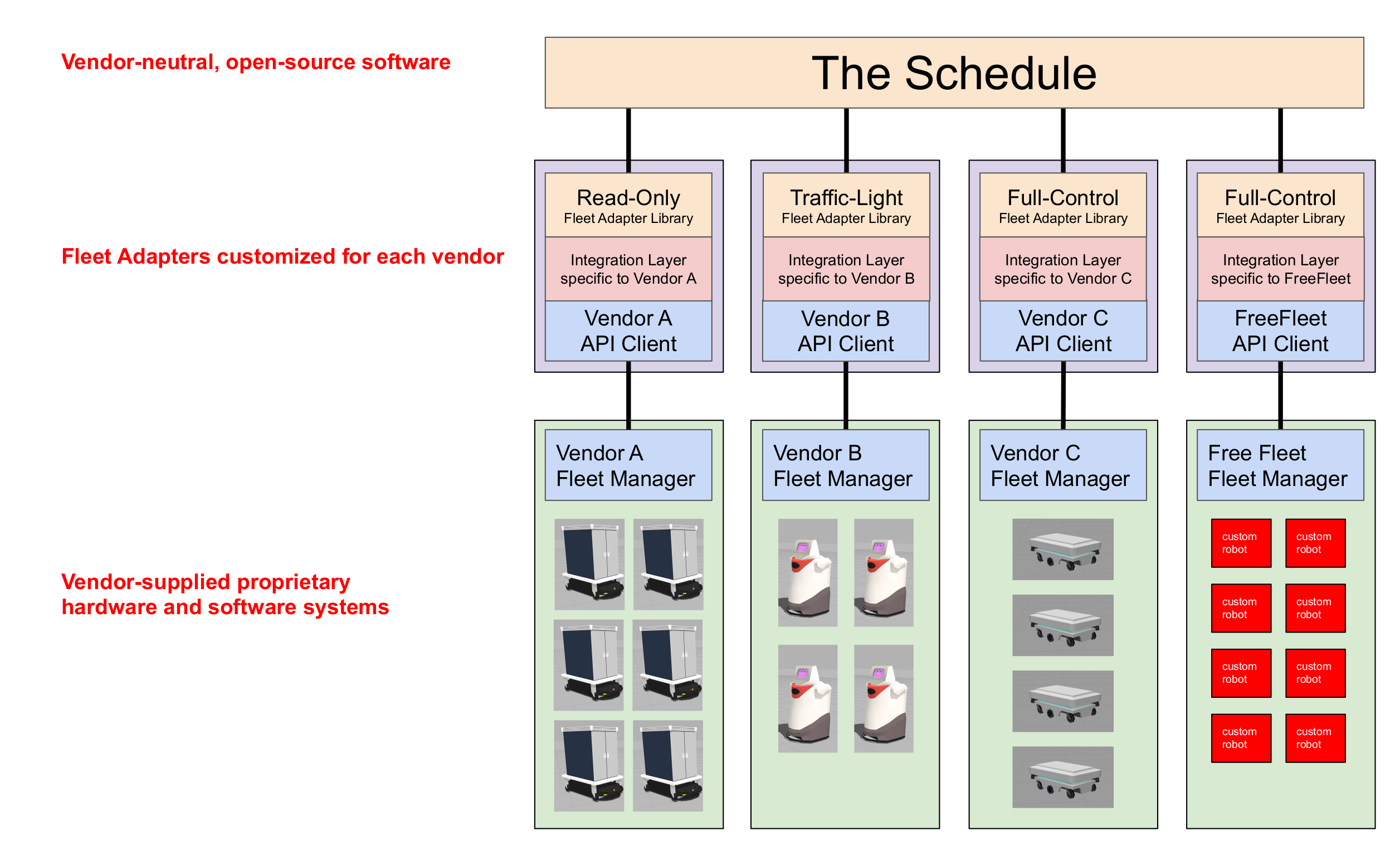

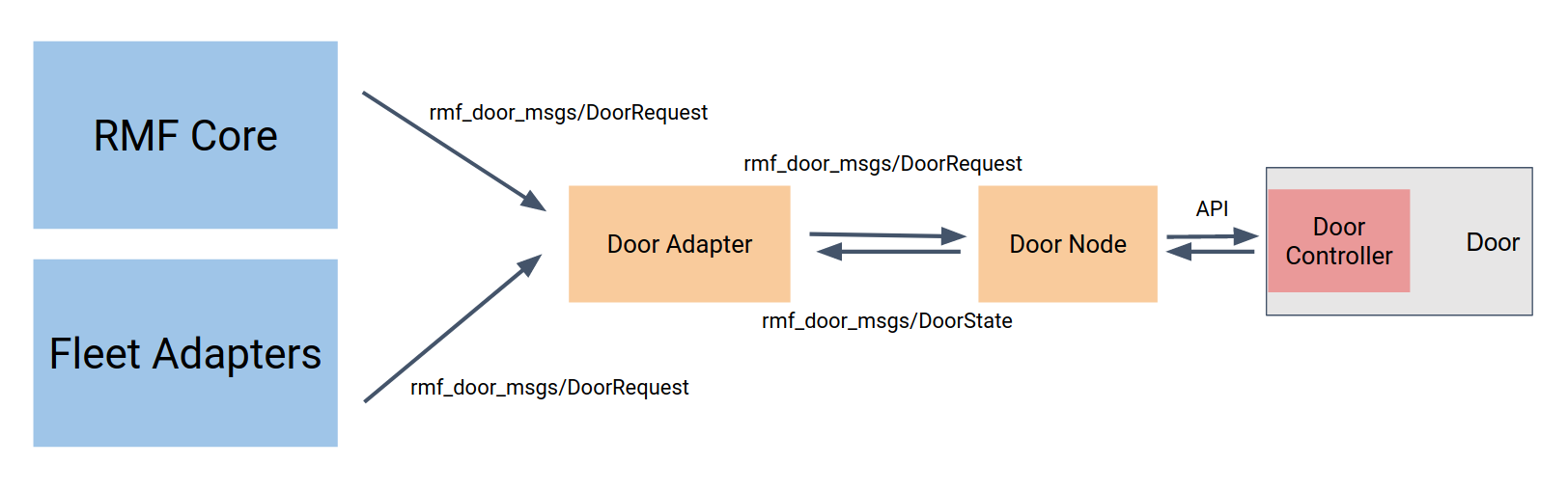

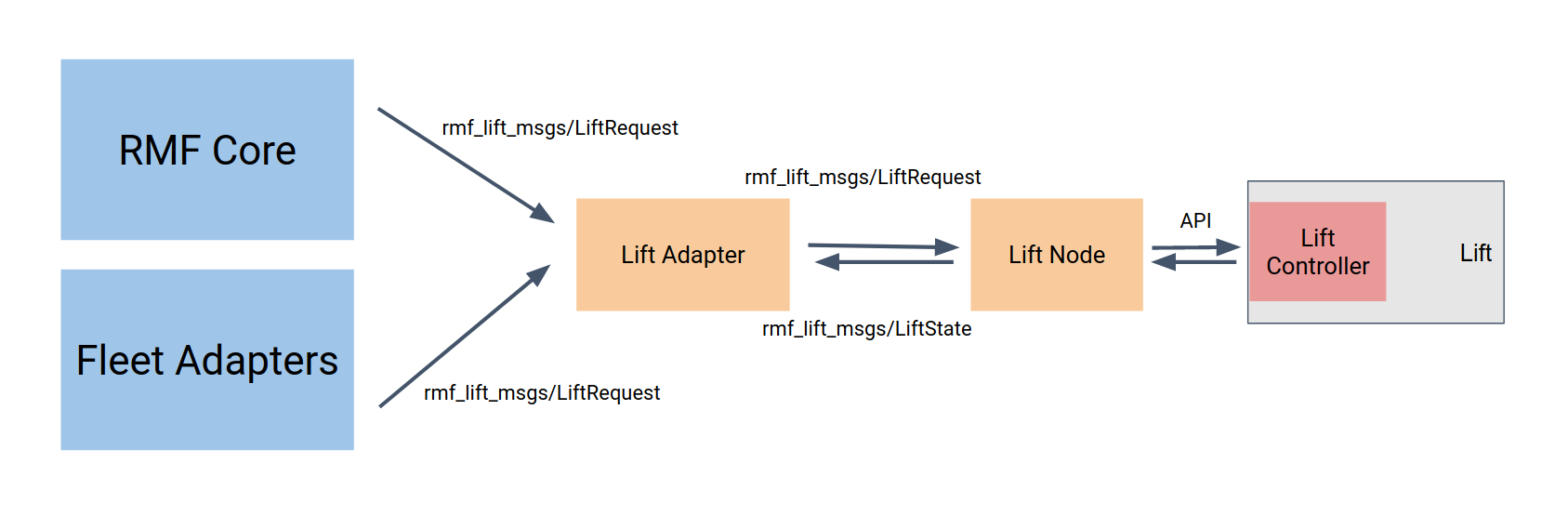

Unfortunately, robots are nowhere near as capable as humans at abstract reasoning, planning, and informal communication! This type of scenario is what the Robotics Middleware Framework (RMF) tries to help avoid. In today's marketplace, if all robots are purchased from the same manufacturer, the robots in such a single-vendor system will know of each other's existence and will avoid conflicting with each other. However, multi-vendor, multi-robot systems remain an open problem, and we expect that multi-vendor robot deployments will be the norm in all large buildings in the future. To address this situation, RMF provides a set of conventions, tools, and software implementations to allow multiple fleets of robots to interoperate with each other and with shared building infrastructure, such as lifts, doors, corridors, and other natural "bottlenecks" to traffic flows and tasks.

Without a framework for multi-vendor robotics in place, there can be significant but hidden risks for building operators and end users when they are forced to commit to a single system or platform provider. These hidden risks are likely to force an end user to limit their selection of future solutions to that single provider, to minimize operational risk and avoid redundant integration costs. As the scope and scale of robotic deployments increase, this problem is exacerbated, leaving the customer with the perception that there are no good options except to stay with their current provider, and preventing the use of robots from newer entrants to the marketplace.

Beyond the increased cost risk of scaling deployment with different providers, there is also the inherent conflict over shared resources such as lifts, doorways, corridors, network bandwidth, chargers, operations-center screen “real estate,” and human resources such as IT personnel and maintenance technicians. As robotic scale increases, it becomes more cumbersome for an operations team to consider managing a large, heterogeneous, multi-vendor robot environment.

These problem statements were the foundational motivations for the development of RMF.

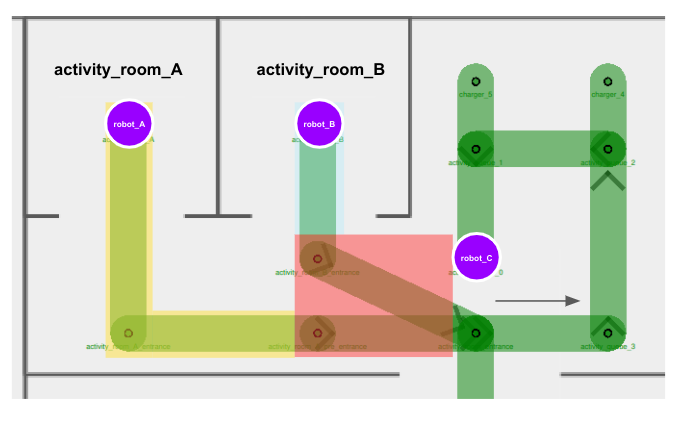

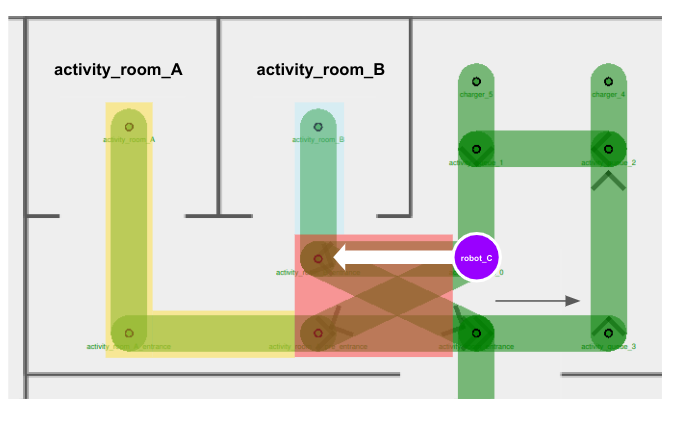

In the previous "cleaning and delivery" scenario, RMF can act as a traffic controller to help the delivery robot and cleaning robot negotiate a way for both tasks to be accomplished, depending on the relative priority and importance of each task. If the cleaning task is urgent (perhaps a spill occurred in a busy corridor), RMF could route the delivery task through a different set of corridors. If the delivery task is time-critical, RMF could direct the cleaning robot to pause its work and move out of the way until the delivery robot clears the corridor. Of course, these solutions are obvious and could be easily hand-written for this particular "cleaning and delivery" corridor-sharing scenario. The challenge comes from trying to be generic across many scenarios, while also trying to be "future proof" to allow expansion to currently-unknown robots, applications, and task domains.
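To make the corridor-sharing example concrete, here is a minimal sketch of priority-based conflict resolution. It is a toy illustration only, not RMF's actual traffic negotiation. The `Task` class, the `resolve_corridor_conflict` function, the robot names, and the numeric priorities are all hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """A hypothetical task competing for a shared corridor."""
    robot: str
    kind: str       # e.g. "clean" or "delivery"
    priority: int   # larger value means more urgent (assumed convention)


def resolve_corridor_conflict(a: Task, b: Task) -> dict:
    """Decide which robot keeps the corridor and which one yields.

    The more urgent task proceeds. Ties go to the first task so that
    the outcome is deterministic.
    """
    winner, loser = (a, b) if a.priority >= b.priority else (b, a)
    return {"proceed": winner.robot, "yield": loser.robot}


cleaning = Task(robot="cleaner_1", kind="clean", priority=2)
delivery = Task(robot="delivery_1", kind="delivery", priority=1)

# An urgent spill: the cleaning robot keeps the corridor,
# so the delivery robot has to wait or take another route.
print(resolve_corridor_conflict(cleaning, delivery))
```

A hand-written rule like this works for two robots and one corridor. The difficulty described above is doing the same thing generically, for many robots, resources, and task types at once.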

The rest of the book will dive into these details to show how RMF tries to foresee and prevent resource conflicts and improve the efficiency of multi-vendor, multi-robot systems. There is no magic here! All of the implementations are open-source and available for inspection and customization.

We would like to acknowledge the Singapore government for their vision and support to start this ambitious research and development project, "Development of Standardised Robotics Middleware Framework - RMF detailed design and common services, large-scale virtual test farm infrastructure, and simulation modelling". The project is supported by the Ministry of Health (MOH) and National Robotics Program (NRP).

Any opinions, findings and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of the NR2PO and MOH.

So what is RMF?

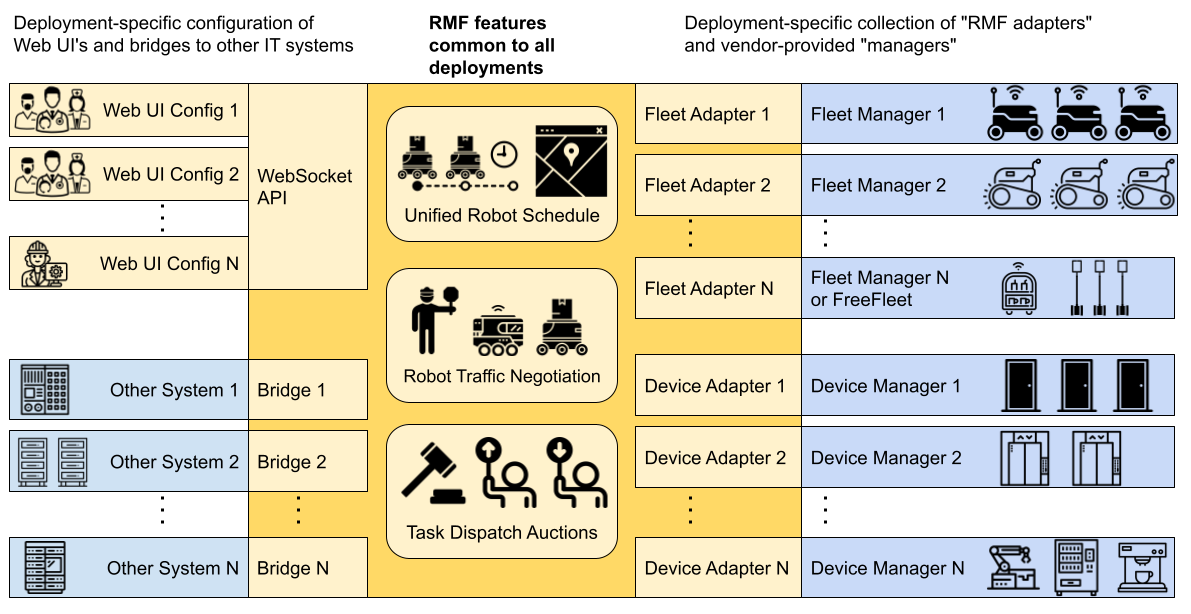

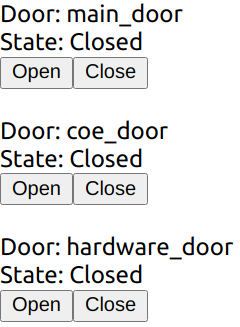

RMF is a collection of reusable, scalable libraries and tools built on top of ROS 2 that enable the interoperability of heterogeneous fleets of any type of robotic system. RMF uses standardized communication protocols to connect to the infrastructure, environments, and automation where robots are deployed, in order to optimize the use of critical resources (e.g., robots, lifts, doors, and passageways). It adds intelligence to the system through resource allocation and by preventing conflicts over shared resources through the RMF Core, which will be described in detail later in this book.

RMF is flexible and robust enough to operate over virtually any communications layer and integrate with any number of IOT devices. The architecture of RMF is designed in such a way to allow scalability as the level of automation in an environment increases. There are various ways for systems and users to interact with RMF via APIs and customizable user interfaces. Once deployed in an environment, RMF will save costs by allowing resources to be shared and integrations to be minimized. It is what robotic developers and robot customers have been looking for. In a nutshell, here is RMF:

How does RMF make the magic happen?

We will explore each of these functional areas in more detail in later chapters of this book, but for now we'd like to also introduce some of the other utilities helpful when developing and integrating with RMF.

RMF Demos

The demos are demonstrations of the capabilities of RMF in various environments. This repository serves as a starting point for working and integrating with RMF.

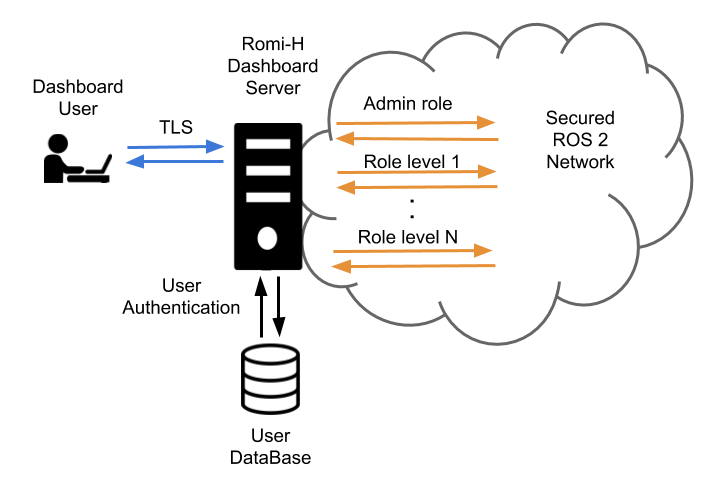

Traffic Editor

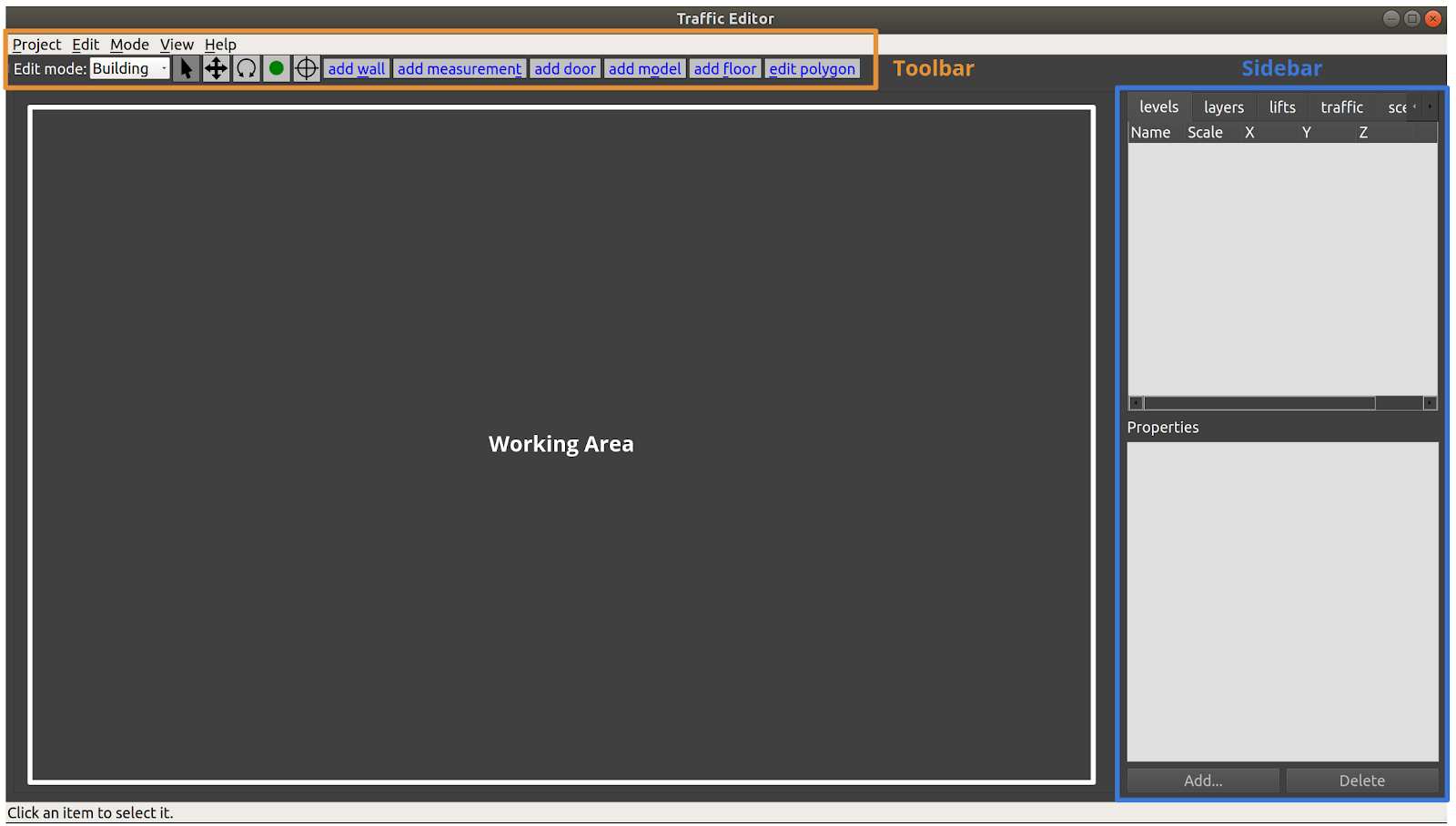

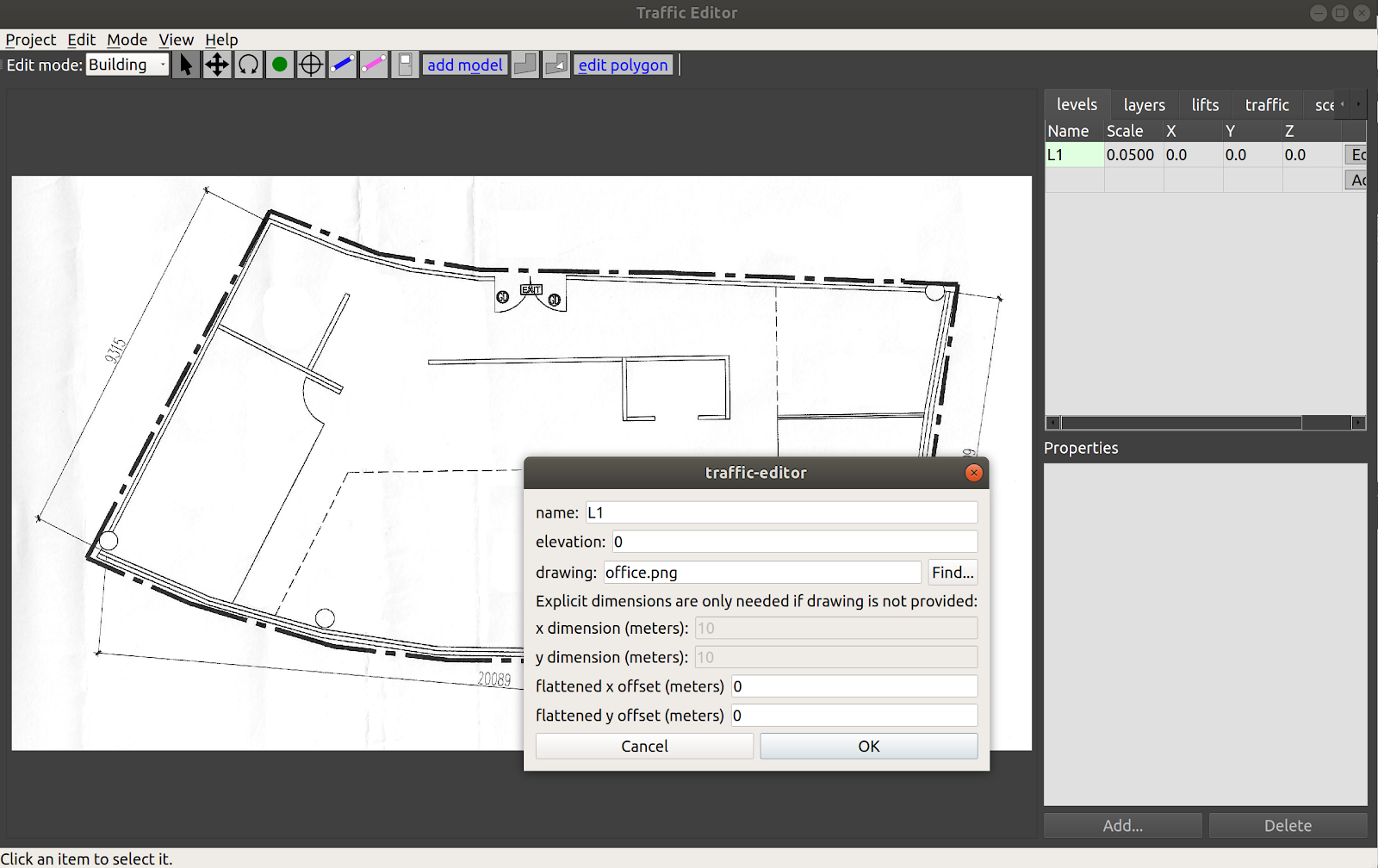

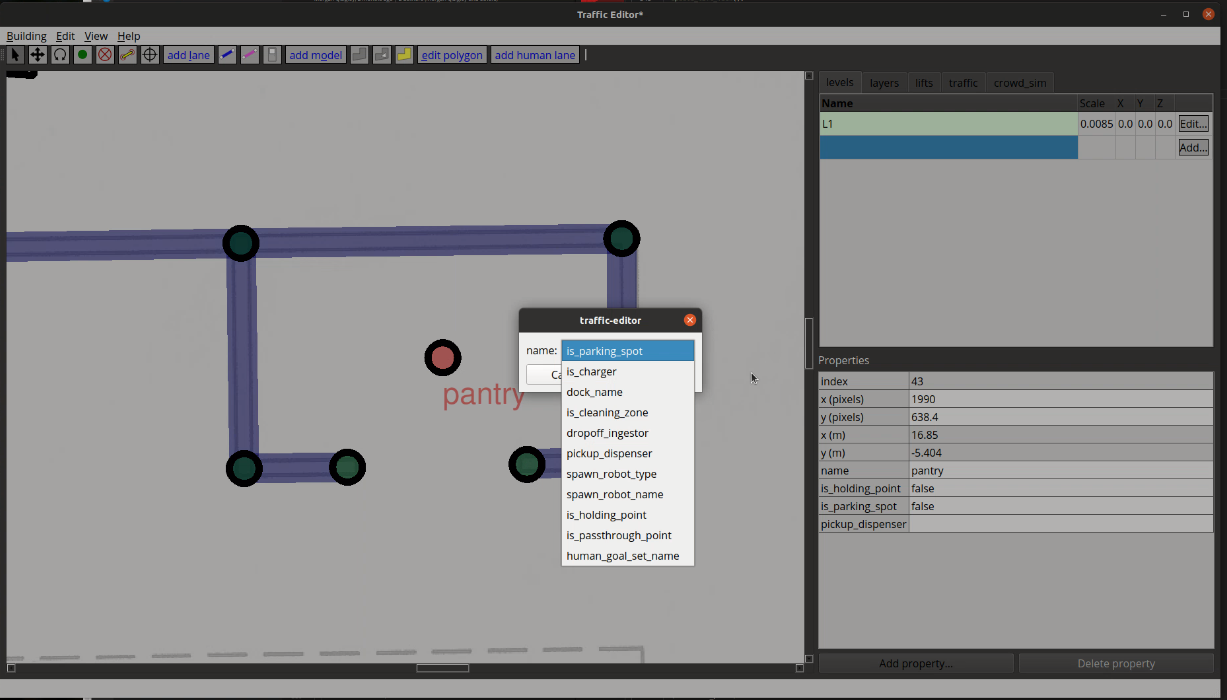

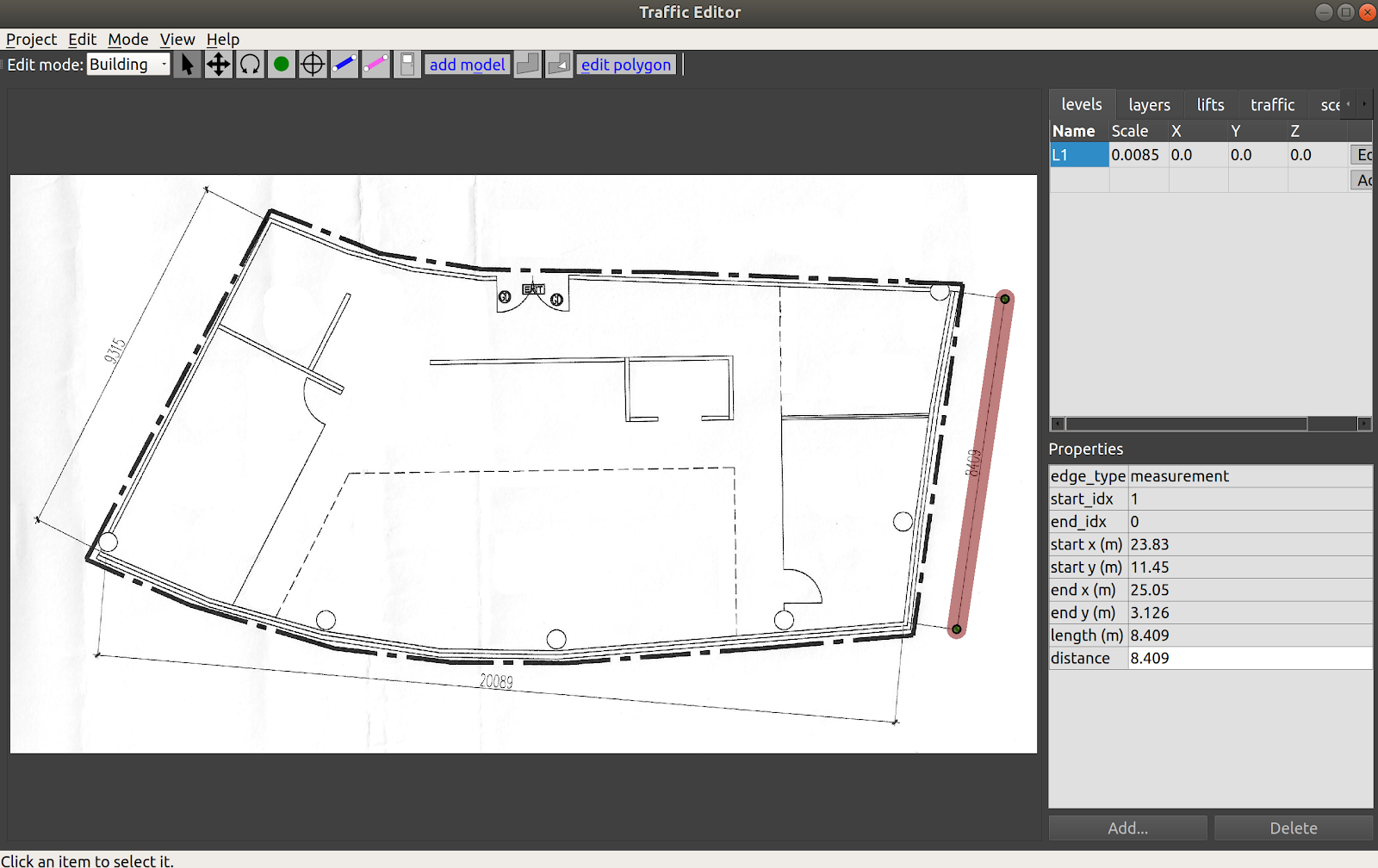

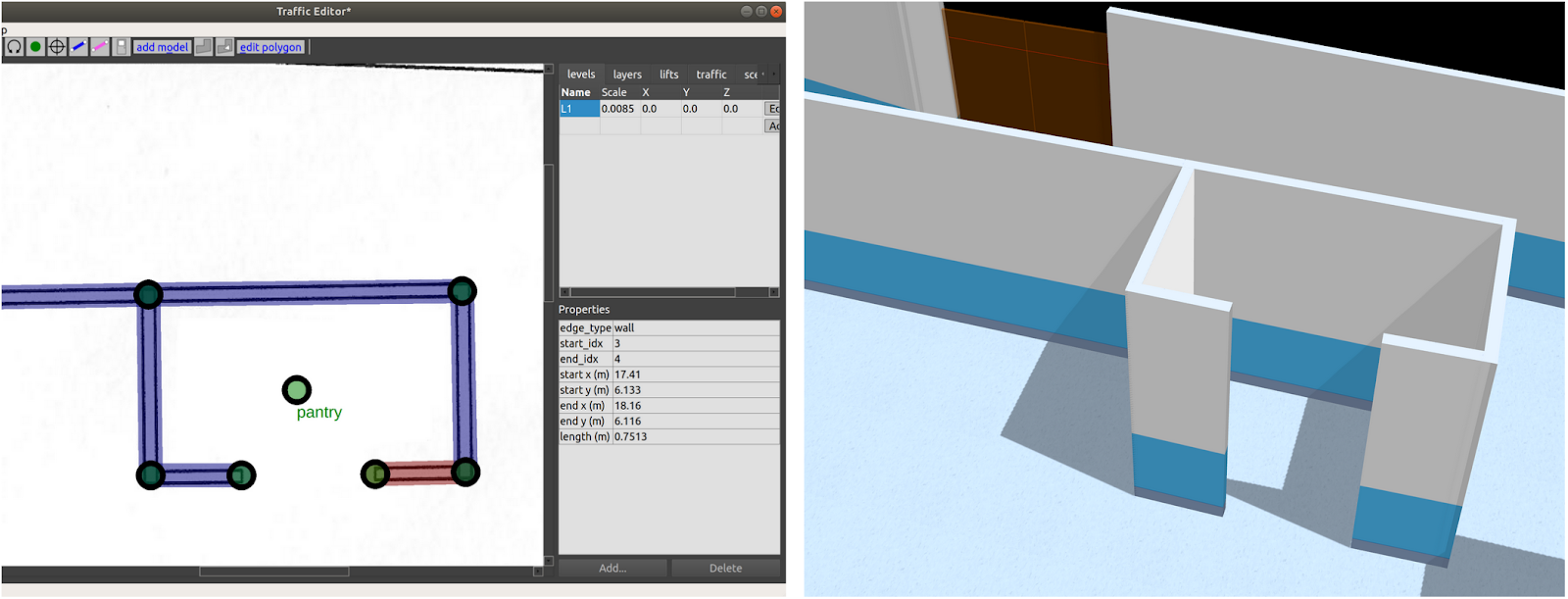

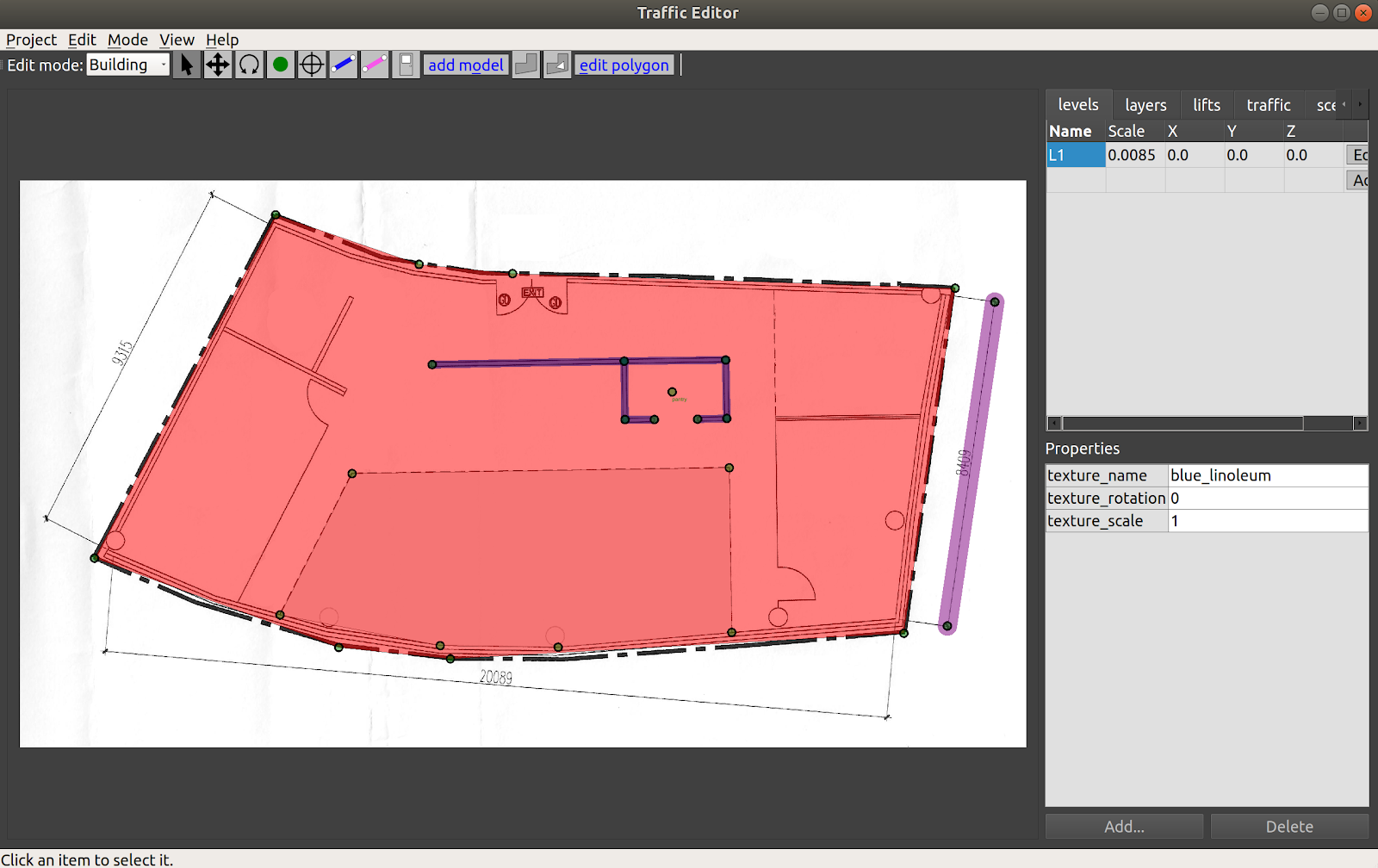

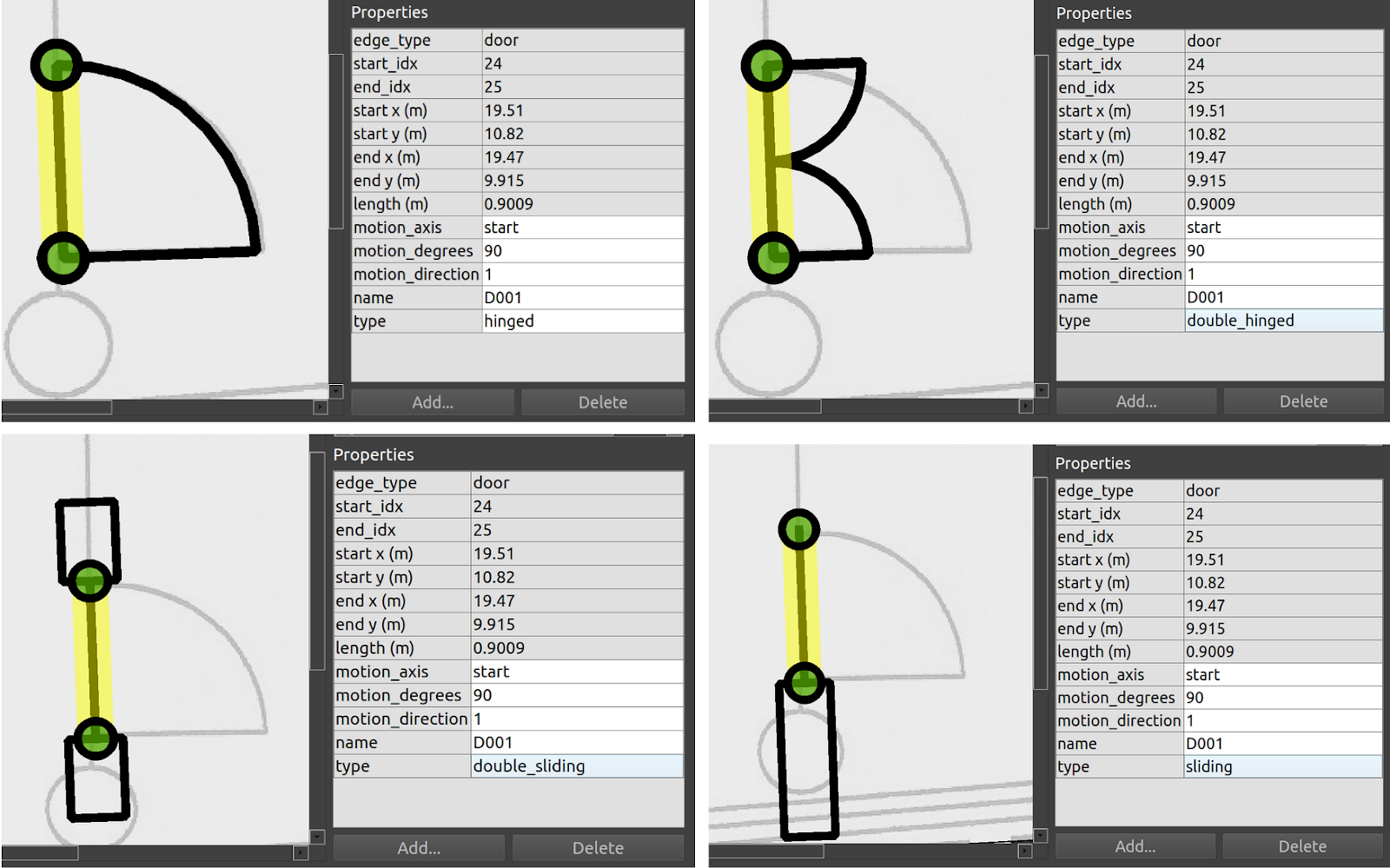

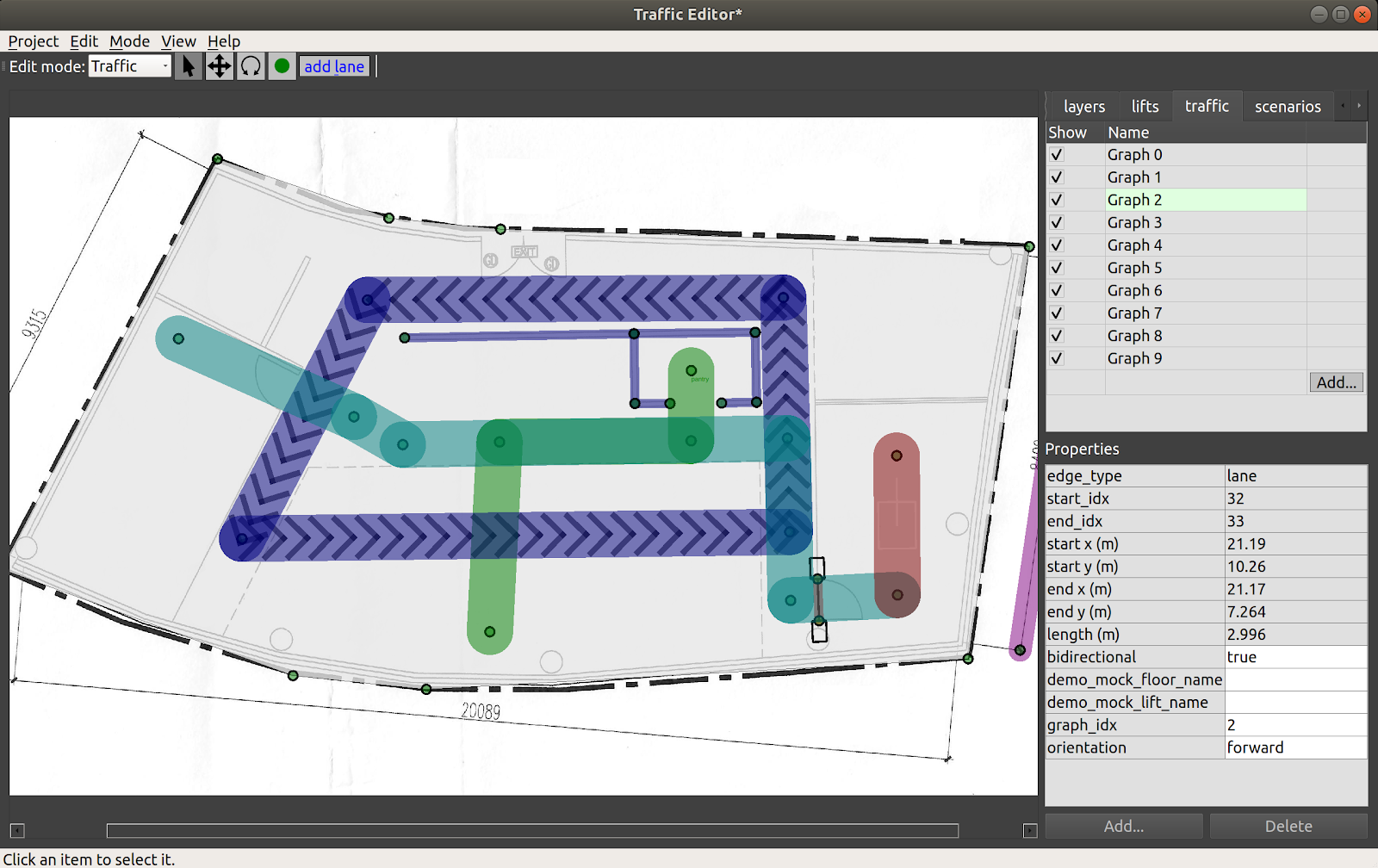

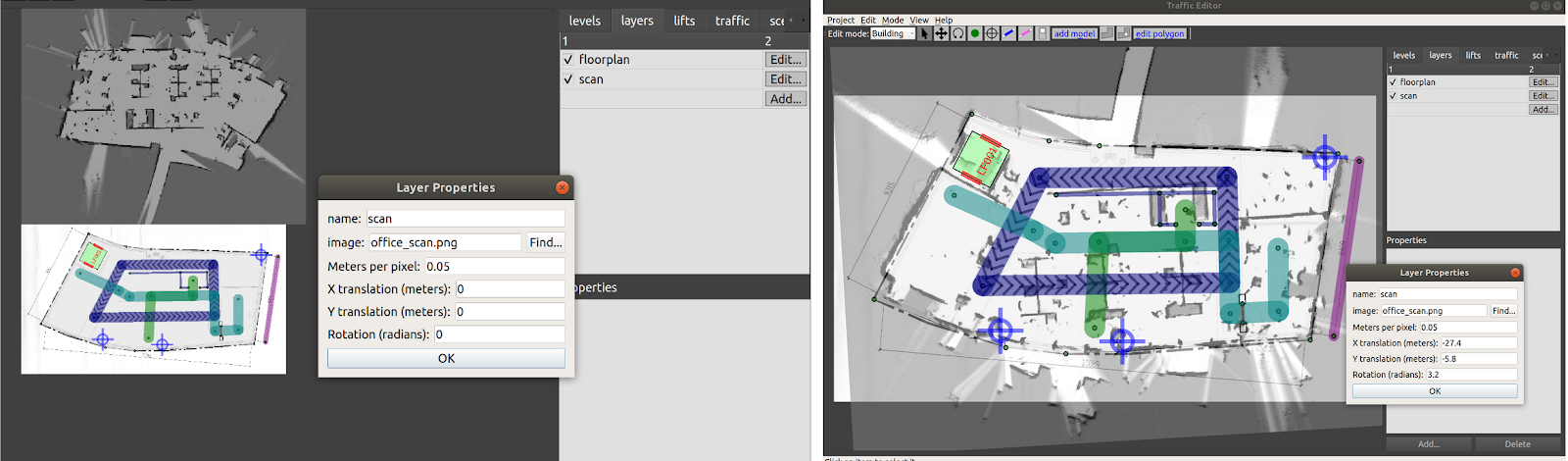

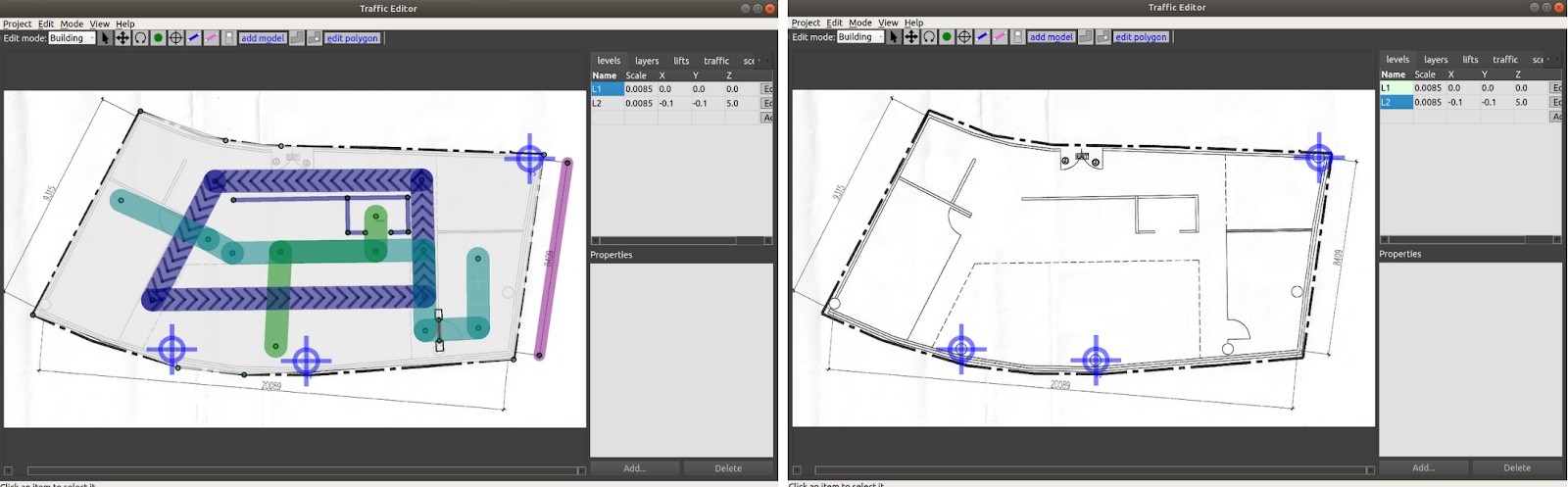

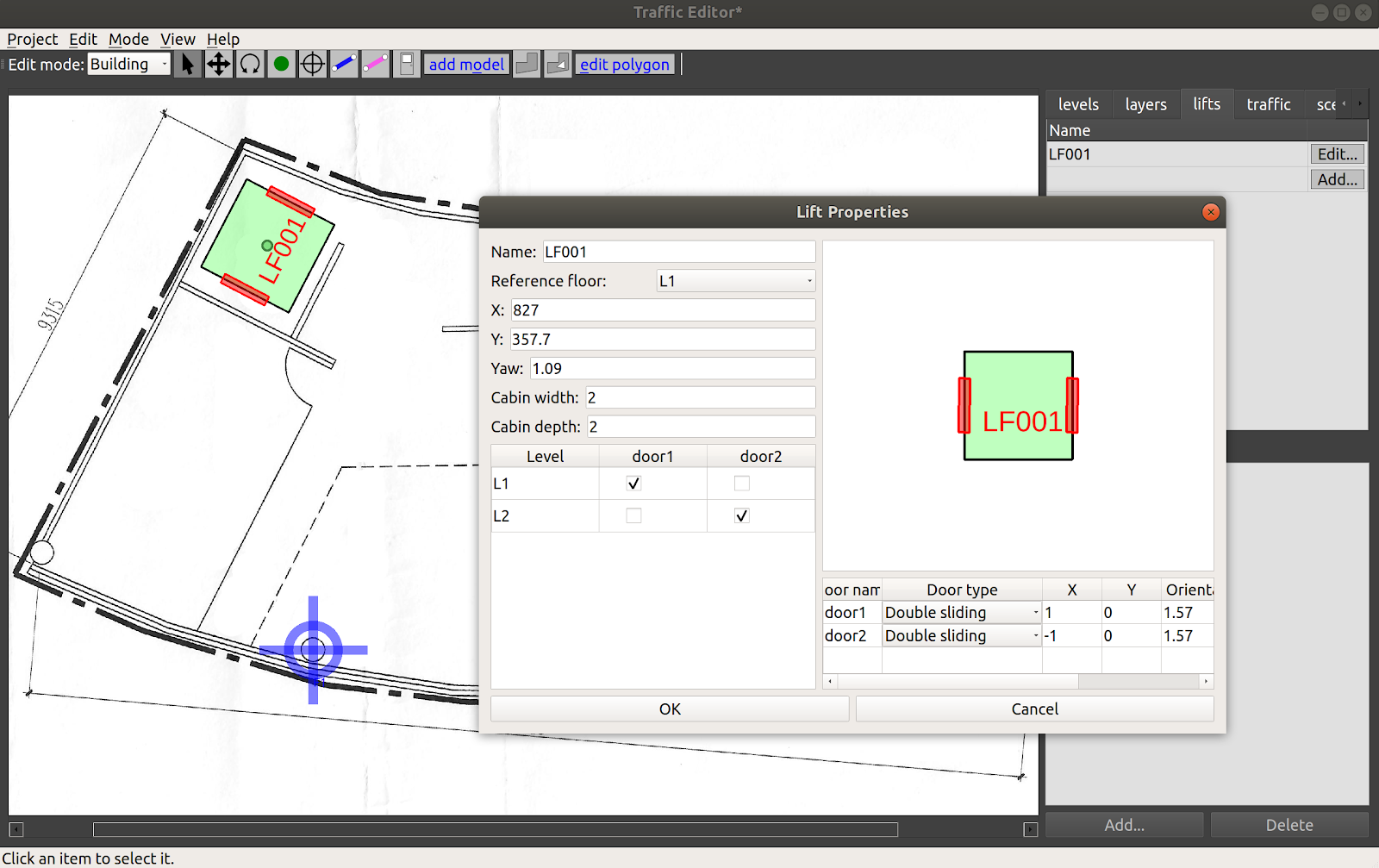

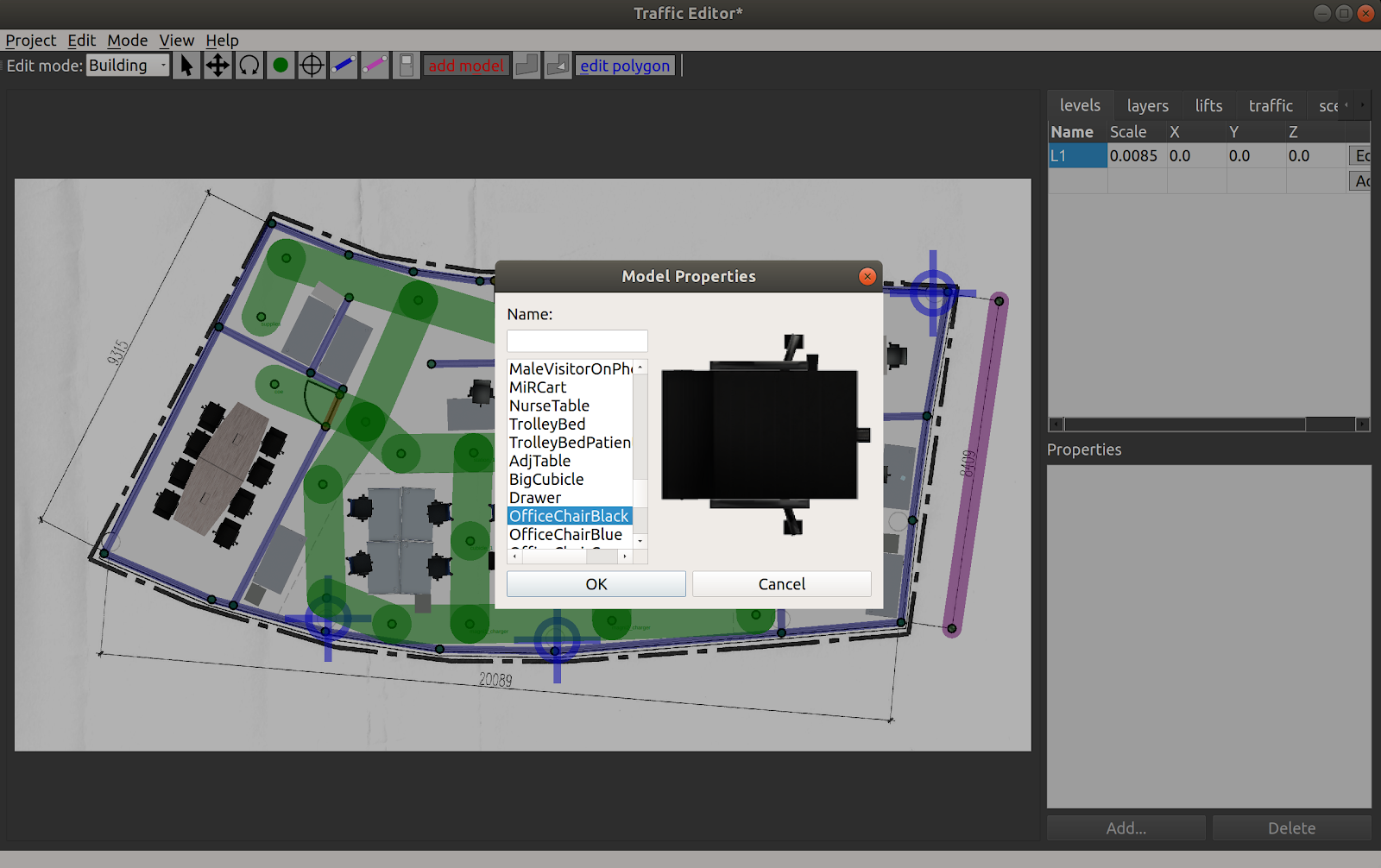

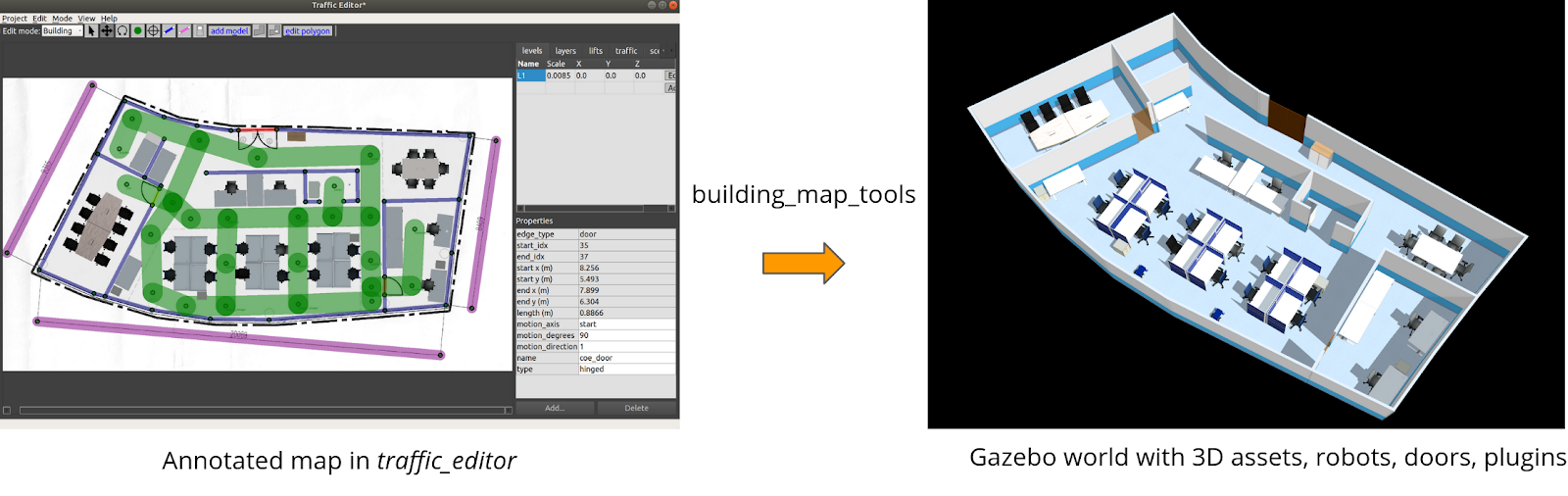

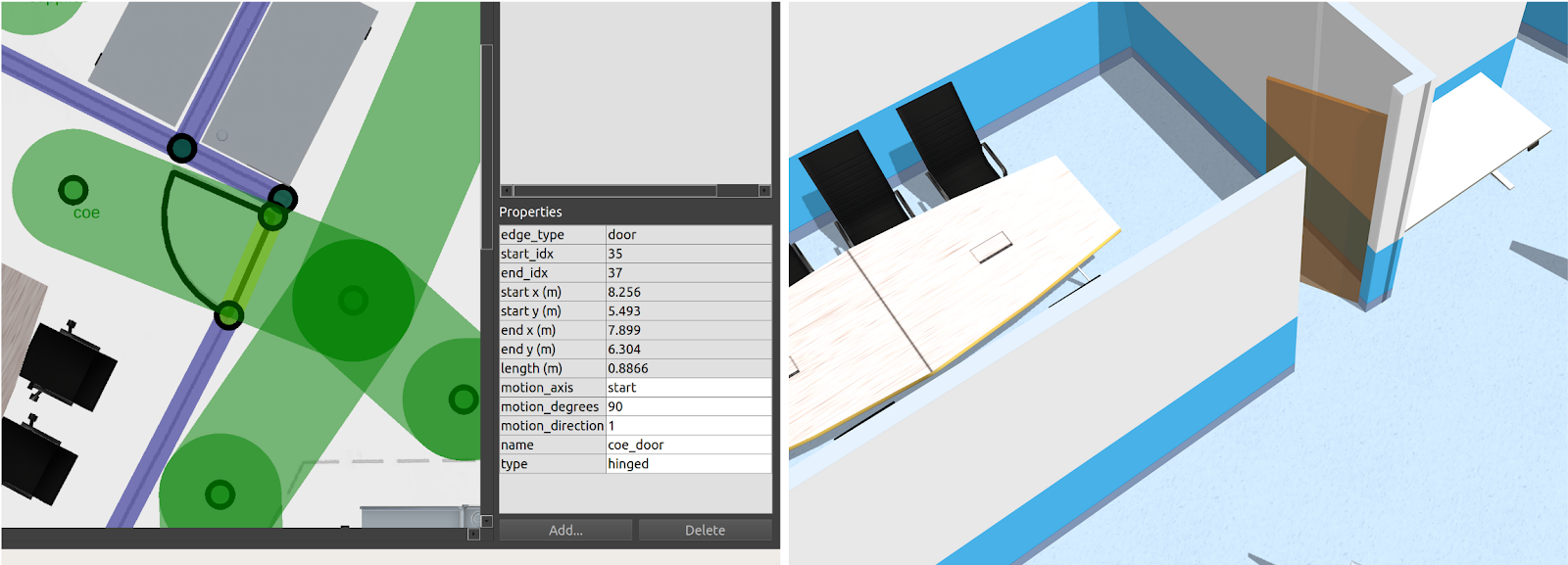

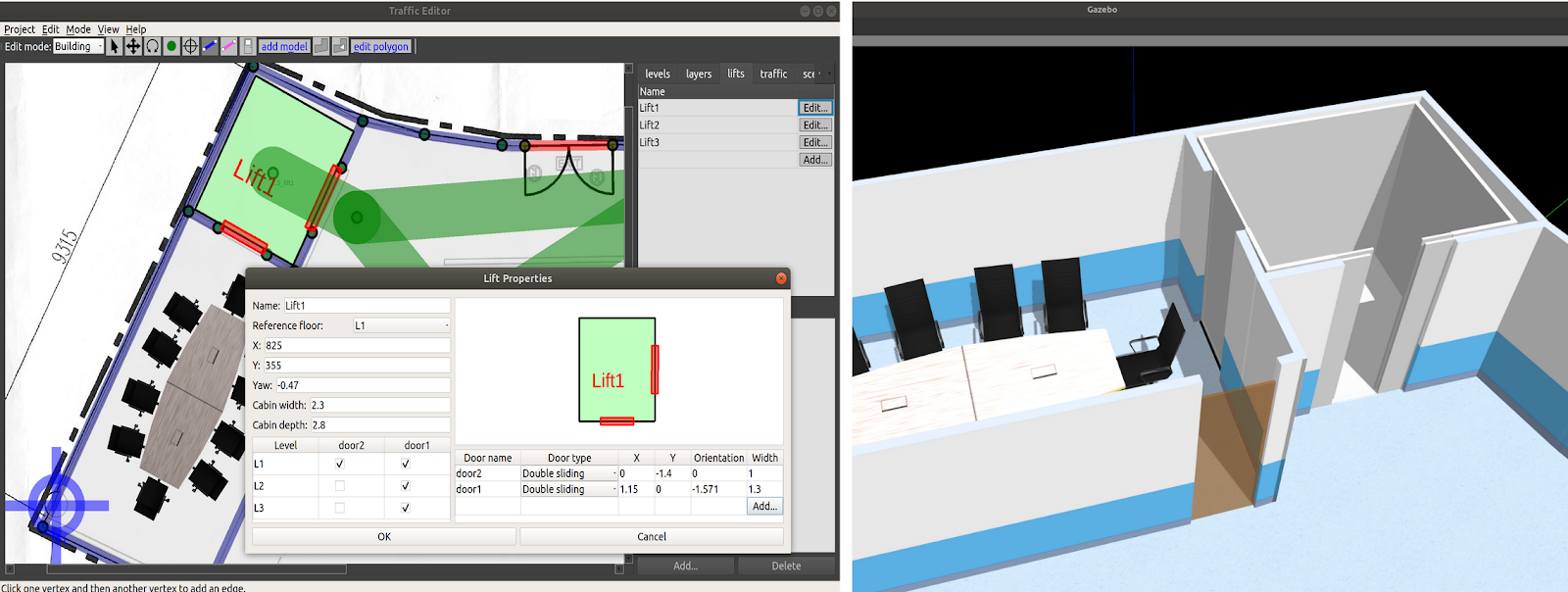

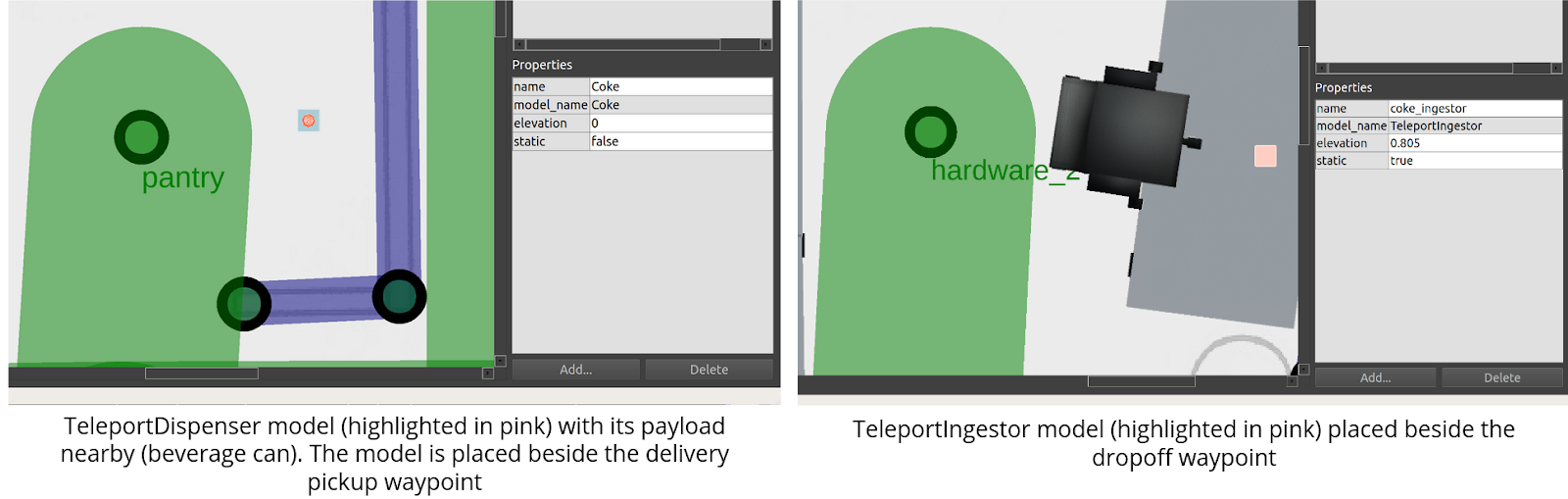

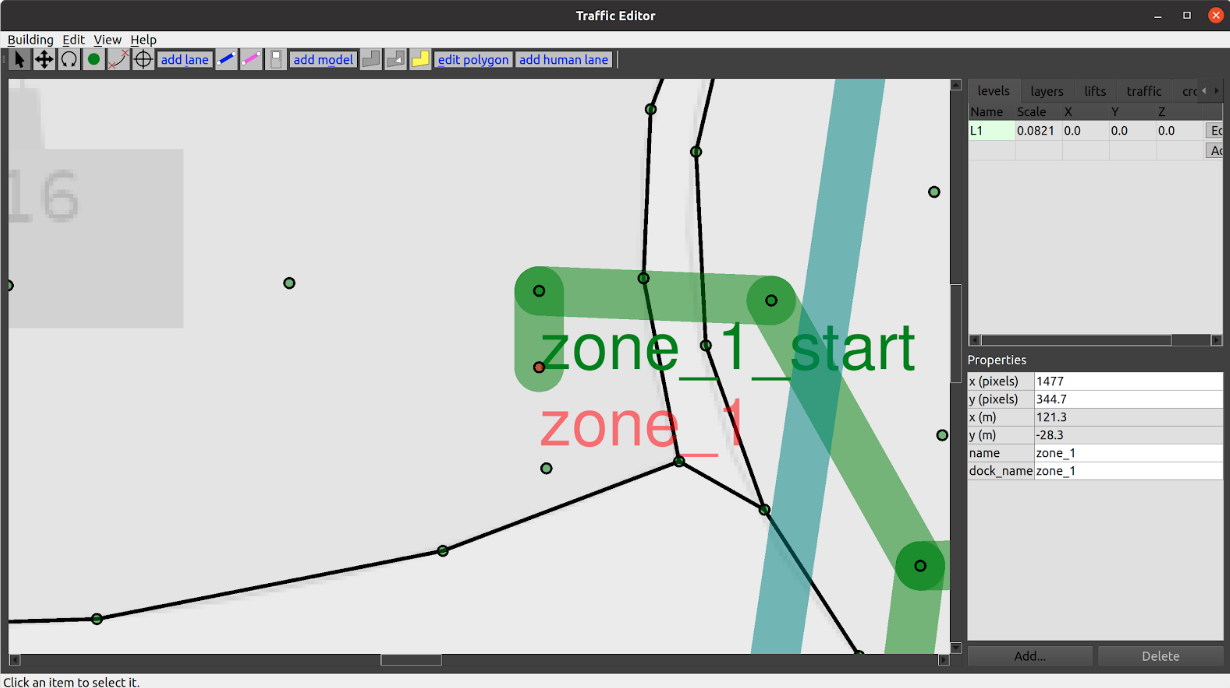

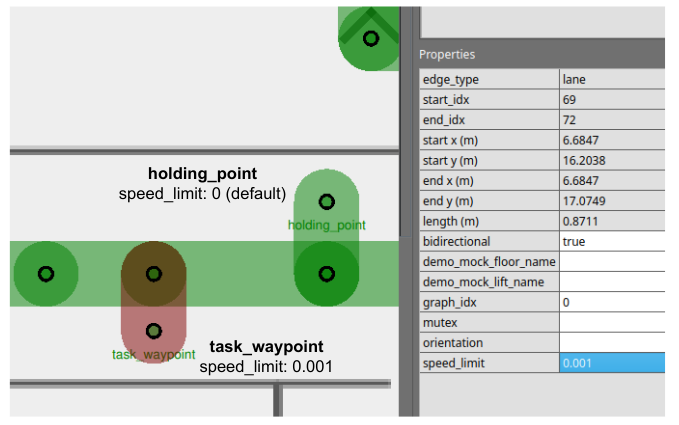

Traffic Editor is a GUI for creating and annotating floorplans for use in RMF.

Through Traffic Editor you are able to create traffic patterns for use in RMF and introduce simulation models to enhance your virtual simulation environments.

The .yaml files can be easily exported for use in Gazebo.

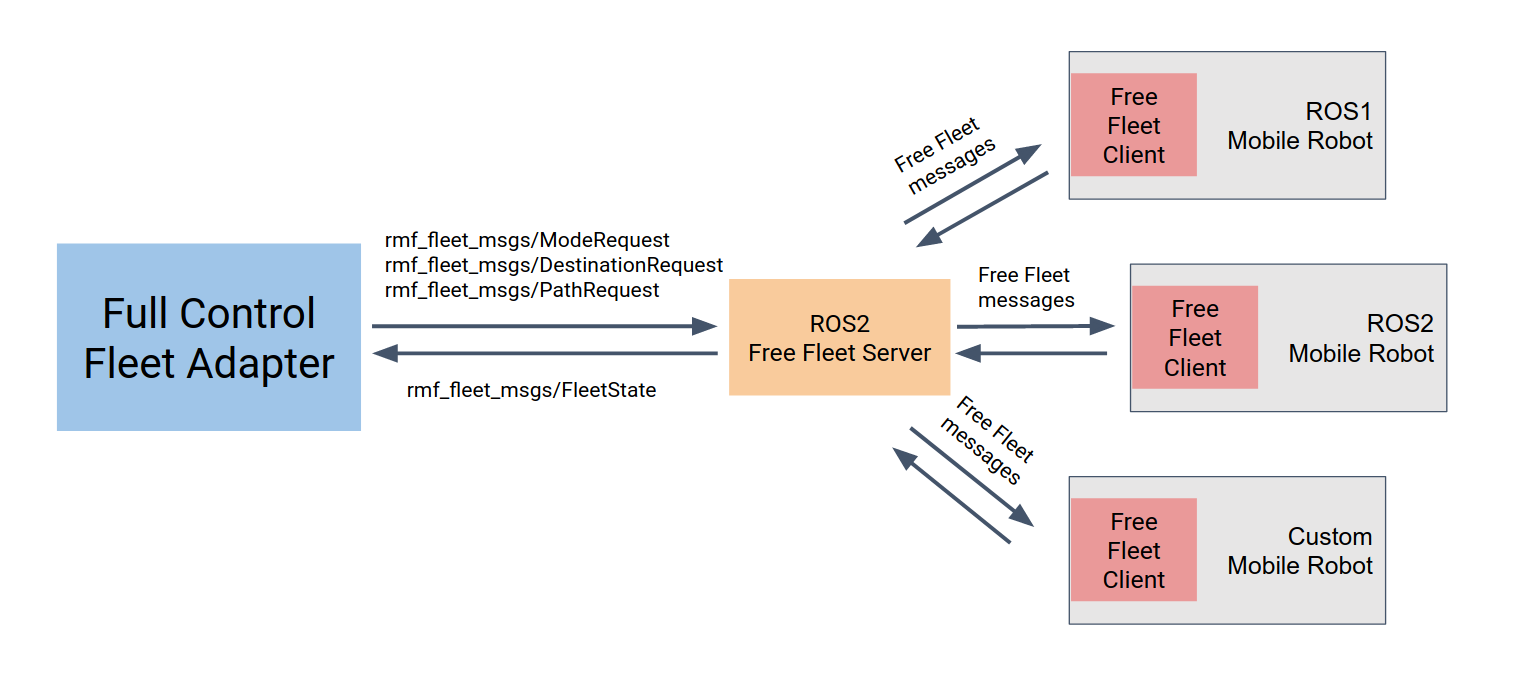

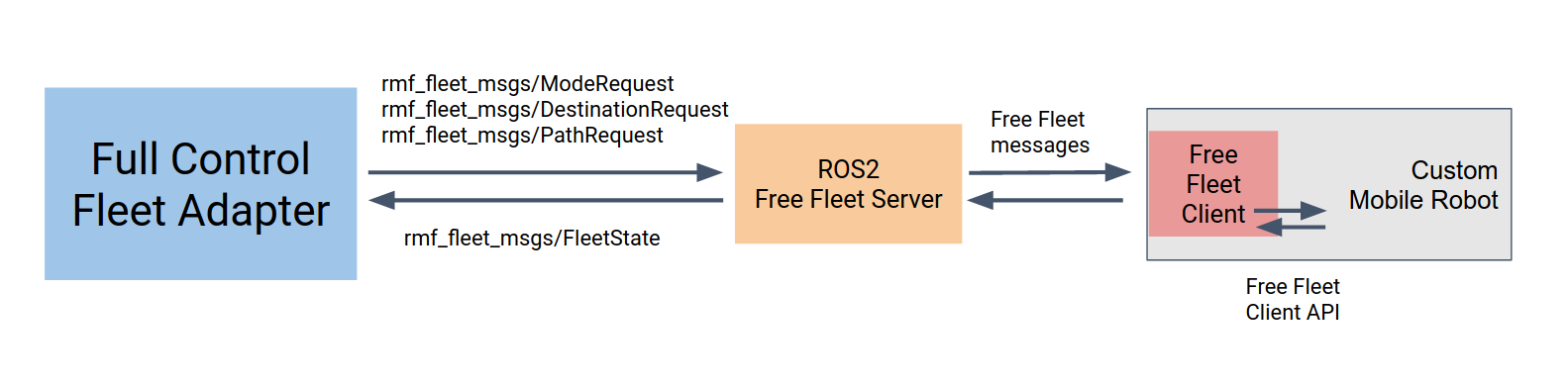

Free Fleet

Free Fleet is an open-source robot fleet management system for robot developers who do not have their own fleet manager or who would prefer to use and contribute to an open-source fleet management utility.

RMF Schedule Visualizer

This visualizer is an rviz-based rmf_core visualizer and control panel.

It is intended to be a functional tool for RMF developers.

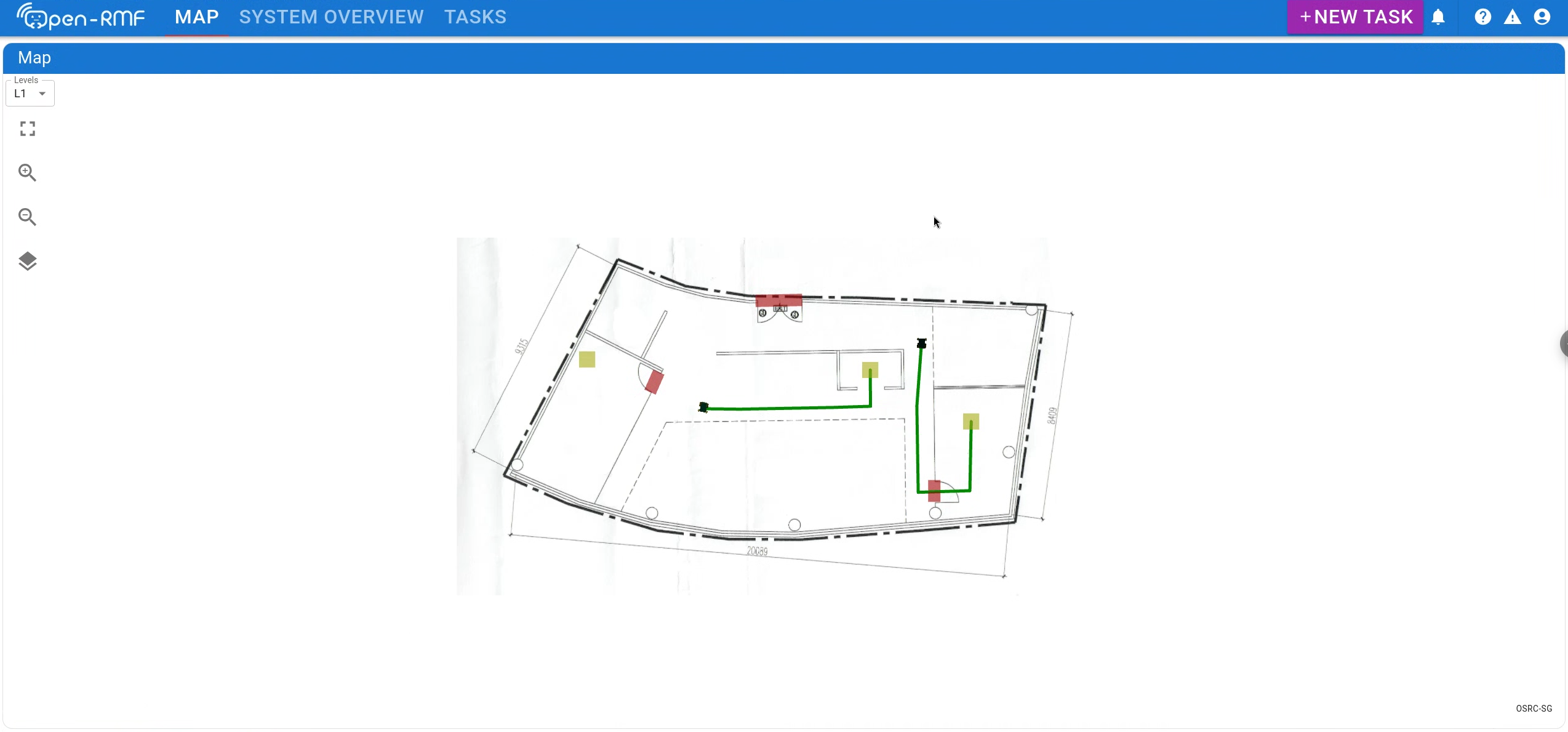

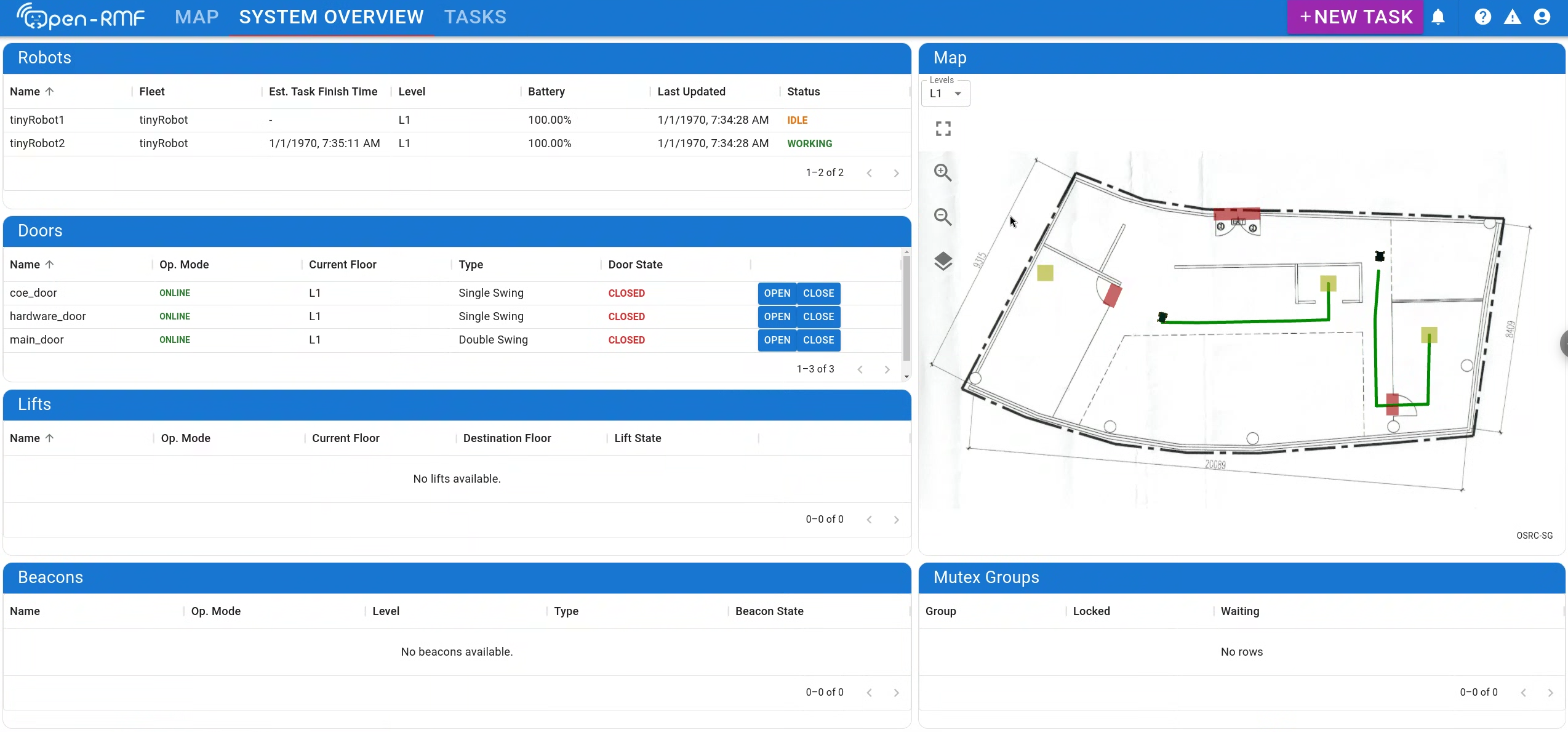

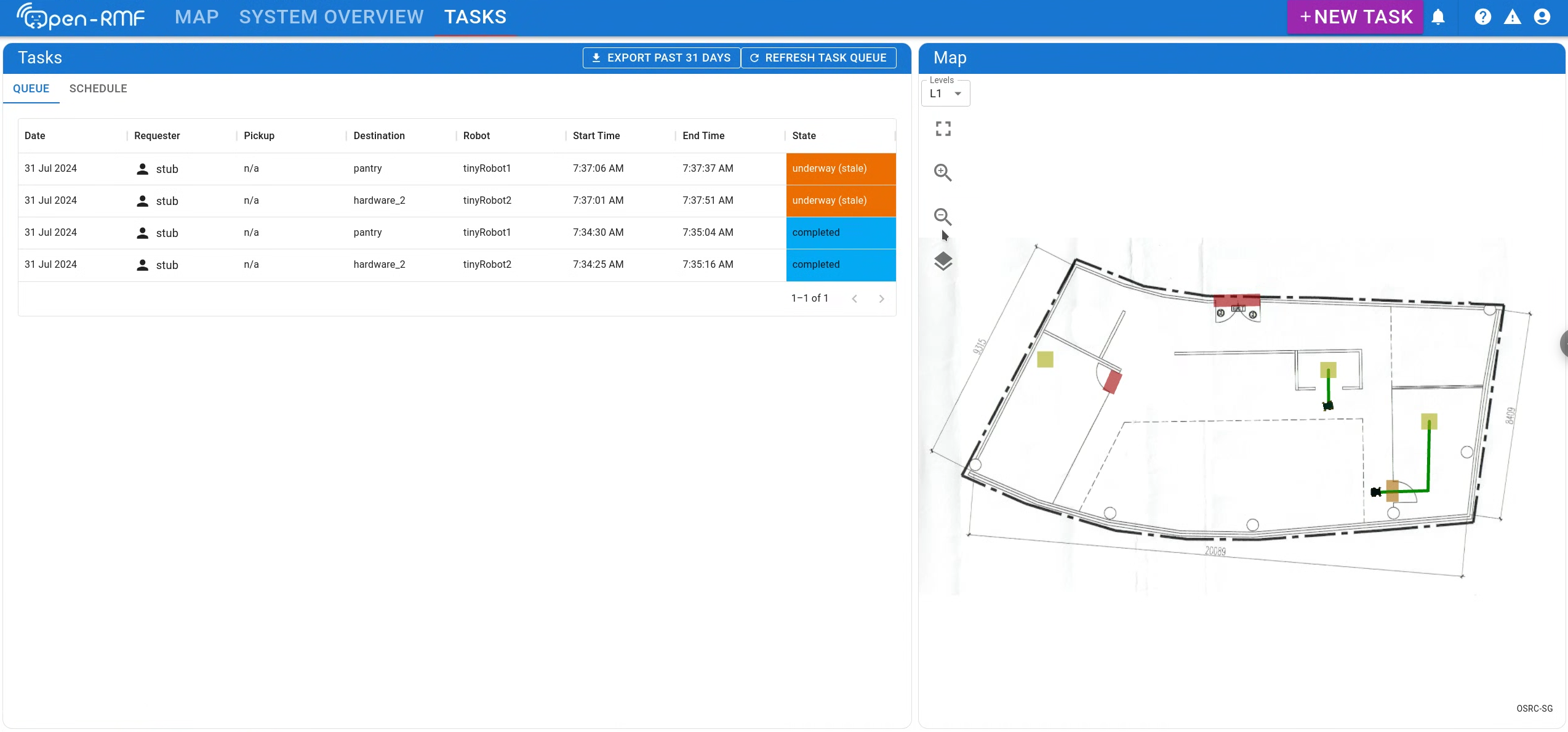

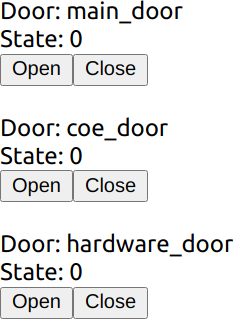

RMF Web UI

rmf-web is a configurable web application that provides overall visualization and control over the RoMi-H system. The dashboard is by design more "operator-friendly" compared to the previously mentioned schedule visualizer.

RMF Simulation

rmf_simulation contains the simulation plugins to simulate RMF. Plugins are available for Gazebo and Ignition.

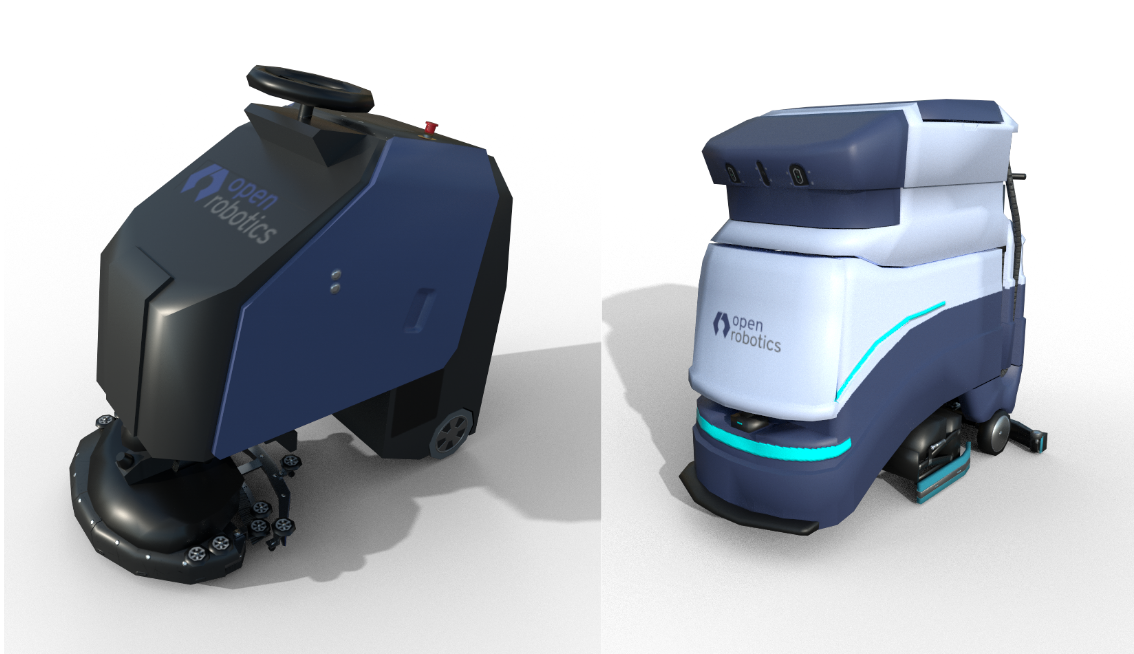

Simulation Assets

The open-source and freely distributable simulation assets are created and shared to accelerate simulation efforts.

For the latest instructions and updates please directly check the open-rmf/rmf repository.

Install ROS 2.

First, please follow the installation instructions for ROS 2. If you are on an Ubuntu 20.04 LTS machine (as recommended), here is the binary install page for ROS 2 Galactic on Ubuntu 20.04.

Setup Gazebo repositories

Set up your computer to accept Gazebo packages from packages.osrfoundation.org.

sudo apt update

sudo apt install -y wget

sudo sh -c 'echo "deb http://packages.osrfoundation.org/gazebo/ubuntu-stable `lsb_release -cs` main" > /etc/apt/sources.list.d/gazebo-stable.list'

wget https://packages.osrfoundation.org/gazebo.key -O - | sudo apt-key add -

Binary install

OpenRMF binary packages are available for Ubuntu Focal 20.04 for the Foxy, Galactic and Rolling releases of ROS 2. Most OpenRMF packages have the prefix rmf in their name, so you can find them by searching for the pattern ros-<ro2distro>-rmf:

apt-cache search ros-<ro2distro>-rmf

RMF Demos

A good way to install the RMF set of packages in one go is to install one of the main RMF Demos packages. This will pull in all the rest of the OpenRMF packages as dependencies. The core of the RMF demos is contained in the rmf_demos package. However, if you want to install it with simulation support, you should install the rmf_demos_gz or rmf_demos_ign package, which come with Gazebo or Ignition support respectively. To install the package with Gazebo support for your ROS 2 release, you would run:

sudo apt install ros-<ro2distro>-rmf-demos-gz
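The package names used above follow the usual ROS convention for Debian packages: `ros-<distro>-<package>`, with underscores in the ROS package name replaced by hyphens. The following sketch shows that mapping. The helper name `ros_debian_package` is hypothetical, and "foxy" is used only as an example distribution.

```python
def ros_debian_package(distro: str, package: str) -> str:
    """Return the Debian package name for a ROS package.

    ROS binary packages are named ros-<distro>-<package>, with the
    underscores in the ROS package name replaced by hyphens.
    """
    return f"ros-{distro}-{package.replace('_', '-')}"


# Print the install command for each simulator flavour of the demos.
for simulator_package in ("rmf_demos_gz", "rmf_demos_ign"):
    print("sudo apt install", ros_debian_package("foxy", simulator_package))
```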

Building from sources

If you want to get the latest developments you might want to install from sources and compile OpenRMF yourself.

Additional Dependencies

Install all non-ROS dependencies of OpenRMF packages,

sudo apt update && sudo apt install \
  git cmake python3-vcstool curl \
  qt5-default \
  -y

python3 -m pip install flask-socketio

sudo apt-get install python3-colcon*

Install rosdep

rosdep helps install dependencies for ROS packages across various distros. It can be installed with:

sudo apt install python3-rosdep

sudo rosdep init

rosdep update

Download the source code

Set up a new ROS 2 workspace and pull in the demo repositories using vcs:

mkdir -p ~/rmf_ws/src

cd ~/rmf_ws

wget https://raw.githubusercontent.com/open-rmf/rmf/main/rmf.repos

vcs import src < rmf.repos

Ensure all ROS 2 prerequisites are fulfilled,

cd ~/rmf_ws

rosdep install --from-paths src --ignore-src --rosdistro <ro2distro> -y

Compiling Instructions

NOTE: Due to newer changes in the source build, there might be conflicts and compilation errors with older header files installed by the binaries. Please remove the binary installations before building from source, using

sudo apt remove ros-<ro2distro>-rmf*

Compiling on Ubuntu 20.04:

cd ~/rmf_ws

source /opt/ros/<ro2distro>/setup.bash

colcon build --cmake-args -DCMAKE_BUILD_TYPE=Release

NOTE: The first time the build occurs, many simulation models will be downloaded from Ignition Fuel to populate the scene when the simulation is run. As a result, the first build can take a very long time depending on the server load and your Internet connection (for example, 60 minutes).

Run RMF Demos

Demonstrations of OpenRMF are shown in rmf_demos.

Docker Containers

Alternatively, you can run RMF Demos by using docker.

Pull the Docker image from the open-rmf/rmf GitHub container registry (see the registry's setup instructions).

docker pull ghcr.io/open-rmf/rmf/rmf_demos:latest

docker tag ghcr.io/open-rmf/rmf/rmf_demos:latest rmf:latest

Run it!

docker run -it --network host rmf:latest bash -c "export ROS_DOMAIN_ID=9; ros2 launch rmf_demos_gz office.launch.xml headless:=1"

This will run rmf_demos in headless mode. Open this link with a browser to start a task.

(Experimental) Users can also run rmf_demos in “non-headless” graphical form via rocker.

Roadmap

A near-term roadmap of the entire OpenRMF project (including and beyond rmf_traffic) can be found in the user manual here.

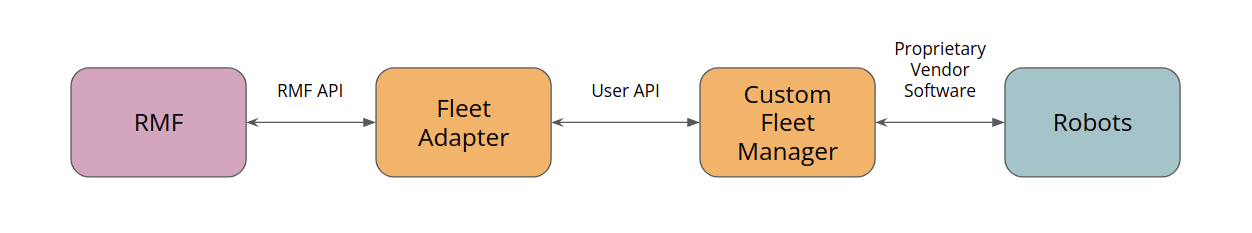

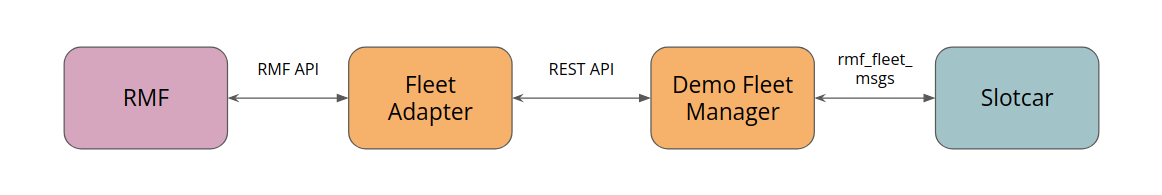

Integrating with RMF

Instructions on how to integrate your system with OpenRMF can be found here.

Open sourced fleet adapters

A number of commercial robots have been integrated with RMF and links to their adapters are available below.

- Gaussian Ecobots

- OTTO Motors (and robots running the Clearpath Autonomy stack)

- Mobile Industrial Robots: MiR

- Temi: the personal robot

Help us add to this list!

A helpful starting point for integrating your fleet with RMF is the fleet_adapter_template package.

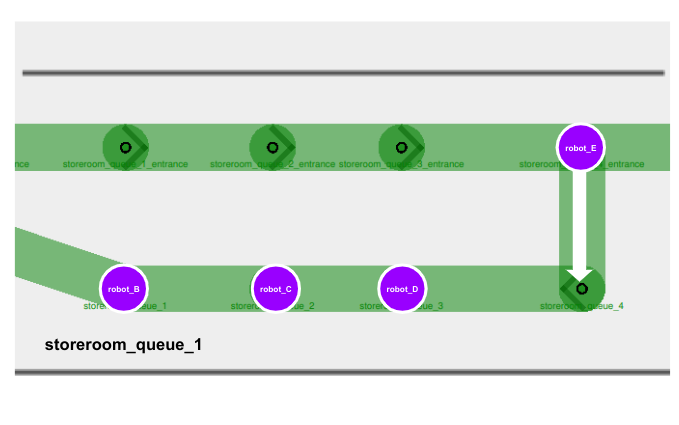

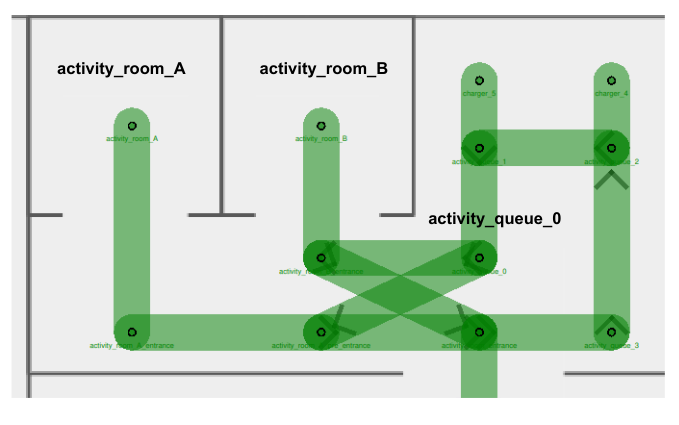

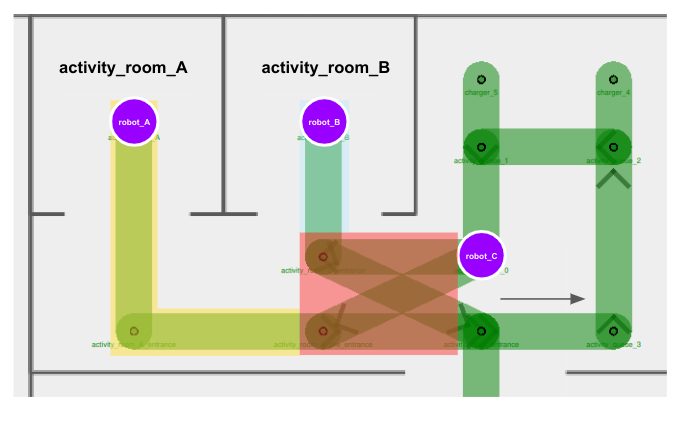

Demos

In this chapter, we will briefly demonstrate the capability of RMF with rmf_demos.

This will give users a brief understanding of the core features of RMF.

For the most up-to-date instructions on running rmf_demos, please refer to the rmf_demos repository.

First, make sure you have installed the provided RMF demos from their Debian package:

# Demos example with gazebo simulator, use ros-foxy-rmf-demos-ign for ignition

sudo apt-get install ros-foxy-rmf-demos-gz

Run your desired demo. In this case we will run the airport terminal. Before launching it, we can make sure all required models are downloaded locally by running the first command below:

ros2 run rmf_building_map_tools model_downloader rmf_demos_maps -s airport_terminal

ros2 launch rmf_demos_gz airport_terminal.launch.xml

# or with ignition

ros2 launch rmf_demos_ign airport_terminal.launch.xml

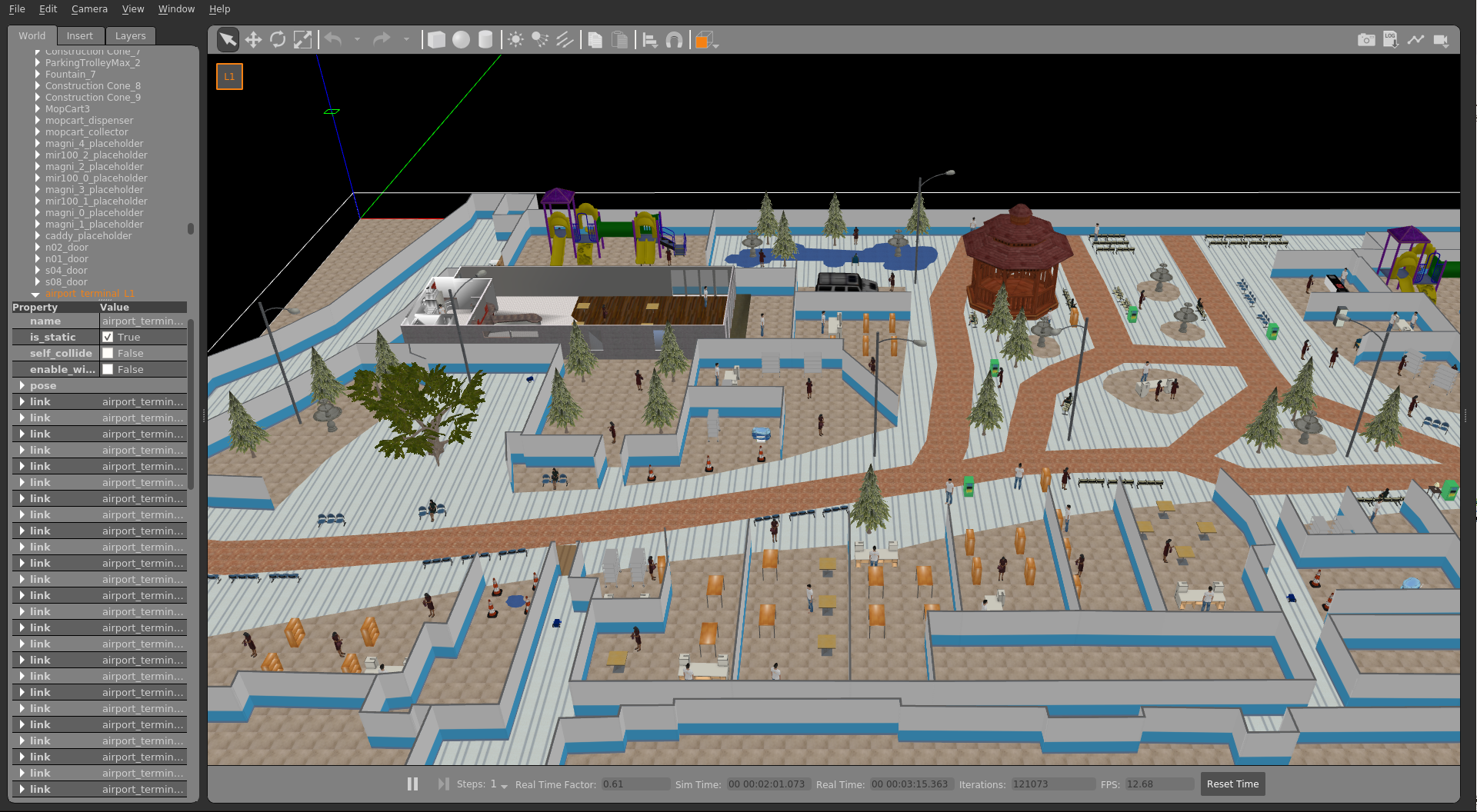

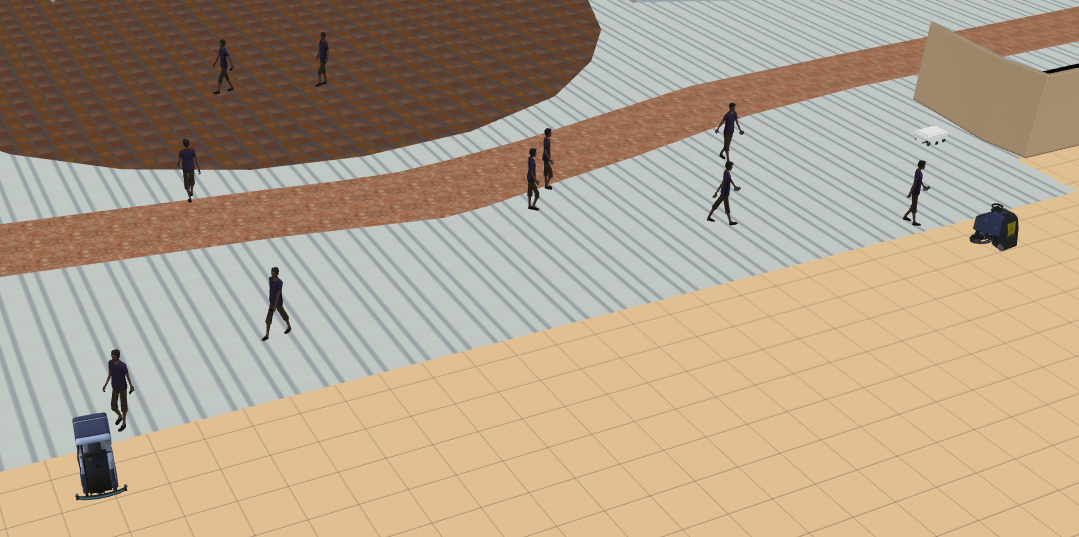

Now you should be able to see the airport terminal with the robots in Gazebo:

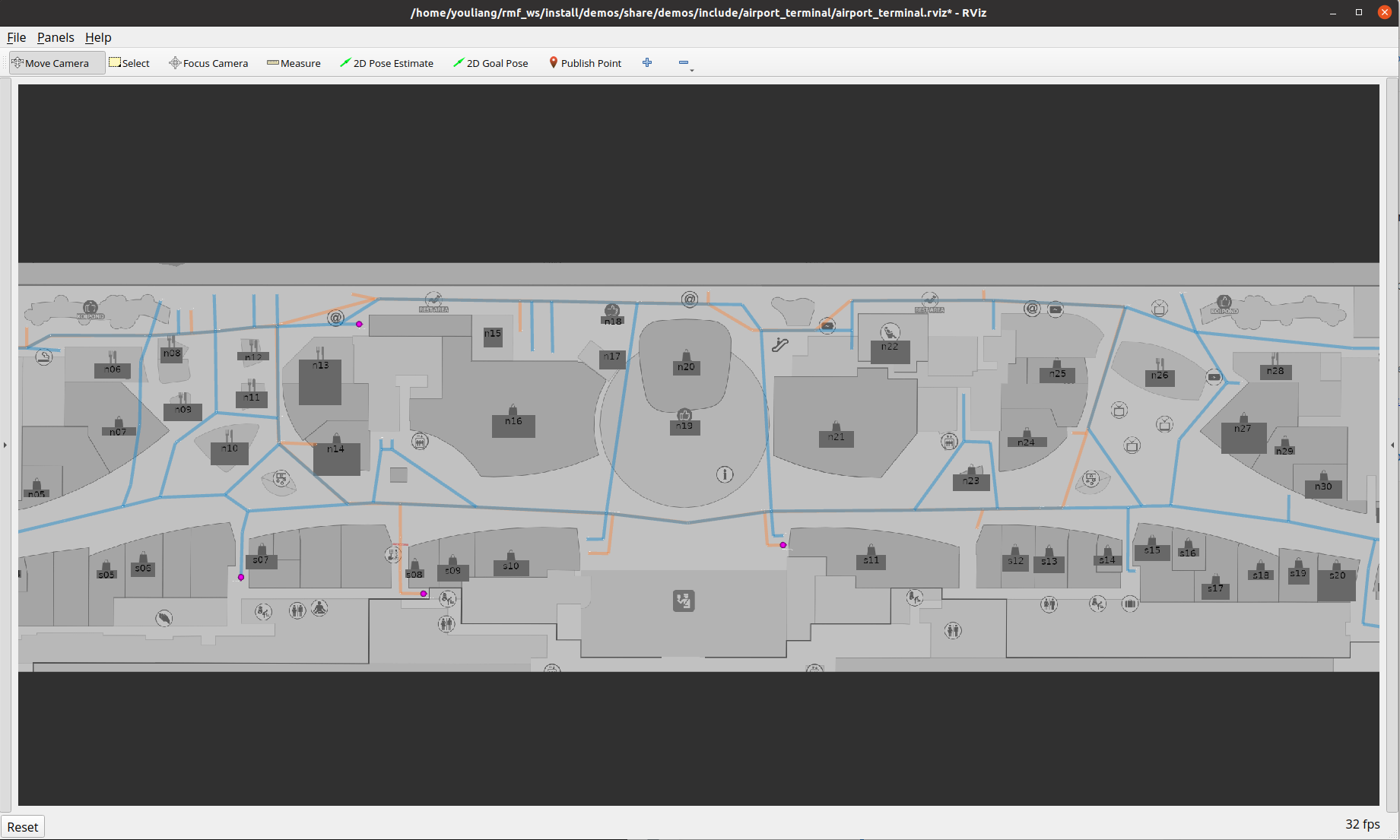

The RMF Schedule Visualizer should have loaded in an rviz window. This canvas will display all integrated robots or infrastructure which are available to RMF.

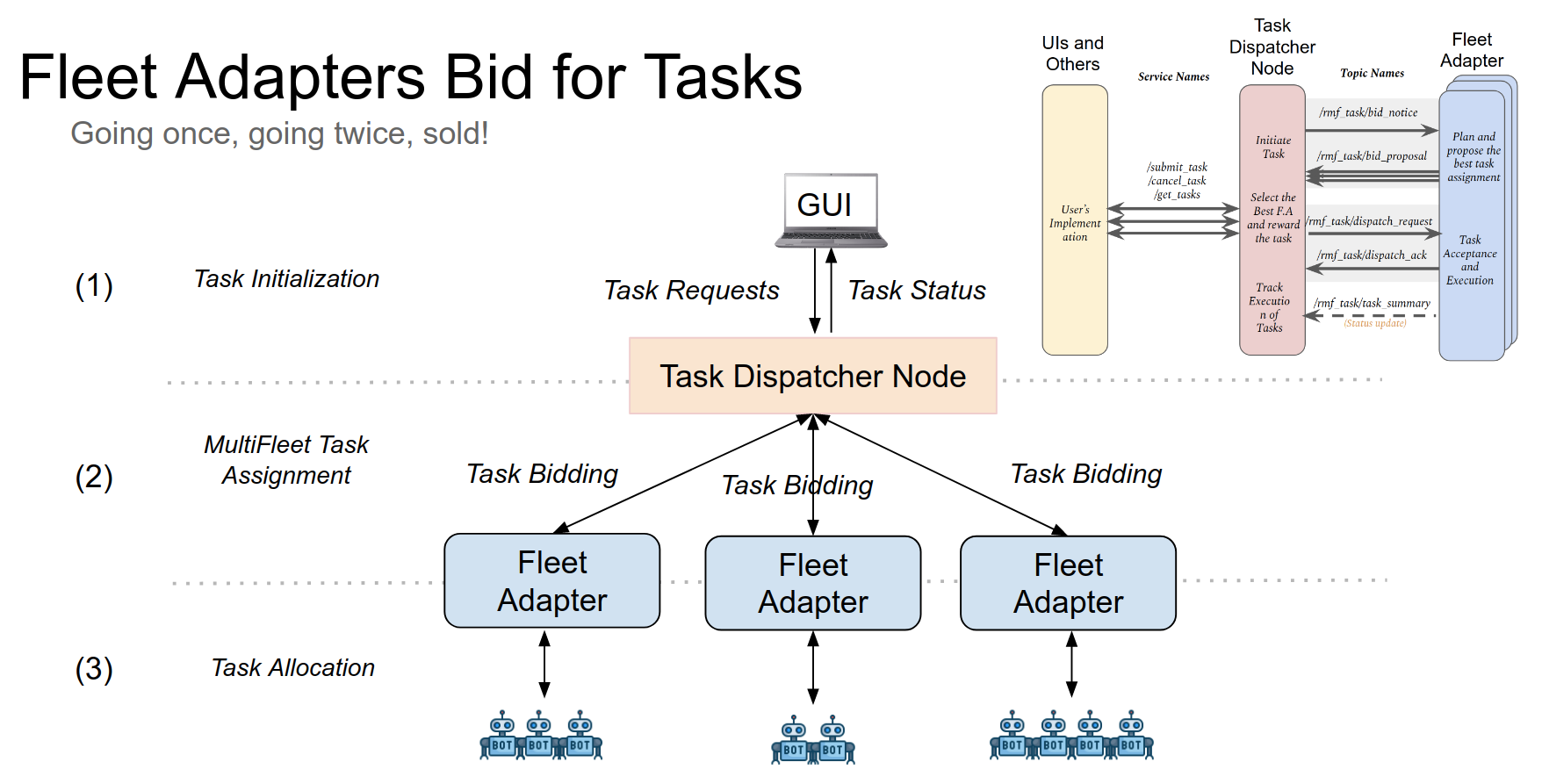

During a task request, instead of requiring the user to specify the robot name to complete a task, RMF will assign the task to the best robot.
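As a rough illustration of what "assigning the task to the best robot" can mean, the sketch below picks the idle robot closest to a pickup point. This is an assumption-laden toy, not RMF's dispatcher: the `assign_task` function, the fleet data, and the use of straight-line distance as the cost are all hypothetical.

```python
import math


def assign_task(robots: dict, pickup: tuple) -> str:
    """Return the name of the idle robot closest to the pickup point.

    `robots` maps a robot name to a dict with "position" (x, y) and "busy".
    Straight-line distance is an assumed stand-in for a real cost estimate.
    """
    idle = {name: r for name, r in robots.items() if not r["busy"]}
    if not idle:
        raise ValueError("no idle robot available")
    return min(idle, key=lambda name: math.dist(idle[name]["position"], pickup))


fleet = {
    "tinyRobot_1": {"position": (0.0, 0.0), "busy": False},
    "tinyRobot_2": {"position": (9.0, 1.0), "busy": False},
    "deliveryRobot_1": {"position": (8.0, 2.0), "busy": True},
}

# The user only describes the task; the robot is chosen automatically.
print(assign_task(fleet, pickup=(10.0, 0.0)))
```

The point of the design is that the requester never names a robot, so fleets can grow or change without changing how tasks are submitted.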

RMF currently supports three types of task: loop, delivery, and clean. Users can submit a task via the CLI:

Loop Task

ros2 run rmf_demos_tasks dispatch_loop -s s07 -f n12 -n 3 --use_sim_time

Delivery Task

ros2 run rmf_demos_tasks dispatch_delivery -p mopcart_pickup -pd mopcart_dispenser -d spill -di mopcart_collector --use_sim_time

Clean Task

ros2 run rmf_demos_tasks dispatch_clean -cs zone_3 --use_sim_time

Now you can observe robots roaming around the airport space!
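If you want to submit tasks from a script rather than by typing them, the commands above can be assembled programmatically. The sketch below rebuilds the loop-task command using only the flags shown above. The helper name `dispatch_loop_command` is hypothetical, and actually running the command requires a sourced ROS 2 environment with the demo already launched.

```python
import shlex


def dispatch_loop_command(start: str, finish: str, loops: int,
                          use_sim_time: bool = True) -> list:
    """Build the argument list for the loop-task command shown above."""
    argv = ["ros2", "run", "rmf_demos_tasks", "dispatch_loop",
            "-s", start, "-f", finish, "-n", str(loops)]
    if use_sim_time:
        argv.append("--use_sim_time")
    return argv


argv = dispatch_loop_command("s07", "n12", 3)
print(shlex.join(argv))

# To actually send the request (needs ROS 2 sourced and rmf_demos running):
# import subprocess
# subprocess.run(argv, check=True)
```

Passing an argument list instead of a single string avoids shell quoting problems if a waypoint name ever contains unusual characters.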

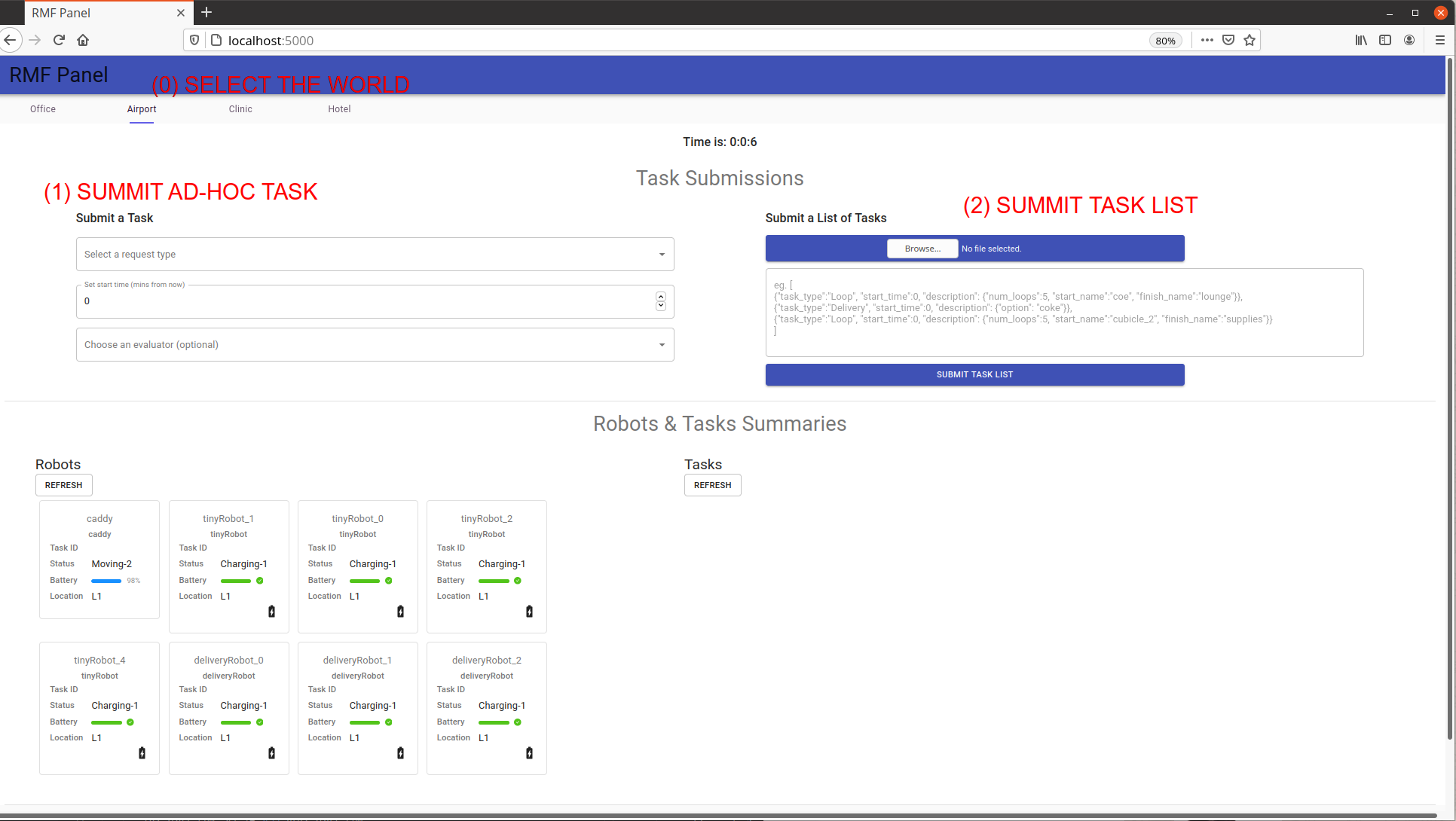

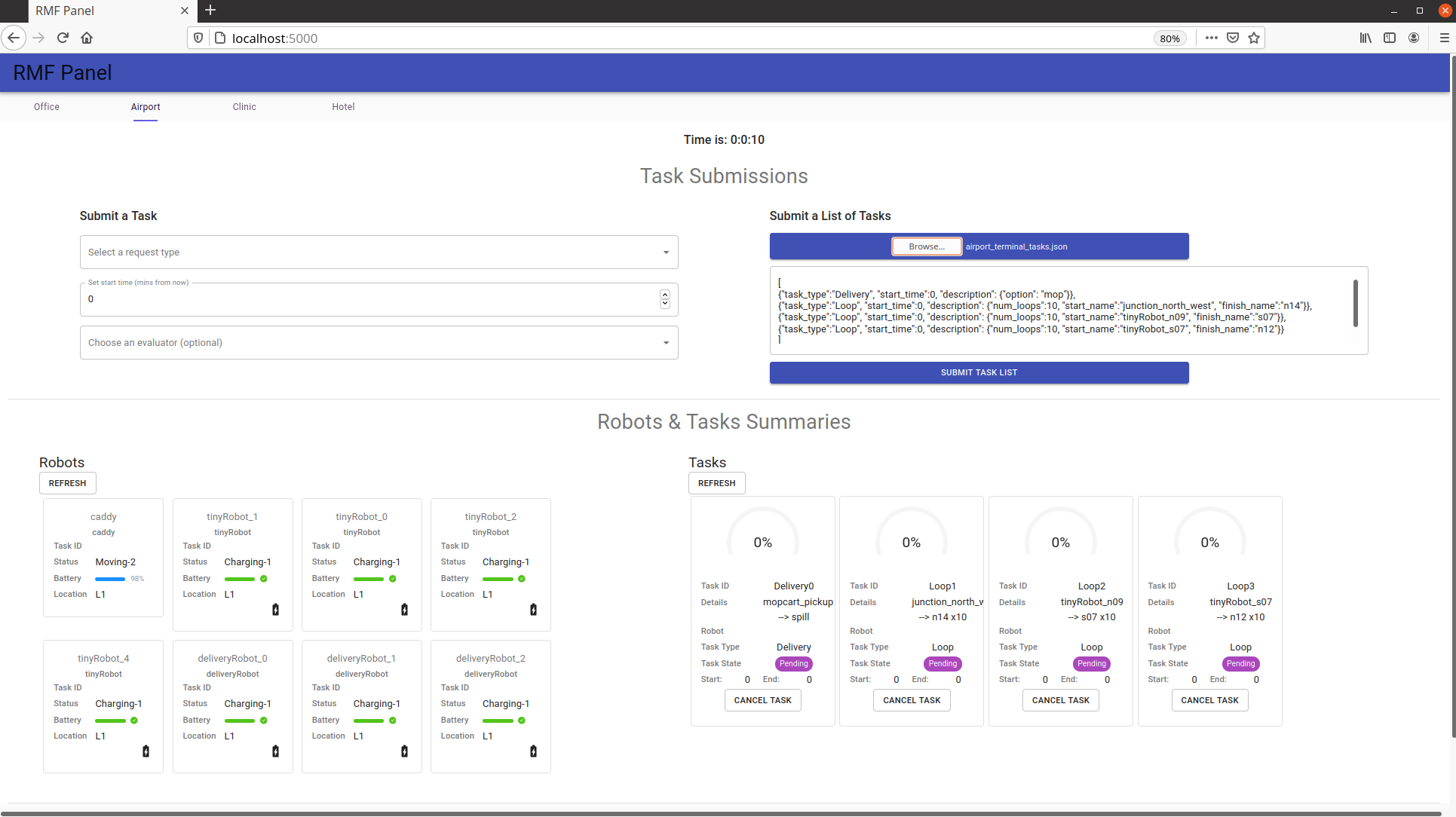

rmf_panel

Another way to observe and interact with RMF is through the web-based rmf_panel. Open https://open-rmf.github.io/rmf-panel-js/ in a browser (for example, by running firefox https://open-rmf.github.io/rmf-panel-js/).

You can view the status of all the robots under RMF. To request a list of tasks, first select the Airport tab. You can then choose to submit either (1) an ad hoc task or (2) a task list.

Copy and paste the following into the Task List box (or open a file):

[{"task_type":"Delivery", "start_time":0, "description": {"option": "mop"}},

{"task_type":"Loop", "start_time":0, "description": {"num_loops":10, "start_name":"junction_north_west", "finish_name":"n14"}},

{"task_type":"Loop", "start_time":0, "description": {"num_loops":10, "start_name":"tinyRobot_n09", "finish_name":"s07"}},

{"task_type":"Clean", "start_time":0, "priority":0, "description":{"cleaning_zone":"zone_2"}}

]

Then click Submit to submit the list of tasks.

Now, sit back and enjoy.
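The task list shown above is plain JSON, so it can also be generated rather than typed by hand. Here is a minimal sketch that builds entries in the same shape and rejects unknown task types. The `make_task` helper and the `ALLOWED_TASK_TYPES` set are hypothetical, and the optional "priority" field from the example above is left out for brevity.

```python
import json

ALLOWED_TASK_TYPES = {"Delivery", "Loop", "Clean"}


def make_task(task_type: str, description: dict, start_time: int = 0) -> dict:
    """Return one task entry in the same shape as the task list above."""
    if task_type not in ALLOWED_TASK_TYPES:
        raise ValueError(f"unknown task type: {task_type}")
    return {"task_type": task_type, "start_time": start_time,
            "description": description}


tasks = [
    make_task("Delivery", {"option": "mop"}),
    make_task("Loop", {"num_loops": 10,
                       "start_name": "junction_north_west",
                       "finish_name": "n14"}),
    make_task("Clean", {"cleaning_zone": "zone_2"}),
]

# Text ready to paste into the Task List box.
print(json.dumps(tasks, indent=2))
```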

Jump in, the water is fine!

So now that you have an idea of what RMF is all about, it's time to jump in. If you have not already done so, we suggest taking the time to review the RMF Demos repository. If you want a really quick overview of RMF, take a look at this Mock Airport Terminal video demo (Short film Oscar nominations most welcome). We hope you find RMF to be a useful tool to help you scale your robot deployments and operations, and we look forward to the many improvements and contributions to come!

An Introduction to ROS 2

In this chapter we will cover the basics of the Robot Operating System (ROS) and give you all the tools you need to build, debug, and understand robotic applications. This chapter is laid out from the most general concepts, necessary for decision makers to make sound decisions, to the specific API references needed by engineers to develop new robotic applications. Somewhere between the high-level concepts and the low-level API commands lives the knowledge necessary for those maintaining and supporting multi-robot deployments in the field.

A good analogy to learning about ROS is the process of learning about motor vehicles. At the practical, day-to-day level, most people will learn how to start a vehicle and safely use it on a motorway. For these individuals, learning about the high level concepts behind ROS, along with application-specific commands is probably sufficient. Those who enjoy driving often choose to learn how to repair and maintain their vehicle. If this is your interest level we recommend learning the basics of the ROS command line interface. This will allow you to "check the oil" of your robotic systems and make sure everything is functioning correctly. Finally, if you are the type that would like to swap out the engine of your vehicle with something more powerful, or potentially build a wholly new vehicle from scratch, then the ROS API is the set of tools that will make this possible. Generally speaking, automotive engineers don't appear into the world fully formed, and the same is true for roboticists. It is advisable to work through each phase of understanding as you develop your skills with ROS.

Following from our analogy above the process of learning how to use robotic systems built on ROS can be divided roughly into four parts. This chapter works through these four parts of the process, using ROS 2. Subsequent chapters then build upon this knowledge and discuss the subtleties of specific applications. The four parts of this chapter are as follows.

- A meta-discussion of the tools and resources available to help you in the learning process.

- A high-level discussion of the design patterns used in ROS. These patterns are roughly analogous to the subsystems you would find in a vehicle (engine, brakes, safety, climate control, etc).

- A treatment of the command line interface (CLI) for ROS. The CLI is a set of programs for starting, inspecting, controlling, and monitoring a ROS robot. You can think of this topic as teaching you how to check a robot's oil and read the instrument panel.

- An introduction to the ROS application programming interface (API). This section will show you how to create your own applications and modify existing software for your specific application.

While this book aims to cover the basics it should be made clear that ROS, like almost all software, is a moving target. Technology moves quickly, and while print media is helpful at delivering high fidelity instruction, that instruction can become rapidly outdated. For this reason we start this chapter with a meta-discussion of ROS resources that can be used to help you in your learning process.

ROS Kick Off

This chapter describes the avenues available for learning about and getting help with ROS, as well as how to get started by setting up and installing ROS.

Resources

The most up to date information about ROS can be found on the web. There are a myriad of resources on-line to help you out in your educational or practical journey. One thing to keep in mind is that ROS, like most software, has different versions, and the format and structure of commands and API calls may differ slightly between versions (although the developers try to keep things as stable as possible). This book is specifically written for ROS 2, Eloquent Elusor, or ROS Eloquent to be terse.

While newer or older versions of ROS 2 will be generally similar, it is worth paying attention to the distribution name or version number, as there are changes between versions. A major version of ROS 2 generally corresponds to a distribution, whose name pairs an adjective with a turtle-related noun (a genus or species) starting with the same letter (e.g., Eloquent Elusor or Foxy Fitzroy). It is worth noting that ROS versions are usually pegged to a specific version of Ubuntu Linux.

ROS grew up with the modern web, and as such it has a variety of resources and forums to help you solve problems and learn about the API and tools. Some of our web resources actually pre-date more widely used systems, so it helps to know where they are and how to use them. Probably the most important resource on the web for ROS users is answers.ros.org. Answers is a Q&A website similar to StackOverflow. Once you register for Answers you can ask or answer any ROS-related question. Be aware that asking a question well can be difficult. You should include as much information as possible to help others answer your question. This means you should include the ROS version, platform version, any debugging or stack trace information you have, and the offending source code.

Aside from ROS Answers you should check out both the ROS 2 tutorials and API documentation, and the ROS 1 wiki. The ROS 1 wiki can be found at wiki.ros.org. While it is specifically dedicated to ROS 1, much of the information is still relevant to ROS 2. If you are searching for up-to-date ROS 2 information, your go-to source is the ROS 2 tutorials and API documents located at index.ros.org/doc/ros2. Many of the tutorials you will find in this book pull directly from this body of work. If you would like to find the latest ROS news and discuss various ROS features, the ROS Discourse forum at discourse.ros.org is your best bet. ROS Discourse is the community hub where developers discuss their latest projects and debate the finer points of ROS development.

For ROS application developers there are a number of tools to help you connect with the broader ROS developer community. Open Robotics supports index.ros.org, which is an extended list of ROS packages sorted by version. If you are searching for a ROS driver for a particular piece of hardware, then the index is a great place to start. If you find a package with failing tests, or would like to know the build status of any ROS package, take a look at build.ros.org. Similarly, for un-indexed packages GitHub maintains a ROS code tag. This tag will allow you to search all of the tagged repositories that are publicly listed. At the time of writing there were close to 4000 repositories listed on GitHub, so there is a pretty good chance you'll find what you need.

Finally, there are a variety of unofficial resources that you should be aware of that can be useful, particularly if you want to keep yourself up to date with the latest ROS projects and features. Both Open Robotics and ROS maintain twitter feeds to share the latest news. We also have a yearly ROS developers conference called ROSCon; most talks are freely available on the web. There are a few other resources that can also be useful including the ROS subreddit and an "unofficial" ROS Discord.

Setting Up Your Computer

For this chapter we assume that you are working on a modern desktop with a discrete graphics card. While a graphics card isn't necessary for this chapter, later chapters will be graphics intensive and having one will greatly improve the end user experience. Moreover, this book assumes you are working with the Ubuntu Linux 18.04 operating system. While other operating systems are supported by ROS 2, all of the tutorials and instructions in this book assume you are running Linux. If instead you're using a Mac or Windows PC, you can install ROS 2 Eloquent Elusor using the instructions found on the ROS 2 installation instructions page. An alternative path for installation on Mac and PC is to use a virtual machine. Roughly, the process for doing so is as follows:

- Install virtual machine software like Virtual Box or VMWare on your host machine.

- Create a virtual machine using the software, and install Desktop Ubuntu 18.04 Bionic Beaver from the Canonical website. Configure the installation as you wish.

- Now start your virtual machine and log in as a user. The directions below should be applicable.

For these initial tutorials we will be working with the pre-compiled ROS 2 Eloquent Elusor desktop version. These directions follow directly from the installation instructions found on the [Eloquent installation page](https://index.ros.org/doc/ros2/Installation/Eloquent/Linux-Install-Debians/). To run these commands you'll need a terminal window. To open a terminal in Ubuntu 18.04 click on the nine dots in the bottom left hand of the screen. A dialog should appear. Enter the word terminal and click on the terminal icon to open a terminal. Alternatively, you can press the control, alt, and 't' keys simultaneously to open a terminal (we abbreviate this CTRL-ALT-T).

Setup Locale

The first step is to make sure you have a locale which supports UTF-8. What this means is that we will check that the language used by your computer uses a particular format of text. If you are in a minimal environment, such as a Docker container, the locale may be something minimal like POSIX. We test with the following settings. It should be fine if you're using a different UTF-8 supported locale.

sudo locale-gen en_US en_US.UTF-8

sudo update-locale LC_ALL=en_US.UTF-8 LANG=en_US.UTF-8

export LANG=en_US.UTF-8
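If you would like to check the result from a script, the sketch below inspects the standard locale environment variables and reports whether they select a UTF-8 locale. The function name `is_utf8_locale` is hypothetical. The check relies on the usual POSIX precedence, where `LC_ALL` overrides `LC_CTYPE`, which in turn overrides `LANG`.

```python
import os


def is_utf8_locale(env=None) -> bool:
    """Report whether the locale environment variables select a UTF-8 locale.

    The first non-empty variable among LC_ALL, LC_CTYPE, and LANG decides
    the character encoding, in that order of precedence.
    """
    env = os.environ if env is None else env
    for name in ("LC_ALL", "LC_CTYPE", "LANG"):
        value = env.get(name, "")
        if value:
            # Accept both spellings, e.g. "en_US.UTF-8" and "en_US.utf8".
            return "utf8" in value.lower().replace("-", "")
    return False  # nothing set: the default C/POSIX locale is not UTF-8


print(is_utf8_locale({"LANG": "en_US.UTF-8"}))
print(is_utf8_locale({"LANG": "POSIX"}))
```

Calling `is_utf8_locale()` with no argument checks the environment of the current process.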

Setup Sources

You will need to add the ROS 2 apt repositories to your system. Out of the box, Ubuntu doesn't know where the ROS 2 binary programs live, so we have to give it a secure location. Because the commands below use sudo, the computer will prompt you for your password. For more technical readers: we need to authorize the ROS GPG key with apt by typing the following commands in the terminal:

sudo apt update && sudo apt install curl gnupg2 lsb-release

curl -s https://raw.githubusercontent.com/ros/rosdistro/master/ros.asc | sudo apt-key add -

Then add the ROS 2 repository to your apt sources list, as given in the official Eloquent installation instructions:

sudo sh -c 'echo "deb [arch=$(dpkg --print-architecture)] http://packages.ros.org/ros2/ubuntu $(lsb_release -cs) main" > /etc/apt/sources.list.d/ros2-latest.list'

Install ROS 2 Packages

The next step for installing ROS is to do a system update (i.e. check for newer programs) and then install ROS Eloquent. To do this we run the following commands. Be aware that these commands will download a lot of data and may take a while. It is best to run these commands on your home network.

sudo apt update

Desktop Install (Recommended): ROS, RViz, demos, tutorials.

sudo apt install ros-eloquent-desktop

Next we'll install a set of tutorials called TurtleSim. To do this we run another apt command.

sudo apt install ros-eloquent-turtlesim

ROS 2 command line tools use argcomplete for autocompletion. If you want autocompletion, installing argcomplete is necessary. We're also going to install a few other tools to make our lives easier.

sudo apt install python3-argcomplete htop byobu

Check Your Installation

ROS uses environment variables to keep track of which version of ROS is running and where all the programs using ROS are on the computer. To set these environment variables we source, or load, a bash script file. A bash script file isn't magic; it is a series of commands to enter into the terminal, just like the series of commands we just entered to set up ROS. It is possible to have different versions of ROS installed on a single computer. Using the wrong version of ROS can lead to all sorts of problems and is a common mistake for new users! If you are having problems, try sourcing the correct ROS bash file. From now on, whenever you open a new terminal, you will need to source a bash file to tell the computer which version of ROS to use. Yes, this is annoying, but it is a sound approach as it makes the version of ROS you are using explicit. On Ubuntu 18.04 all versions of ROS live in /opt/ros/. Inside this directory, each installed version has its own subdirectory containing the programs and script files needed to run ROS. To tell the operating system that we want to use ROS Eloquent we simply source the ROS Eloquent setup.bash file using the command below:

source /opt/ros/eloquent/setup.bash

Once that command runs, your terminal should be ready to run a ROS program. Let's test our installation by running two small ROS programs called talker and listener. These two programs will send data back and forth using ROS to perform the communication. One program was written in C++ and the other in Python. Running these two different programs is a quick and easy way to check that your ROS system is configured correctly. To start the talker run the following command:

source /opt/ros/eloquent/setup.bash

ros2 run demo_nodes_cpp talker

If everything is working correctly you should see something like the following:

[INFO] [talker]: Publishing: 'Hello World: 1'

[INFO] [talker]: Publishing: 'Hello World: 2'

[INFO] [talker]: Publishing: 'Hello World: 3'

....

Now, let's fire up the listener. We're going to use a Python listener in this example to make sure we installed Python correctly. First we will need a second terminal. We can open a new terminal tab by pressing CTRL-SHIFT-T in our terminal. We can also create a wholly new terminal by pressing CTRL-ALT-T. Pick whatever works best for you. Now in your new terminal source your bash file and run the following command:

source /opt/ros/eloquent/setup.bash

ros2 run demo_nodes_py listener

If everything is working correctly you should see something like the following:

[INFO] [listener]: I heard: [Hello World: 264]

[INFO] [listener]: I heard: [Hello World: 265]

[INFO] [listener]: I heard: [Hello World: 266]

Now that we have tested our ROS installation we can stop these two programs. In ROS most programs run in infinite loops until the robot is shut down. To stop these programs we navigate to the terminal running the program and press the Ctrl and C keys simultaneously. We call this combo CTRL-C and you can use it to stop just about any program in a terminal. Use it to stop the talker and listener programs.

ROS Concepts and Design Patterns

As we said, learning about ROS is similar to learning about an automobile. In fact, a car is a lot like a robot (and sometimes it really is a robot; see the large and active self-driving vehicle industry). A modern automobile comprises many parts that are connected to each other. The steering wheel is connected to the front axle, the brake pedal is connected to the brake calipers, the oxygen sensor is connected to the fuel injectors, and so on. From this perspective, a car is a distributed system: each part plays a well-defined role, communicating (whether electrically or mechanically) as needed with other parts, and the result of that symphony-like coordination is a working vehicle.

A key philosophical tenet of ROS is that robotics software should also be designed and developed as a distributed system. We aim to separate the functions of a complex system into individual parts that interact with each other to produce the desired behavior of that system. In ROS we call those parts nodes and we call the interactions between them topics (and sometimes services, but we will get to that).

The ROS Communication Graph

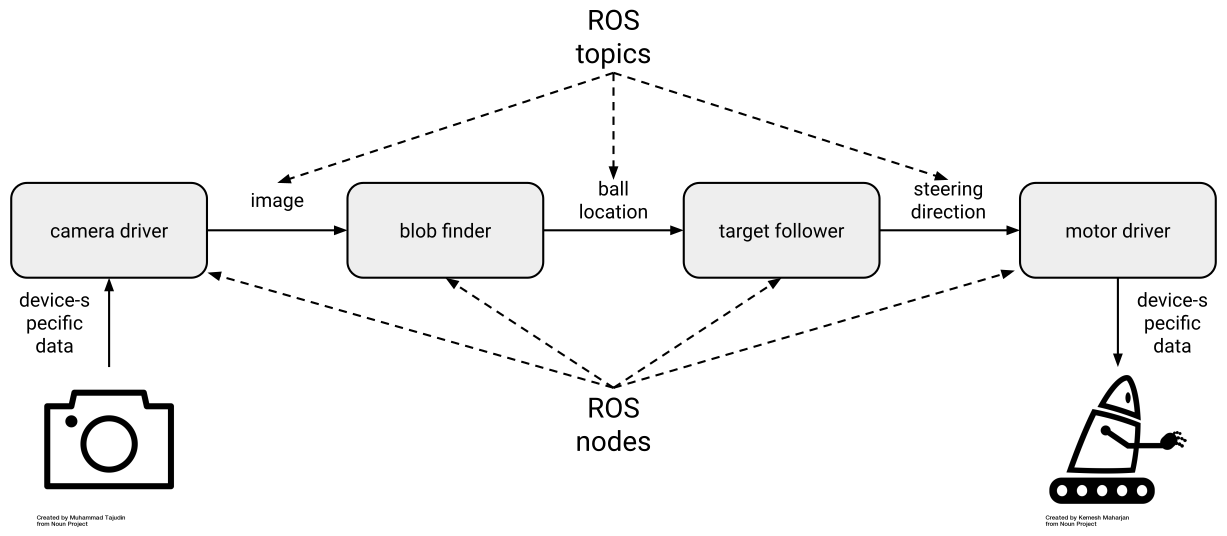

Imagine we are building a wheeled robot that chases a red ball. This robot needs a camera with which to see the ball, a vision system to process the camera images to figure out where the ball is, a control system to decide what direction to move, and some motors to move the wheels to allow it to move toward the ball. Using ROS we might construct the system like so:

This design separates the software into four ROS nodes: two device drivers and two algorithms. Those nodes communicate with each other as shown, via three ROS topics. We call this structure a ROS communication graph: the nodes are the graph vertices and the topics are the graph edges. You can tell a lot about a ROS system by examining its communication graph.

The camera driver node is responsible for handling the details of interacting with the physical camera, which might happen through a custom USB protocol, through a vendor-provided library, or in some other way. Whatever those details, they are encapsulated inside the camera driver node, which presents a standard topic interface to the rest of the system. As a result, the blob finder node does not need to know anything about the camera; it simply receives image data in a standard format that is used for all cameras in ROS. The output of the blob finder is the detected location of the red ball, also in a standard format. Then the target follower node can read in the ball location and produce the steering direction needed to move toward the ball, again in a standard format. Finally, the motor driver node's responsibility is to convert the desired steering direction into the specific instructions necessary to command the robot's wheel motors accordingly.

Publish-Subscribe Messaging: Topics and Types

With the example of the ball-chasing robot in mind, we can add some terminology to describe what is happening as the system operates. First, the ROS communication graph is based on a well-known pattern called publish-subscribe messaging, or simply pub-sub. In a pub-sub system, as the name implies, data are sent as messages from publishers to subscribers. A publisher may have zero, one, or multiple subscribers listening to its published messages. Messages may be published at any time, making the system asynchronous.

In ROS, nodes publish and subscribe via topics, each of which has a name and a type. A publisher announces that it will be publishing data by advertising a topic. For example, the camera driver node may advertise a topic named /image with type sensor_msgs/Image. If the blob finder node subscribes to a topic with the same name and type, then the two nodes find each other and establish a connection over which image messages can get from the camera driver to the blob finder (the nodes find each other and establish those connections in a process called discovery, which will be covered in detail later in this book). Each message that flows across the /image topic will be of type sensor_msgs/Image.

A single node can be (and often is) both a publisher and a subscriber. In our example, the blob finder subscribes to image messages and publishes ball location messages. Similarly the target follower subscribes to ball location messages and publishes steering direction messages.
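To make the pattern concrete, here is a minimal sketch of publish-subscribe in plain Python. It is not the ROS API: the TopicBus class, the simplified Image and BallLocation types, and the /ball_location topic name are all invented for illustration, and everything runs inside one process with no discovery step.

```python
from collections import defaultdict
from dataclasses import dataclass


class TopicBus:
    """Toy in-process stand-in for a pub-sub graph (hypothetical, not ROS)."""

    def __init__(self):
        self._types = {}                       # topic name -> message type
        self._subscribers = defaultdict(list)  # topic name -> callbacks

    def _check_type(self, topic, msg_type):
        # The first use of a topic fixes its type; later uses must match.
        known = self._types.setdefault(topic, msg_type)
        if known is not msg_type:
            raise TypeError(f"{topic} carries {known.__name__}, not {msg_type.__name__}")

    def subscribe(self, topic, msg_type, callback):
        self._check_type(topic, msg_type)
        self._subscribers[topic].append(callback)

    def publish(self, topic, msg):
        self._check_type(topic, type(msg))
        # A topic may have zero, one, or many subscribers.
        for callback in self._subscribers[topic]:
            callback(msg)


@dataclass
class Image:  # simplified stand-in, not the real sensor_msgs/Image
    width: int
    height: int


@dataclass
class BallLocation:  # hypothetical message type
    x: float
    y: float


bus = TopicBus()
received = []


def blob_finder(image):
    # Both a subscriber (to /image) and a publisher (of /ball_location).
    # Pretend the ball is always at the centre of the image.
    bus.publish("/ball_location", BallLocation(image.width / 2, image.height / 2))


bus.subscribe("/image", Image, blob_finder)
bus.subscribe("/ball_location", BallLocation, received.append)
bus.publish("/image", Image(640, 480))
print(received)  # [BallLocation(x=320.0, y=240.0)]
```

Notice that the code publishing to /image never mentions the blob finder, and the blob finder never mentions whoever consumes /ball_location. The topic name and type are the only things the two sides share.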

A topic's type is very important. In fact, taken together, the ROS types are among the most valuable aspects of the entire platform. First, a type tells you the syntax: which fields, of which types, does the message contain? Second, it tells you the semantics: what do those fields mean and how should they be interpreted? For example, a thermometer and a pressure sensor might produce what appear to be the same data: a floating-point value. But in ROS, a well-designed thermometer driver node would publish one clearly defined type (say, sensor_msgs/Temperature), while a pressure sensor driver node would publish another (say, sensor_msgs/FluidPressure).

We always advise the use of semantically meaningful message types. For example, ROS provides simple message types like std_msgs/Float64, which contains a single 64-bit floating-point field called data. But you should only use that sort of generic type for rapid prototyping and experimenting. When you build a real system, even if something like std_msgs/Float64 could get the job done on syntax, you should instead find or define a message that also matches the semantics of your application.
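The following sketch shows why this matters, using plain Python dataclasses as simplified stand-ins for the two sensor types mentioned above. The real ROS messages carry more fields (such as a header), and the overheating function and its 80-degree limit are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Temperature:  # simplified stand-in for sensor_msgs/Temperature
    temperature: float  # degrees Celsius
    variance: float = 0.0


@dataclass
class FluidPressure:  # simplified stand-in for sensor_msgs/FluidPressure
    fluid_pressure: float  # Pascals
    variance: float = 0.0


def overheating(reading, limit_celsius=80.0):
    """Hypothetical consumer that only makes sense for temperatures."""
    if not isinstance(reading, Temperature):
        raise TypeError(f"expected Temperature, got {type(reading).__name__}")
    return reading.temperature > limit_celsius


print(overheating(Temperature(95.0)))  # True

try:
    overheating(FluidPressure(101325.0))
except TypeError as error:
    print(error)  # expected Temperature, got FluidPressure
```

Had both sensors published a bare floating-point number, the second call would have quietly compared a pressure in Pascals against a temperature limit.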

Why Publish-Subscribe?

Given that it comes with additional complexity (nodes, topics, types, etc.), it is reasonable to ask why ROS follows the pub-sub pattern. After more than a decade of building and deploying ROS-based robot systems, we can identify several key benefits:

- Substitution: If we decide to upgrade the robot's camera, we need only modify or replace the camera driver node. The rest of the system never knew the details of the camera anyway. Similarly, if we find a better blob finder node, then we can just swap it in for the old one and nothing else changes.

- Reuse: A well-designed blob finder node can be used today on this robot to chase the red ball, then reused tomorrow on a different robot to chase an orange cat, and so on. Each new use of a node should require only configuration (no code) changes.

- Collaboration: By cleanly separating concerns between nodes, we let our blob finder expert do their work independently of the target follower expert, with neither of them bothering the device driver expert. It is often the case that a robot application requires the combined expertise of many people, and it would be difficult to overstate the importance of ensuring that they can each contribute confidently and efficiently.

- Introspection: Because the nodes are explicitly communicating with each other via topics, we can listen in. So when the robot fails to chase the red ball, and we think that the problem is in the blob finder, we can use developer tools to visualize, log, and play back that node's inputs and outputs. The ability to introspect a running system in this way is instrumental to being able to debug it.

- Fault tolerance: Say that the target follower node crashes because of a bug. If it is running in its own process, then that crash will not bring down the rest of the system, and we can get things working again by just restarting the target follower. In general with ROS we have the choice to run nodes in separate processes, which allows for such fault tolerance, or run them together in a single process, which can provide higher performance (and of course we can mix and match the two approaches).

- Language independence: It can happen that our blob finder expert writes their computer vision code in C++, while our target follower expert is dedicated to Python. We can accommodate those preferences easily by just running those nodes in separate processes. In ROS, it is perfectly reasonable, and in fact quite common, to mix and match the use of languages in this way.

Beyond Topics: Services, Actions, and Parameters

Most ROS data flows over topics, which we introduced in the previous sections. Topics are best for streaming data, which includes a lot of the common use cases in robotics. For example, going back to our ball-chasing robot, most cameras will naturally produce a stream of images at some rate, say, 30 Hz. So it makes sense for the camera driver to publish the ROS messages containing those images just as soon as they're received. Then the blob finder will be receiving image messages at 30 Hz, so it might as well publish its ball location messages at the same rate, and so on, through the target follower to the motor driver. We might say that such a system is clocked from the camera: the data rate of the primary sensor, the camera in this case, drives the rate of computation of the system, with each node reacting to receipt of messages published via topics by other nodes. This approach is fairly common and is appropriate for systems like our ball-chasing robot. There is no reason to do any work until you have a new camera image, and once you have one you want to process it as quickly as possible and then command an appropriate steering direction in response.

(We are making various simplifying assumptions, including that there is sufficient computational capacity to run all the nodes fast enough to keep up with the camera's data rate; that we do not have a way to predict where the ball is going in between camera frames; and that the motors can be commanded at the same rate the camera produces images.)

Services

So topics get the job done for the basic ball-chasing robot. But now say that we want to add the ability to periodically capture an ultra-high-resolution image. The camera can do it, but it requires interrupting the usual image stream that we rely on for the application, so we only want it to happen on demand. This kind of interaction is a poor fit for the publish-subscribe pattern of a topic. Fortunately, ROS also offers a request-reply pattern in a second concept: services.

A ROS service is a form of remote procedure call (RPC), a common concept in distributed systems. Calling a ROS service is similar to calling a normal function in a library via a code API. But because the call may be dispatched to another process or even another machine on the network, there is more to it than just copying pointers around. Specifically, a ROS service is implemented using a pair of ROS messages: a request and a reply. The node calling the service populates the request message and sends it to the node implementing the service, where the request is processed, resulting in a reply message that is sent back.

We might implement the new high-res snapshot capability like so:

- Define a new service type. Because services are less widely used than topics, there are relatively few "standard" service types predefined. In our case, the new service's request message might include the desired resolution of the snapshot. The reply message could be a standard sensor_msgs/Image.

- Implement the service. In the camera driver, we would advertise the newly defined service so that when a request is received, the usual image-handling is interrupted temporarily to allow the device interaction necessary to grab one high-res snapshot, which is then packed into a reply message and sent back to the node that called the service.

- Call the service. In the target follower node, we might add a timer so that every 5 minutes, it calls the new service. The target follower would receive the high-res snapshot in response to each call, and could then, say, add it to a photo gallery on disk.

In general, if you have a need for infrequent, on-demand interactions among nodes, ROS services are a good choice.
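The three steps above can be sketched in plain Python. This is only an illustration of the request-reply shape, not the ROS service API: the SnapshotRequest and SnapshotReply types, the /take_snapshot name, and the call_service helper are all assumptions, and the "call" here is an ordinary function call rather than a message sent across processes.

```python
from dataclasses import dataclass


@dataclass
class SnapshotRequest:  # hypothetical request message
    width: int
    height: int


@dataclass
class SnapshotReply:  # hypothetical reply message
    width: int
    height: int
    data: bytes


class CameraDriver:
    """Toy camera driver that advertises one service."""

    def __init__(self):
        self.streaming = True
        # "Advertise" the service by registering a handler under a name.
        self.services = {"/take_snapshot": self.handle_snapshot}

    def handle_snapshot(self, request):
        self.streaming = False  # interrupt the usual image stream
        try:
            # Stand-in for talking to the device: one zero byte per pixel.
            pixels = bytes(request.width * request.height)
            return SnapshotReply(request.width, request.height, pixels)
        finally:
            self.streaming = True  # resume streaming whatever happens


def call_service(node, name, request):
    # In a real system this crosses process or machine boundaries.
    return node.services[name](request)


camera = CameraDriver()
reply = call_service(camera, "/take_snapshot", SnapshotRequest(4, 3))
print(reply.width, reply.height, len(reply.data))  # 4 3 12
```

The caller blocks until the reply arrives, which is fine for a quick snapshot but becomes a problem for long-running work.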

Actions

Sometimes, when building robot control systems, there is a need for an interaction that looks like request-reply, but that can require a lot of time between the request and the reply. Imagine that we want to wrap up our ball-chasing control system into a black box that can be invoked as part of a larger system that makes the robot play football. In this case, the higher-level football controller will periodically want to say, "please chase the red ball until you have it right in front of you." Once the ball is in front of the robot, the football controller wants to stop the ball-chasing controller and invoke the ball-catching controller.

We could achieve this kind of interaction with a ROS service. We could define a chase-ball service and implement it in the target follower. Then the football controller could call that service when it wants the ball chased. But ball-chasing may take quite some time to complete, and it may fail to complete. Unfortunately, after calling the chase-ball service, the football controller is stuck waiting for the reply, similar to the situation in which you call a long-running function in code. The football controller does not know how well (or poorly) the chase is going, and it cannot stop the chase.

For such goal-oriented, time-extended tasks, ROS offers a third concept that is similar to services but more capable: actions. A ROS action is defined by three ROS messages: a goal, a result, and feedback. The goal, sent once by the node calling the action to initiate the interaction, indicates what the action is trying to achieve; for ball-chasing it might be the minimum required distance to the ball. The result, sent once by the node implementing the action after the action is complete, indicates what happened; for ball-chasing it might be the final distance to the ball after the chase. The feedback, sent periodically by the node implementing the action until it is complete, updates the caller on how things are going; for ball-chasing it might be the current distance to the ball during the chase. In addition, actions are cancelable, so the football controller can decide to give up and move on to another tactic if the chase is taking too long or if the feedback messages are showing that there is little chance of success.

In general, if you want to support on-demand, long-running behaviors, ROS actions are a good choice.
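Here is a rough sketch of the goal, feedback, result, and cancel pieces in plain Python. It is not the ROS action API: the ChaseGoal and ChaseResult types and the chase_ball function are hypothetical, the robot simply closes the distance by a fixed step each iteration, and everything runs synchronously in one process.

```python
from dataclasses import dataclass


@dataclass
class ChaseGoal:  # hypothetical goal message
    min_distance: float


@dataclass
class ChaseResult:  # hypothetical result message
    final_distance: float
    succeeded: bool


def chase_ball(goal, start_distance, step=1.0,
               should_cancel=lambda: False, on_feedback=lambda distance: None):
    """Toy action server: report progress until done or cancelled."""
    distance = start_distance
    while distance > goal.min_distance:
        if should_cancel():
            return ChaseResult(distance, succeeded=False)
        distance = max(goal.min_distance, distance - step)
        on_feedback(distance)  # periodic feedback: current distance
    return ChaseResult(distance, succeeded=True)


# A chase that runs to completion.
feedback = []
result = chase_ball(ChaseGoal(0.5), start_distance=3.0, on_feedback=feedback.append)
print(feedback)  # [2.0, 1.0, 0.5]
print(result)    # ChaseResult(final_distance=0.5, succeeded=True)

# A chase that the caller cancels after two feedback messages.
seen = []
cancelled = chase_ball(ChaseGoal(0.5), start_distance=3.0,
                       should_cancel=lambda: len(seen) >= 2,
                       on_feedback=seen.append)
print(cancelled)  # ChaseResult(final_distance=1.0, succeeded=False)
```

In a real system the caller would keep doing other work while feedback arrives. The point here is only the division of roles among the three messages and the cancel request.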

Parameters

Any nontrivial system requires configuration, and ROS is no exception. When we start our robot's motor driver node, how do we tell it to connect to the motors via /dev/ttyUSB1? We do not want to hard-code that information into the node, because on the next robot it might be /dev/ttyUSB0 instead. ROS addresses such configuration needs via a fourth concept: parameters. A ROS parameter is what you might expect: a named, typed place to store a piece of data. For example, the motor driver node may define a parameter called serial_port with type string. When it starts up, the node would use the value of that parameter to know which device to open to get to the motor system.

ROS parameters can be set in a few ways:

- Defaults. A ROS node that uses a parameter must embed in its code some default value for that parameter. In the case that nothing else in the system sets the parameter value explicitly, the node needs some value to work with.

- Command-line. There is standard syntax for setting parameter values on the command-line when launching a node. Values set in this manner override defaults in the code.

- Launch files. When launching nodes via the launch tool instead of manually via the command-line, you can set parameter values in the launch file. Values set in this manner override defaults in the code.

- Service calls. ROS parameters are dynamically reconfigurable via a standard ROS service interface, allowing them to be changed on the fly, if the node hosting the parameters allows it. Values set in this manner override whatever previous values were set.

For most nodes, parameter management is relatively simple: define a handful of parameters, each with a reasonable default; retrieve the parameters' values at startup, which accounts for changes made via command-line or launch file, then begin execution and disallow future changes. This pattern makes sense for the motor driver, which needs to know which /dev/ttyUSB device file to open at startup, and does not support changing that setting later. But there are cases that require more sophisticated handling. For example, the blob finder node may expose as parameters a variety of thresholds or other settings that configure how it identifies the red ball in images. These kinds of settings can be changed on the fly, which the target follower might want to do, based on how well the chase is going. In this case the blob finder needs to be sure to use the latest values for its parameters, knowing that they may have been changed by another node.

In general, when you want to store stable, but possibly changeable, configuration information in a node, ROS parameters are a good choice.
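The precedence rules described in this section can be sketched in a few lines of plain Python. The Parameters class below is hypothetical and is not how ROS stores parameters; it only models defaults, startup overrides (standing in for command-line or launch-file values), and optional runtime changes. The red_threshold parameter name is made up.

```python
class Parameters:
    """Toy parameter store: defaults, startup overrides, optional runtime sets."""

    def __init__(self, defaults, overrides=None, allow_runtime_changes=False):
        self._values = dict(defaults)  # every parameter has a default
        for name, value in (overrides or {}).items():
            self._set_checked(name, value)  # startup values beat defaults
        self._allow_runtime_changes = allow_runtime_changes

    def _set_checked(self, name, value):
        if name not in self._values:
            raise KeyError(f"undeclared parameter: {name}")
        expected = type(self._values[name])
        if not isinstance(value, expected):
            raise TypeError(f"{name} must be of type {expected.__name__}")
        self._values[name] = value

    def get(self, name):
        return self._values[name]

    def set(self, name, value):
        # Models a change requested by another node while this one is running.
        if not self._allow_runtime_changes:
            raise PermissionError("this node does not accept parameter changes")
        self._set_checked(name, value)


# Motor driver: read once at startup, no changes afterwards.
motor = Parameters({"serial_port": "/dev/ttyUSB0"},
                   overrides={"serial_port": "/dev/ttyUSB1"})
print(motor.get("serial_port"))  # /dev/ttyUSB1

# Blob finder: thresholds may be tuned on the fly.
blob = Parameters({"red_threshold": 0.8}, allow_runtime_changes=True)
blob.set("red_threshold", 0.6)
print(blob.get("red_threshold"))  # 0.6
```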

Asynchrony in Code: Callbacks

Throughout ROS, you will see a common pattern in the code, which is the use of callback functions, or simply callbacks. For example, when subscribing to a topic, you supply a callback, which is a function that will be invoked each time your node receives a message on that topic. Similarly, when you advertise a service, you supply a callback that is invoked when the service is called. The same goes for actions (for handling of goals, results, and feedback) and parameters (for handling of setting new values).

Programming with callbacks is not familiar to everyone. It differs from the standard sequential presentation of programming, in which you write a main() function that does A, then B, then C, and so on. By contrast, in ROS (and in most systems that focus on data-processing and/or control), we follow an event-based pattern. In this pattern, we do A whenever X happens, B whenever Y happens, and so on.

A common structure for a ROS node is the following:

- Get parameter values. Retrieve the node's configuration, considering defaults and what may have been passed in from outside.

- Configure. Do whatever is necessary to configure the node, like establish connections to hardware devices.

- Set up ROS interfaces. Advertise topics, services, and/or actions, and subscribe to topics. Each of these steps supplies a callback function that is registered by ROS for later invocation.

- Spin. Now that everything is configured and ready to go, hand control over to ROS. As messages flow in and out, ROS will invoke the callbacks you registered.

Following this structure, a main() function in a ROS node is often very short: initialize and configure everything, then call a spin function to let ROS take over. When you are trying to understand what is happening in a ROS node, look in the callbacks; that is where the real work is happening.
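The four-step structure can be sketched with a toy event loop in plain Python. The ToyExecutor class is hypothetical and stands in for the machinery ROS provides, the /stop event name is made up, and unlike a real spin function this one returns as soon as the queue of pending events is empty.

```python
from collections import deque


class ToyExecutor:
    """Minimal event loop: run the registered callback for each pending event."""

    def __init__(self):
        self._callbacks = {}
        self._pending = deque()

    def register(self, event, callback):
        self._callbacks[event] = callback

    def post(self, event, payload):
        self._pending.append((event, payload))

    def spin(self):
        # A real spin would block waiting for new events; this toy version
        # simply drains whatever has been posted so far.
        while self._pending:
            event, payload = self._pending.popleft()
            self._callbacks[event](payload)


def main():
    commands = []

    # 1. Get parameter values (hard-coded here for brevity).
    speed_limit = 2.0

    # 2. Configure (nothing to connect to in this sketch).
    executor = ToyExecutor()

    # 3. Set up interfaces: each registration supplies a callback.
    executor.register("/ball_location",
                      lambda offset: commands.append(min(abs(offset), speed_limit)))
    executor.register("/stop", lambda _: commands.append(0.0))

    # Simulate messages arriving from other nodes.
    executor.post("/ball_location", 5.0)
    executor.post("/ball_location", 1.5)
    executor.post("/stop", None)

    # 4. Spin: hand over control and let the callbacks do the work.
    executor.spin()
    return commands


print(main())  # [2.0, 1.5, 0.0]
```

Reading main() from top to bottom tells you how the node is wired. To learn what it actually does with each message, you have to read the callbacks.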

The ROS Command Line Interface

The ROS command line interface, or CLI for short, is a set of programs for starting, inspecting, controlling, and monitoring a ROS robot. The best way to think of the CLI is as a collection of small and simple programs that allow you to perform basic tasks in ROS. Drawing from our car analogy, the CLI can be thought of as the subsystems of a vehicle: the brakes, the transmission, the window wipers, all of the smaller parts that are composed together to build the larger vehicle. What we'll show you in this section is how to turn on the car, put it in gear, turn on the radio, and perhaps check your oil to perform routine maintenance. The ROS 2 CLI draws heavily from the Unix/Linux philosophy of small programs that can be composed together. If you are familiar with the command line interface found in Unix and Linux, or to a lesser extent in macOS or Windows, you'll feel right at home.

The ROS command line tools draw heavily from the design patterns mentioned in the previous section, and directly interface with the APIs we will address in the next section. The CLI is at its core just a set of simple tools built from the ROS 2 API; this API is simply an implementation of the high-level patterns we discussed in the previous section. If your goal is to simply interface with a particular piece of software written using ROS, the CLI is the way you will go about starting, stopping, and controlling the underlying ROS software. For more advanced users these tools will allow you to study a ROS system by exploring the underlying software processes in the system.

There are only two things you need to memorize from this section. The first command simply tells your computer that you are using ROS, and what version of ROS you want to use. Let's take a look at the magic command:

source /opt/ros/eloquent/setup.bash

If everything is working correctly, this command should simply return. Nothing happens that you can see, but underneath the hood you've just told this particular shell that you are using ROS 2 Eloquent Elusor, and where all the ROS programs and files live. You should plan on doing this every time you want to use ROS. The most common mistake new users make is not running this command. If you're not sure whether you ran the command in a shell, that's okay. The command is idempotent; running it twice in a row won't break anything. You can run it a million times in a row and it won't make any difference.
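If you would rather check than guess, one option is to look at the environment variables that the setup file exports. The sketch below assumes that sourcing setup.bash sets ROS_DISTRO and ROS_VERSION, which we believe holds for ROS 2 releases such as Eloquent. The helper names ros_environment and describe are ours, purely for illustration.

```python
import os


def ros_environment(env=None):
    """Return the ROS-related variables found in an environment mapping."""
    env = os.environ if env is None else env
    # Assumption: these two names are exported by the ROS setup file.
    names = ("ROS_DISTRO", "ROS_VERSION")
    return {name: env[name] for name in names if name in env}


def describe(env=None):
    found = ros_environment(env)
    if "ROS_DISTRO" not in found:
        return "No ROS setup file appears to have been sourced in this shell."
    version = found.get("ROS_VERSION", "?")
    return f"This shell is set up for ROS {version} ({found['ROS_DISTRO']})."


# Report on the shell this script was started from.
print(describe())
```

Run it from a fresh terminal and then again after sourcing the setup file to see the difference.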

The other command you need to commit to memory is ros2. Almost everything in the ROS 2 CLI starts with ros2. Go ahead and try it in the same shell where you just sourced the setup file.

If everything is working correctly you should see the following:

$ ros2

usage: ros2 [-h] Call `ros2 <command> -h` for more detailed usage. ...

ros2 is an extensible command-line tool for ROS 2.

optional arguments:

-h, --help show this help message and exit

Commands:

action Various action related sub-commands

component Various component related sub-commands

daemon Various daemon related sub-commands

doctor Check ROS setup and other potential issues

interface Show information about ROS interfaces

launch Run a launch file

lifecycle Various lifecycle related sub-commands

msg Various msg related sub-commands

multicast Various multicast related sub-commands

node Various node related sub-commands

param Various param related sub-commands

pkg Various package related sub-commands

run Run a package specific executable

security Various security related sub-commands

service Various service related sub-commands

srv Various srv related sub-commands

topic Various topic related sub-commands

wtf Use `wtf` as alias to `doctor`

Call `ros2 <command> -h` for more detailed usage.

From this one command you can figure out what every single ROS 2 CLI program does and how to use it. The ROS 2 CLI has a syntax just like most languages. All ROS CLI commands start with ros2, followed by a command. After the command any number of other things can come; you can append --help or -h to see the documentation and find out what arguments any of the commands are expecting.

The rest of this section just walks through each of the commands one by one.

Writing commands using the command line is tricky and error-prone. There are a couple of tools you can use to make the process much smoother. The first is the TAB key, which attempts to autocomplete whatever you type. It can't read your mind, but for common command combinations you usually only need to type the first one or two letters. Another tool is the up arrow key. When you use the command line you will sometimes mistype a command, or need to rerun one. Pressing the up key will cycle through the previous commands, which you can modify and rerun as needed.

Running Your First ROS Program

Let's get started with our first ROS CLI command. The first command we'll visit is run. Let's start by looking at the documentation for the run command:

$ ros2 run

usage: ros2 run [-h] [--prefix PREFIX] package_name executable_name ...

ros2 run: error: the following arguments are required: package_name, executable_name, argv

To get more complete information about a ROS 2 command, simply ask the command for help by adding --help to the command. Let's try that again:

$ ros2 run --help

usage: ros2 run [-h] [--prefix PREFIX] package_name executable_name ...

Run a package specific executable

positional arguments:

package_name Name of the ROS package

executable_name Name of the executable

argv Pass arbitrary arguments to the executable

optional arguments:

-h, --help show this help message and exit

--prefix PREFIX Prefix command, which should go before the executable.

Command must be wrapped in quotes if it contains spaces

(e.g. --prefix 'gdb -ex run --args').

We can see that ros2 run is the command to "Run a package specific executable." In ROS 2, collections of ROS software are gathered into logical units called packages. Each package contains all of the source code for the package as well as a variety of other data that tells ROS how to build and compile the package and the names of all the programs, also called executables, that can be found in the package. The line below the description then gives the positional arguments for the command. Positional arguments are the words and values that come after ros2 and the command you run. In this case the syntax for the command sentence we want to write is as follows:

ros2 run <package name> <program/executable name> <args>

There is one piece of missing information here. What is this argv that the command is asking for? The argv element is programmer shorthand for "argument vector", and it simply means, "some number of additional arguments that are determined by the executable". It is worth noting that a program can have zero arguments and you can just leave it blank. This is actually how a lot of programs work. For example, say we had a package called math, and an executable called add that takes in two numbers and returns their sum. In this case argv would be the two numbers to add. The final command would look like:

ros2 run math add 1 2
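To see what the executable's side of this might look like, here is a sketch of the imaginary add program in Python. Neither the math package nor the add executable exists; we are only illustrating how the trailing arguments reach the program as a list of strings, which a Python script would normally read from sys.argv[1:].

```python
def add(argv):
    """Add the two numbers passed as extra command-line arguments.

    argv holds only the arguments after the executable name, for example
    ['1', '2'] for the command shown above. In a standalone script this
    list would typically come from sys.argv[1:].
    """
    if len(argv) != 2:
        raise SystemExit("usage: add <a> <b>")
    # Arguments always arrive as strings, so convert them first.
    a, b = (float(arg) for arg in argv)
    return a + b


print(add(["1", "2"]))  # 3.0
```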

Finally, below the positional arguments we have optional arguments. As the name suggests, you can leave them out unless you need them.

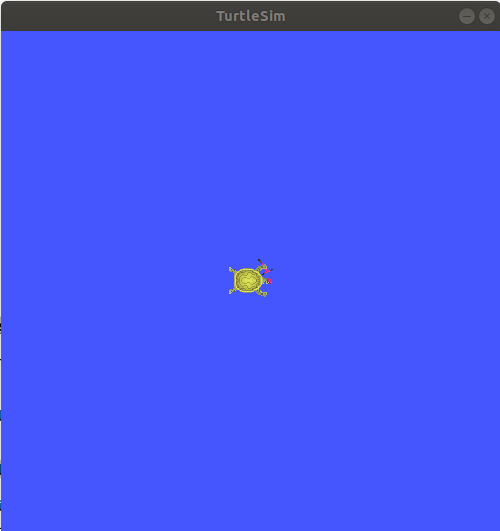

Now that we've looked into our help file let's run our first ROS program. For

these tutorials we're going to use a package called turtlesim, and the program

we want to run is turtlesim_node. Let's run this program (remember your tab

complete!). Your command should look like the following:

ros2 run turtlesim turtlesim_node

If everything goes smoothly you should see the following:

[INFO] [turtlesim]: Starting turtlesim with node name /turtlesim

[INFO] [turtlesim]: Spawning turtle [turtle1] at x=[5.544445], y=[5.544445], theta=[0.000000]

A window should also pop up with a cute little turtle that looks like the one below:

The real power in ROS isn't that it can run a program, it is that it can run lots of programs all at the same time, all talking together to control a robot, or multiple robots, all working together. To illustrate this let's run a second ROS program that makes our little turtle move around.

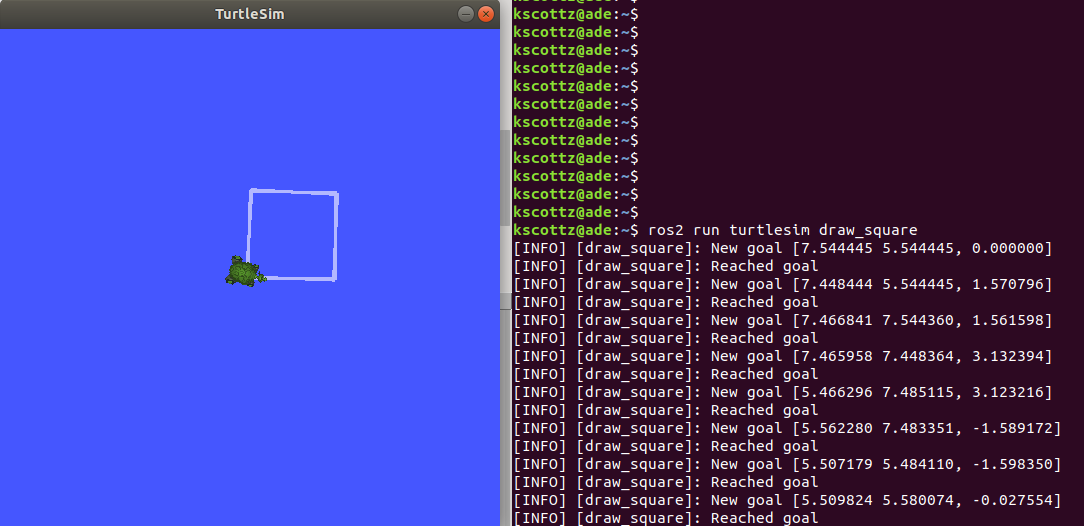

To do this we'll first open a new terminal (using CTRL-SHIFT-T). Next we'll

tell that terminal that we want to use ROS Eloquent by using the source command.

Finally, we'll run another program in the

turtlesim package to draw a square. See if you can find the program

yourself (hint: use TAB). If everything works you should have typed the following, and the

following output should be visible:

$ source /opt/ros/eloquent/setup.bash

$ ros2 run turtlesim draw_square

[INFO] [draw_square]: New goal [7.544445 5.544445, 0.000000]

[INFO] [draw_square]: Reached goal

[INFO] [draw_square]: New goal [7.448444 5.544445, 1.570796]

[INFO] [draw_square]: Reached goal

Your screen should look roughly like this:

It is worth noting that you can stop any ROS program by pressing the Ctrl and C keys at the same time in the terminal; we call this CTRL-C (note that CTRL-SHIFT-C and CTRL-SHIFT-V are responsible for copy and paste in a Linux terminal). Feel free to try it out. Start and stop the programs, and then restart them before moving on.

ROS Topics

We now have two ROS 2 programs running from the turtlesim package. There is turtlesim_node, which opens our turtle simulation, and draw_square, which makes the turtle in turtlesim_node move around. How are these two programs communicating?

ROS programs, also called nodes, communicate over topics on the ROS message bus. ROS topics use namespaces to distinguish themselves. For example, in a vehicle running ROS, the velocity of each wheel may be organized as follows:

/wheels/front/driver/velocity

/wheels/front/passenger/velocity

/wheels/rear/driver/velocity

/wheels/rear/passenger/velocity

The key thing to realize about topics is that the data they contain is dynamic, meaning it changes constantly. In our vehicle example the velocity of each wheel might be measured one thousand times a second or more. Since the data in a ROS topic is constantly changing, an important distinction is whether a node is creating the data on a topic, or as we like to say in ROS publishing, or reading the data, which we call subscribing to the topic. Many ROS nodes subscribe to one set of topics, process that input data, and then publish to another set of topics.

Let's return to our turtlesim example and see if we can use the ROS CLI to understand the topics, publishers, and subscribers.

To see sub commands and syntax for the topic command, we'll run: ros2 topic --help.

This command outputs the following:

$ ros2 topic --help

usage: ros2 topic [-h] [--include-hidden-topics]

Call `ros2 topic <command> -h` for more detailed usage. ...

Various topic related sub-commands

optional arguments:

-h, --help show this help message and exit

--include-hidden-topics

Consider hidden topics as well

Commands:

bw Display bandwidth used by topic

delay Display delay of topic from timestamp in header

echo Output messages from a topic

find Output a list of available topics of a given type

hz Print the average publishing rate to screen

info Print information about a topic

list Output a list of available topics

pub Publish a message to a topic

type Print a topic's type

Call `ros2 topic <command> -h` for more detailed usage.

There are quite a few sub commands; we won't discuss all of them, but let's look closely at a few. Sub commands have their own help command. Why don't we examine the list command? Repeating our command pattern, let's try running ros2 topic list --help.

usage: ros2 topic list [-h] [--spin-time SPIN_TIME] [-t] [-c]

[--include-hidden-topics]

Output a list of available topics

optional arguments:

-h, --help show this help message and exit

--spin-time SPIN_TIME

Spin time in seconds to wait for discovery (only

applies when not using an already running daemon)

-t, --show-types Additionally show the topic type

-c, --count-topics Only display the number of topics discovered

--include-hidden-topics

Consider hidden topics as well

As indicated at the top of this command help file, ros2 topic list will "Output a list of available topics." There appears to be a variety of optional arguments that we don't need to include if we don't want to. However, the -t, --show-types line looks interesting. It is worth noting that command arguments, sometimes called flags, can come in two forms: a short form indicated with a single dash ("-"), and a long form indicated by a double dash ("--"). Don't worry, despite looking different, both versions of the argument do the same thing. Let's try running this command, sub command pair with the --show-types argument.

$ ros2 topic list --show-types

/parameter_events [rcl_interfaces/msg/ParameterEvent]

/rosout [rcl_interfaces/msg/Log]

/turtle1/cmd_vel [geometry_msgs/msg/Twist]

/turtle1/color_sensor [turtlesim/msg/Color]

/turtle1/pose [turtlesim/msg/Pose]

On the left hand side we see all of the ROS topics running on the system, each starting with /. We can see that most of them are gathered in the /turtle1/ group. This group defines all the inputs and outputs of the little turtle on our screen. The words in brackets ([]) to the right of the topic names define the messages used on the topic. Our car wheel example was simple, since we were only publishing velocity, but ROS allows you to publish more complex data structures that are defined by a message type. When we added the --show-types flag we told the command to include this information. We'll dig into messages in detail a bit later.
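If you ever want to work with this listing in a script, the output is easy to pick apart. The sketch below uses only the Python standard library and assumes the output looks exactly like the listing above, one topic per line with a single type in brackets. The function names parse_topic_list and group_by_namespace are our own, not part of ROS.

```python
import re
from typing import Dict, List

# Matches lines such as "/turtle1/pose [turtlesim/msg/Pose]".
LINE_PATTERN = re.compile(r"^(?P<topic>/\S*)\s+\[(?P<type>[^\]]+)\]$")

SAMPLE = """\
/parameter_events [rcl_interfaces/msg/ParameterEvent]
/rosout [rcl_interfaces/msg/Log]
/turtle1/cmd_vel [geometry_msgs/msg/Twist]
/turtle1/color_sensor [turtlesim/msg/Color]
/turtle1/pose [turtlesim/msg/Pose]
"""


def parse_topic_list(text: str) -> Dict[str, str]:
    """Map each topic name to the message type shown in brackets."""
    topics: Dict[str, str] = {}
    for line in text.splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:
            topics[match.group("topic")] = match.group("type")
    return topics


def group_by_namespace(topics: Dict[str, str]) -> Dict[str, List[str]]:
    """Group topic names by their first namespace; top-level topics go under "/"."""
    groups: Dict[str, List[str]] = {}
    for name in topics:
        parts = name.strip("/").split("/")
        namespace = "/" + parts[0] if len(parts) > 1 else "/"
        groups.setdefault(namespace, []).append(name)
    return groups


if __name__ == "__main__":
    topics = parse_topic_list(SAMPLE)
    for namespace, names in group_by_namespace(topics).items():
        print(namespace, names)
```

Grouping by the first path segment is a simplification; real systems can nest namespaces more deeply.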

One of the more commonly used topic sub commands is

info. Unsurprisingly, info provides info about a topic. Let's peek at its

help file using ros2 topic info --help

$ ros2 topic info --help

usage: ros2 topic info [-h] topic_name

Print information about a topic

positional arguments:

topic_name Name of the ROS topic to get info (e.g. '/chatter')

optional arguments:

-h, --help show this help message and exit

That seems pretty straightforward. Let's give it a go by running it on /turtle1/pose:

$ ros2 topic info /turtle1/pose

Type: turtlesim/msg/Pose

Publisher count: 1

Subscriber count: 1

What does this command tell us? First it tells us the message type for the /turtle1/pose topic, which is turtlesim/msg/Pose. From this we can determine that the message type comes from the turtlesim package, and its type is Pose. ROS messages have a predefined message type that can be shared by different programming languages and between different nodes. We can also see that this topic has a single publisher, that is to say a single node generating data on the topic. The topic also has a single subscriber, also called a listener, which is processing the incoming pose data.
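The way a type name breaks down into a package and a message name can be sketched in a few lines of Python. This is only an illustration: split_interface_name is a hypothetical helper of our own, and it assumes the three-part package/kind/name form printed by ros2 topic info.

```python
from typing import NamedTuple


class InterfaceName(NamedTuple):
    package: str  # e.g. "turtlesim"
    kind: str     # e.g. "msg"
    name: str     # e.g. "Pose"


def split_interface_name(type_name: str) -> InterfaceName:
    """Split a name like "turtlesim/msg/Pose" into its three parts."""
    parts = type_name.split("/")
    # Reject anything that is not exactly three non-empty parts,
    # which also rejects names with a leading slash.
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected package/kind/name, got {type_name!r}")
    return InterfaceName(*parts)


if __name__ == "__main__":
    print(split_interface_name("turtlesim/msg/Pose"))
```

Note that, unlike topic names, the type name has no leading slash, so this helper treats one as an error.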

If we only wanted to know the message type of a topic, there is a sub command just for that called type. Let's take a look at its help file and its result:

$ ros2 topic type --help

usage: ros2 topic type [-h] topic_name

Print a topic's type

positional arguments:

topic_name Name of the ROS topic to get type (e.g. '/chatter')

optional arguments:

-h, --help show this help message and exit

$ ros2 topic type /turtle1/pose

turtlesim/msg/Pose

While it is not part of the topic command it is worthwhile for us to jump ahead

briefly and look at one particular command, sub command pair, namely the interface

command and the show sub command. This sub command will print all the

information related to a message type so you can better understand the data

being moved over a topic. In the previous example we saw that the topic type

sub command told us the /turtle1/pose topic has a type turtlesim/msg/Pose.

But what does turtlesim/msg/Pose data look like? We can look at the data

structure transferred by this topic by running the ros2 interface show

sub command and giving the message type name as an input. Let's look at the help

for this sub command and its output:

$ ros2 interface show --help

usage: ros2 interface show [-h] type

Output the interface definition

positional arguments:

type Show an interface definition (e.g. "std_msgs/msg/String")

optional arguments:

-h, --help show this help message and exit

$ ros2 interface show turtlesim/msg/Pose

float32 x

float32 y

float32 theta

float32 linear_velocity

float32 angular_velocity

We can see the values x and y which are the position coordinates of our turtle,

and that they are of type float32.

theta is the direction the head is pointing. The

next two values, linear_velocity and angular_velocity, are how

fast the turtle is moving and how quickly it is turning, respectively. To summarize, this

message tells us where a turtle is on the screen, where it is headed, and how

fast it is moving or rotating.
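One way to get a feel for these five fields is to mirror them in a small Python class and use them to guess where the turtle will be a moment later. This is a sketch, not turtlesim's own code: the Pose dataclass and the predict function are our own, and we assume theta is an angle in radians measured from the positive x axis and that the turtle moves along its heading.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Plain-Python mirror of the fields listed for turtlesim/msg/Pose."""
    x: float
    y: float
    theta: float
    linear_velocity: float
    angular_velocity: float


def predict(pose: Pose, dt: float) -> Pose:
    """Estimate the pose after dt seconds with a simple one-step update.

    Assumes theta is in radians and the velocities stay constant over dt.
    """
    return Pose(
        x=pose.x + pose.linear_velocity * math.cos(pose.theta) * dt,
        y=pose.y + pose.linear_velocity * math.sin(pose.theta) * dt,
        theta=pose.theta + pose.angular_velocity * dt,
        linear_velocity=pose.linear_velocity,
        angular_velocity=pose.angular_velocity,
    )


if __name__ == "__main__":
    start = Pose(x=0.0, y=0.0, theta=0.0, linear_velocity=1.0, angular_velocity=0.0)
    print(predict(start, dt=2.0))
```

The single-step update is only accurate for small values of dt when the turtle is turning, since the heading changes during the step.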

Now that we know what ROS topics are on our simple turtlesim, and their message

types, we can dig in and find out more about how everything works. If we look

back at our topic sub commands, we can see a sub command called echo. Echo is

computer jargon that means "repeat" something. If you echo a topic it means you

want the CLI to repeat what's on a topic. Let's look at the echo sub command's

help text:

$ ros2 topic echo --help

usage: ros2 topic echo [-h]

[--qos-profile {system_default,sensor_data,services_default,parameters,parameter_events,action_status_default}]

[--qos-reliability {system_default,reliable,best_effort}]

[--qos-durability {system_default,transient_local,volatile}]

[--csv] [--full-length]

[--truncate-length TRUNCATE_LENGTH] [--no-arr]

[--no-str]

topic_name [message_type]

Output messages from a topic

positional arguments:

topic_name Name of the ROS topic to listen to (e.g. '/chatter')

message_type Type of the ROS message (e.g. 'std_msgs/String')

optional arguments:

-h, --help show this help message and exit

--qos-profile {system_default,sensor_data,services_default,parameters,parameter_events,action_status_default}

Quality of service preset profile to subscribe with

(default: sensor_data)

--qos-reliability {system_default,reliable,best_effort}

Quality of service reliability setting to subscribe

with (overrides reliability value of --qos-profile

option, default: best_effort)

--qos-durability {system_default,transient_local,volatile}

Quality of service durability setting to subscribe

with (overrides durability value of --qos-profile

option, default: volatile)

--csv Output all recursive fields separated by commas (e.g.

for plotting)

--full-length, -f Output all elements for arrays, bytes, and string with

a length > '--truncate-length', by default they are

truncated after '--truncate-length' elements with

'...''

--truncate-length TRUNCATE_LENGTH, -l TRUNCATE_LENGTH

The length to truncate arrays, bytes, and string to

(default: 128)

--no-arr Don't print array fields of messages

--no-str Don't print string fields of messages

Wow, that's a lot of features. The top of the help file says that this CLI program "output[s] messages from a topic." As we scan the positional arguments we see one required argument, a topic name, and an optional message type. We know the message type is optional because it has square brackets ([]) around it. Let's give the simple case a whirl before we address some of the optional elements. Two things to keep in mind: first, topic names are long and easy to mess up, so use the TAB key to auto-complete them. Second, this will print a lot of data, fast. You can use CTRL-C to stop the command and stop all the output. Let's take a look at the /turtle1/pose topic.

$ ros2 topic echo /turtle1/pose

x: 5.4078755378723145

y: 7.081490516662598

theta: -1.0670461654663086

linear_velocity: 1.0

angular_velocity: 0.0

---

x: 5.4155988693237305

y: 7.067478179931641

theta: -1.0670461654663086

linear_velocity: 1.0

angular_velocity: 0.0

---

x: 5.423322677612305

y: 7.053465843200684

theta: -1.0670461654663086

linear_velocity: 1.0

angular_velocity: 0.0

---

<<GOING ON FOREVER>>

Let's examine what is going on. Between the

dashes (---) is a single ROS message on our topic. If you examine the numbers

closely you can see that they are changing, and doing so in relation to the

movement of the turtle. Going back to our car example you can see how this would

be useful for understanding the instantaneous velocity of each of our wheels.
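Because each message sits between lines of dashes, the echoed text is simple to split back into individual messages. The sketch below does this with plain Python. It assumes flat messages whose fields are all numbers, like the pose output above, and would need a real YAML parser for nested messages. parse_echo_output is our own name, not a ROS function.

```python
from typing import Dict, List

SAMPLE_OUTPUT = """\
x: 5.4078755378723145
y: 7.081490516662598
theta: -1.0670461654663086
linear_velocity: 1.0
angular_velocity: 0.0
---
x: 5.4155988693237305
y: 7.067478179931641
theta: -1.0670461654663086
linear_velocity: 1.0
angular_velocity: 0.0
---
"""


def parse_echo_output(text: str) -> List[Dict[str, float]]:
    """Split echoed text on '---' lines into one dict per message."""
    messages: List[Dict[str, float]] = []
    current: Dict[str, float] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        if line == "---":
            # A dashed line closes the message collected so far.
            if current:
                messages.append(current)
            current = {}
            continue
        key, _, value = line.partition(":")
        current[key.strip()] = float(value)
    # Keep a trailing message that was not followed by dashes.
    if current:
        messages.append(current)
    return messages


if __name__ == "__main__":
    first, second = parse_echo_output(SAMPLE_OUTPUT)
    print("x moved by", second["x"] - first["x"])
```

Comparing consecutive messages this way is a quick check that the numbers really do track the turtle's motion.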

Now that we have the basics down let's dig into a few of the optional arguments. We see a variety of arguments that start with --qos. "QOS" here means "quality of service" and it is a really cool feature that is only in ROS 2. Without getting too technical, QOS is a way of asking for a certain level of networking robustness. A ROS system can operate over a network, and just like streaming video or video games, packets can get dropped or not get to their destination. The QOS settings help you control which packets are the most important and should get the highest priority.

Most of the other arguments deal with changing the output format of this CLI program, but there is one in particular that is super handy, and it is also new in ROS 2. The --csv flag stands for "comma separated values" and it is a very simple way of defining a spreadsheet. What this argument does is make the topic echo command output data in the comma separated value format. Many command lines allow you to send data from the screen to a file, saving the data for later review or analysis. To do this file saving in Linux we use the > character followed by a file name. Below are two examples of using the --csv argument:

$ ros2 topic echo /turtle1/pose --csv

7.097168922424316,8.498645782470703,2.442624092102051,0.0,0.4000000059604645

7.097168922424316,8.498645782470703,2.449024200439453,0.0,0.4000000059604645

...

<<CTRL-C>>

$ ros2 topic echo /turtle1/pose --csv > mydata.csv

<<nothing happens>>

<<CTRL-C>>

The second command above creates a file called mydata.csv. You can look at it

using a CLI utility called less (press q to quit), or open it with your

favorite spreadsheet tool.
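You can also load the saved file in a short script. The sketch below uses Python's built-in csv module. The file has no header row, so we assume the columns follow the field order of turtlesim/msg/Pose (x, y, theta, linear_velocity, angular_velocity), which matches the sample rows above. read_pose_csv is a name we made up for this example.

```python
import csv
from typing import Dict, Iterable, List

# Assumed column order: the field order of turtlesim/msg/Pose.
POSE_FIELDS = ["x", "y", "theta", "linear_velocity", "angular_velocity"]


def read_pose_csv(lines: Iterable[str]) -> List[Dict[str, float]]:
    """Turn CSV lines into one dict of floats per complete row."""
    rows: List[Dict[str, float]] = []
    for row in csv.reader(lines):
        if len(row) != len(POSE_FIELDS):
            # Skip blank or partial lines, e.g. one cut short by CTRL-C.
            continue
        rows.append({name: float(value) for name, value in zip(POSE_FIELDS, row)})
    return rows


if __name__ == "__main__":
    # Assumes mydata.csv was created by the redirect shown above.
    with open("mydata.csv", newline="") as csv_file:
        poses = read_pose_csv(csv_file)
    print(f"read {len(poses)} poses")
```

Since read_pose_csv accepts any iterable of lines, you can also hand it a list of strings to try it out without a file.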

Now that we've looked at ros2 topic echo let's take a look at a few other topic sub commands. One thing you may have noticed is that topics can output a lot of data! More complex robots, like a self driving car, can saturate a high speed internet connection with how much data they produce. There are two topic sub commands that can be used to diagnose performance issues. The first sub command is topic hz, where hz is the abbreviation of Hertz, the unit of frequency. The hz sub command will tell you how often a particular topic produces a message. Similarly there is the topic bw sub command, where bw stands for bandwidth, which is an engineering term related to the volume of data being produced. A high bandwidth connection can move more data, like high definition video, than a low bandwidth connection, which might move a radio show. Let's take a look at the help for these two commands:

$ ros2 topic hz --help

usage: ros2 topic hz [-h] [--window WINDOW] [--filter EXPR] [--wall-time]

topic_name

Print the average publishing rate to screen

positional arguments:

topic_name Name of the ROS topic to listen to (e.g. '/chatter')

optional arguments:

-h, --help show this help message and exit

--window WINDOW, -w WINDOW

window size, in # of messages, for calculating rate

(default: 10000)

--filter EXPR only measure messages matching the specified Python

expression

--wall-time calculates rate using wall time which can be helpful

when clock is not published during simulation

$ ros2 topic bw --help

usage: ros2 topic bw [-h] [--window WINDOW] topic

Display bandwidth used by topic

positional arguments:

topic Topic name to monitor for bandwidth utilization

optional arguments:

-h, --help show this help message and exit

--window WINDOW, -w WINDOW

window size, in # of messages, for calculating rate

(default: 100)
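The --window option in both help texts is easier to picture with a small example. The sketch below keeps only the most recent timestamps and computes an average rate from them. It is an illustration of the windowing idea, not the actual implementation behind ros2 topic hz, and RateEstimator is a hypothetical class of our own.

```python
from collections import deque
from typing import Deque, Optional


class RateEstimator:
    """Average message rate over a sliding window of arrival times."""

    def __init__(self, window: int = 100) -> None:
        # A deque with maxlen silently drops the oldest entry when full.
        self._stamps: Deque[float] = deque(maxlen=window)

    def add(self, stamp: float) -> None:
        """Record the arrival time of one message, in seconds."""
        self._stamps.append(stamp)

    def rate(self) -> Optional[float]:
        """Return messages per second, or None if there is too little data."""
        if len(self._stamps) < 2:
            return None
        elapsed = self._stamps[-1] - self._stamps[0]
        if elapsed <= 0:
            return None
        # N timestamps span N - 1 intervals.
        return (len(self._stamps) - 1) / elapsed


if __name__ == "__main__":
    estimator = RateEstimator(window=3)
    for stamp in [0.0, 0.5, 1.0, 1.5]:
        estimator.add(stamp)
    print(estimator.rate())
```

A small window reacts quickly to changes in the rate, while a large window gives a smoother average.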

Both bw and hz follow the same pattern: they simply take in a topic name along with a few optional arguments. The only argument worth noting is the window argument. Both of these commands calculate statistics for a series of messages; how many messages to use in calculating those statistics is the window

size. The default value for window is 100 for bw (and 10000 for hz), so when you call ros2 topic bw it